What is the Image Manager?

Image Manager Components

Image Manager DLLs

Image Manager ActiveX Controls

CVB Technology

Common Image Model

The Image Object

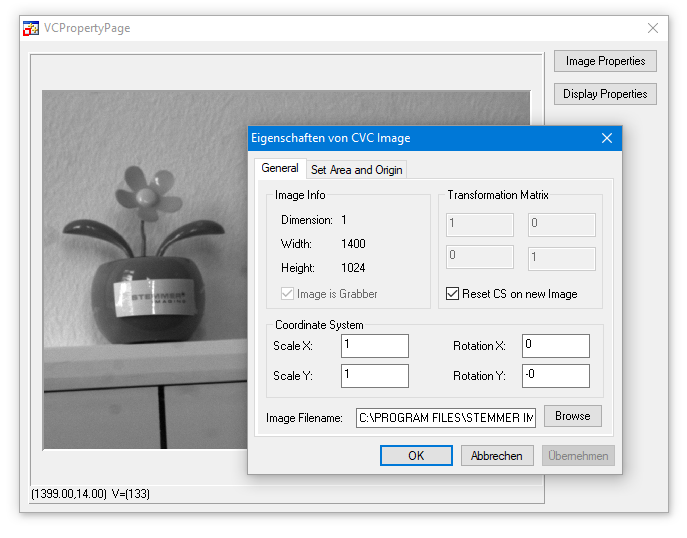

Coordinate System, Origin and Areas

Multithreading

Supported File Formats

High Dynamic Range Images

Image file handling

Acquisition device drivers and CVB Programming Interfaces

How to use the virtual driver

How to deal with video files

Areas of interest

Density and transformation matrix

Image Data Access

Unicode Support

Web Streaming

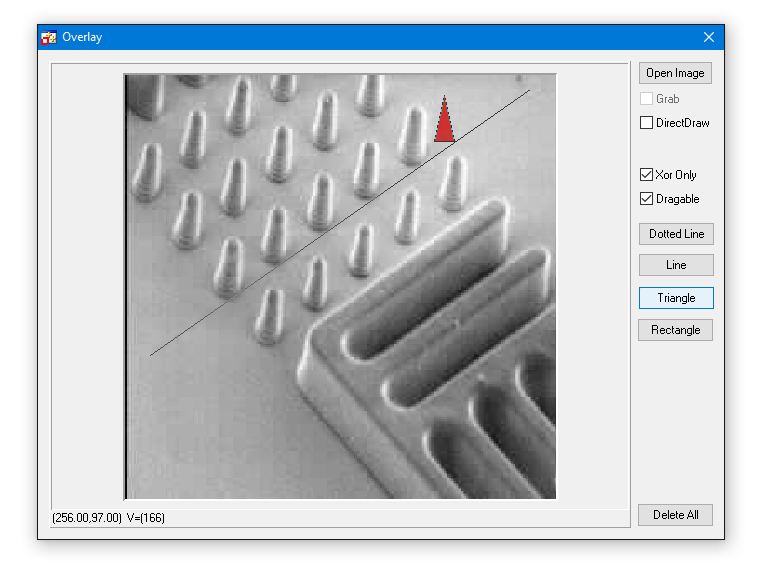

Destructive Overlays - Overview

Non-destructive Overlays

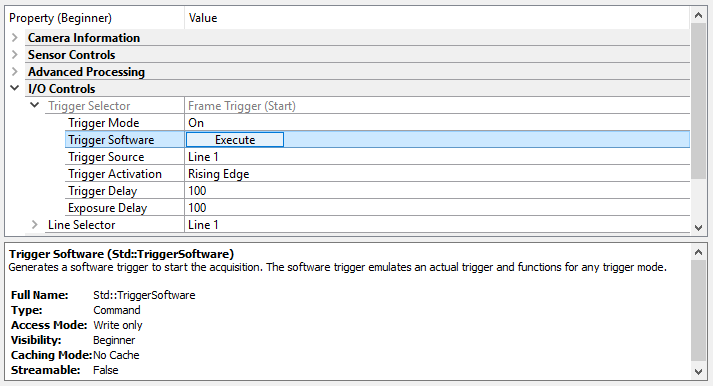

GenICam and CV GenAPI

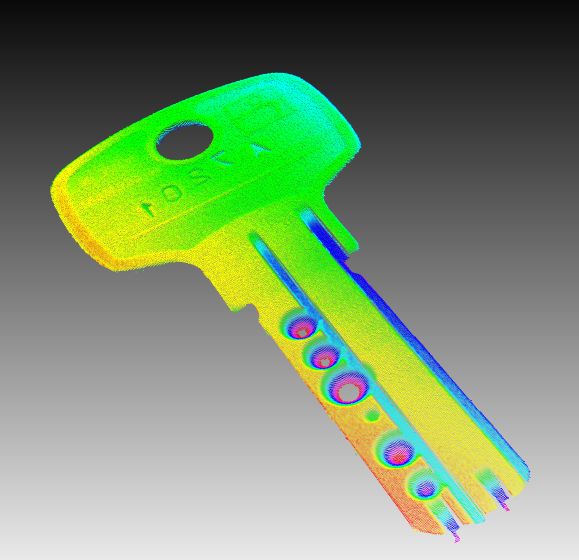

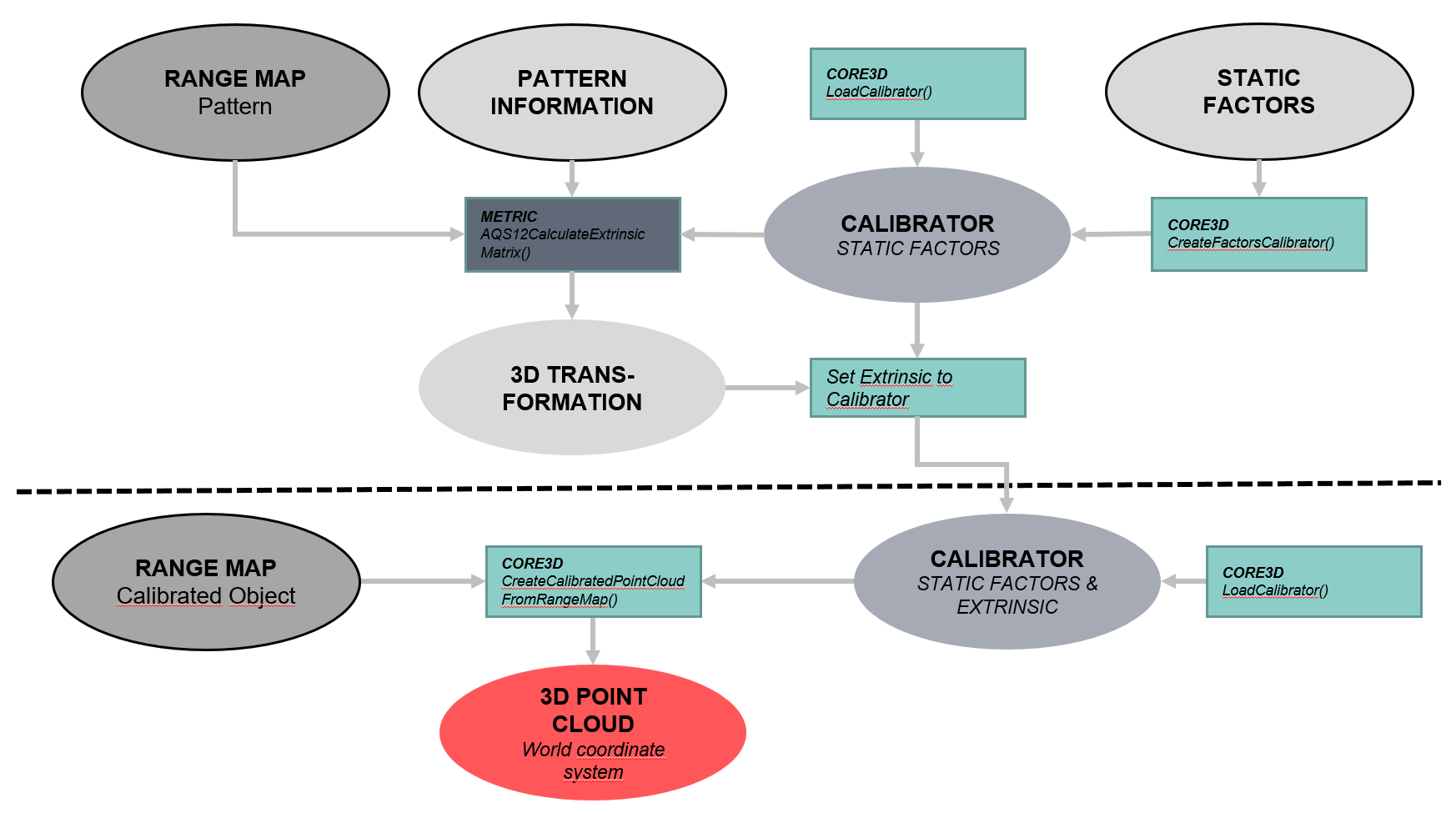

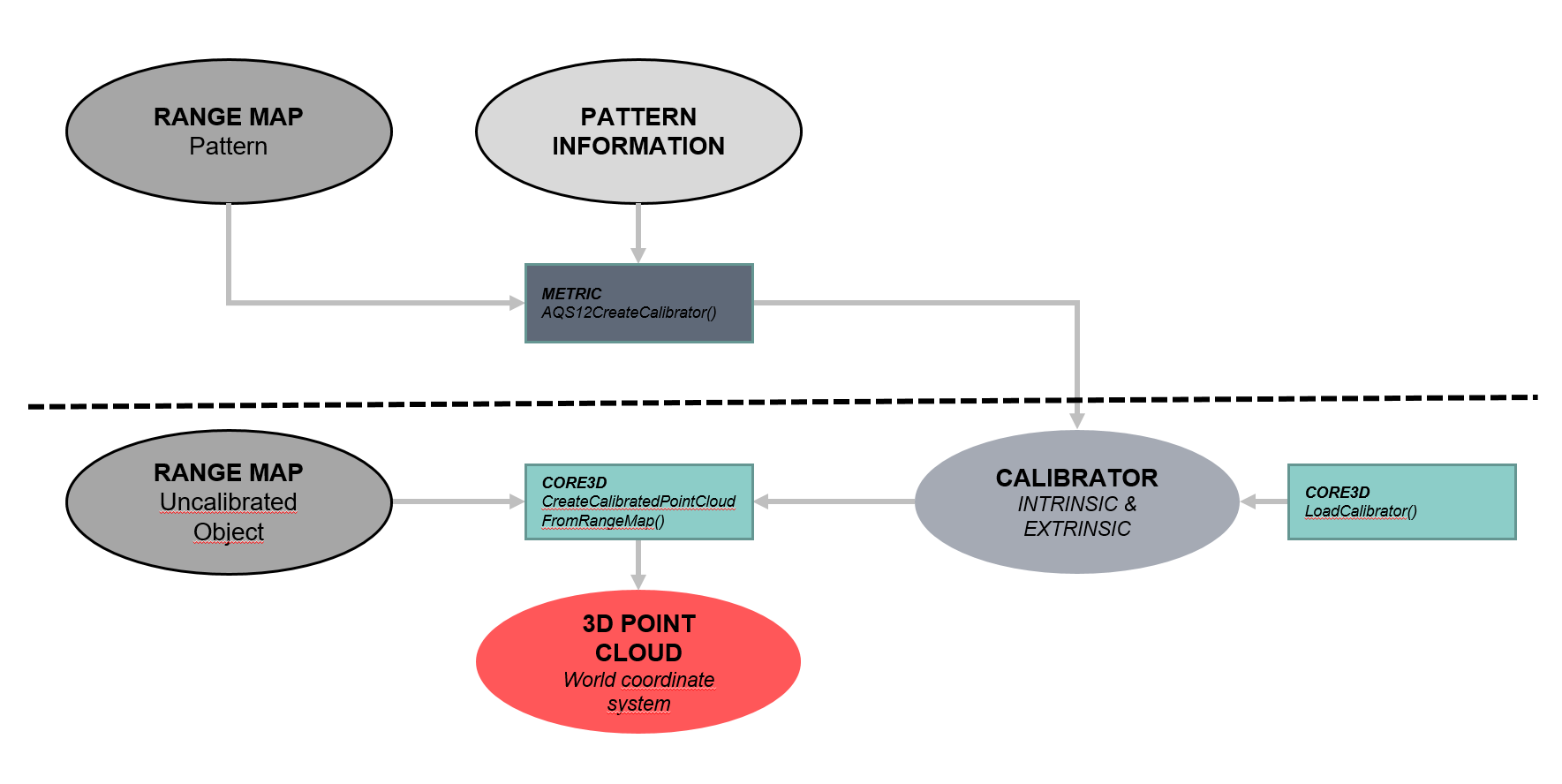

3D Functionality

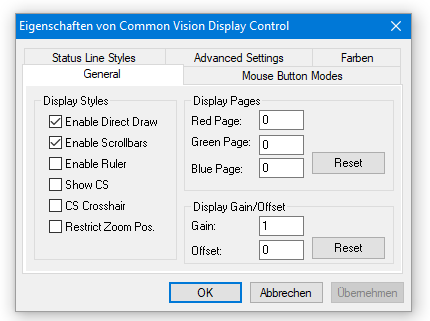

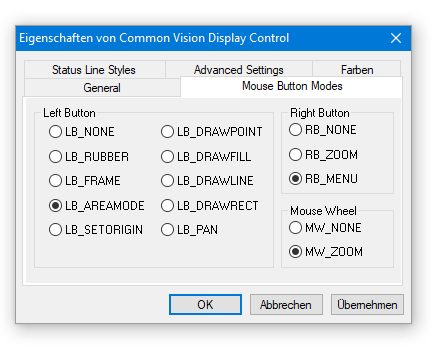

ActiveX Controls

Example Applications

Visual C++ Examples

.Net Examples

The CVB Image Manager is the basis for every Common Vision Blox application. The unique functionality and approach of the CVB Image Manager as an open standard provides an excellent basis for custom algorithms and applications in industrial image processing.

The CVB Image Manager offers unrivalled functionality in image acquisition, image handling, image display and image processing. It contains an extensive set of basic functionality allowing you to control many different types of image acquisition devices, as well as a wide range of image handling and processing functions. Furthermore it provides an optimised display with DirectX support and non-destructive overlays, a flexible coordinate system and support for multithreaded operation.

The image data access features of the CVB Image Manager also make it easy to create special algorithms for your specific application, starting from the tutorials delivered for all supported compilers.

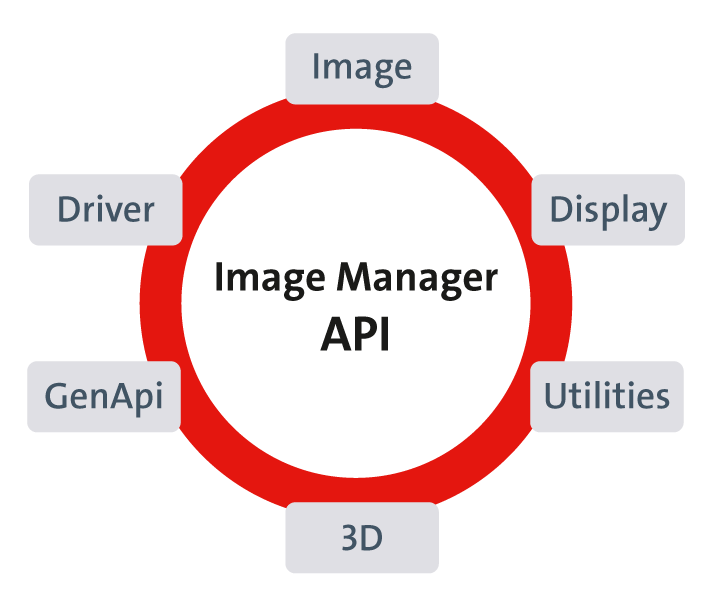

The functionality of the CVB Image Manager can roughly be split into the following groups:

Image Acquisition

Image Display

Image Handling

Image Processing

All Image Manager components are to be found in %CVB%. The complete API is available as CVB Reference. The core technology is based on DLL library files containing the full functionality of the Image Manager:

| Library | Description |
|---|---|
| Image: CVCImg.dll | This core library contains functions to generate, manage and manipulate image objects. |
| Driver: CVCDriver.dll | This library contains functions to control all image acquisition device drivers. |
| GenICam API: CVGenApi.dll | This library contains functions which provide easy control of a GenICam compliant device like a GigE Vision camera. |
| 3D: CVCore3D.dll | This 3D library contains the basic classes for manipulation of 3D data. |
| Utilities: CVCUtilities.dll | This library contains functions for accessing CVB image data directly as well as functions that are not directly associated with CVB, e.g. high performance counters. |
| Display: CVCDisp.dll | This core library contains functions to control every aspect of displaying images (not documented, for internal use only). |
| File: CVCFile.dll | This undocumented library is used to read and write different file formats. It is based on the AccuSoft ImageGear library. |
| WebStreaming: CVWebStreaming.dll | This library contains functions to set up a server and stream images. |

To make handling easier, seven ActiveX components (OCXs) have been developed to contain the functionality of the core libraries:

CVImage Control, CVdisplay Control, CVGrabber Control, CVDigIO Control, CVRingBuffer Control, CVGenAPiGrid control, CV3DViewer Control.

All the ActiveX components have the same structure and follow the same design rules to reduce development times and increase flexibility. The most important property that each of the controls contains is the Image property, which contains a handle to the referenced image object. At application runtime all controls need an image object for processing. This pipelining defines links between each CVB tool and image object. The following is an example of pipelining an image object from an Image control to a Display control:

CVDisplay.Image = CVImage.Image

It is not essential to use ActiveX components instead of DLL libraries but they can often be faster to develop with and easier to use. If a tool is available in ActiveX form then it has a number of standard methods and properties.

Each of the core Common Vision Blox DLLs (Dynamic Link Libraries) is described in more detail following the links.

| CVCImg.dll |

The CVCImg.dll library is the basis for all tool and application development. It contains facilities for:

Functions that address the Windows clipboard are also included. Find functionality with: Area, Matrix, Map, Rect, Coordinate, Dongle, License, Tool, Draw, Fill, Flood, Image, Create, Copy, Set, Write, Error, Object, etc. Image objects can be generated from both image files and driver files.

| CVCDisp.DLL |

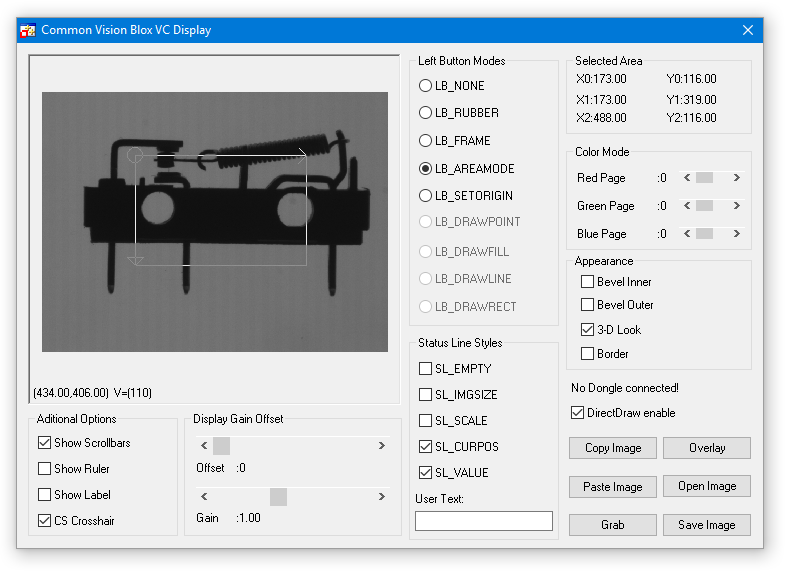

The CVCDisp.dll library contains functions for displaying images under Windows:

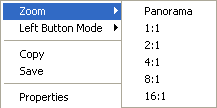

Find functionality with: Display, Object, Overlay, Area, Label, Image, Window, etc. Images are displayed using standard Windows functions (DIB) or by means of DirectDraw. If DirectDraw is used, all overlays are flicker-free.

| CVCDriver.DLL |

CVCDriver.dll is the one library that is continually growing within the Common Vision Blox concept. The library contains functions to

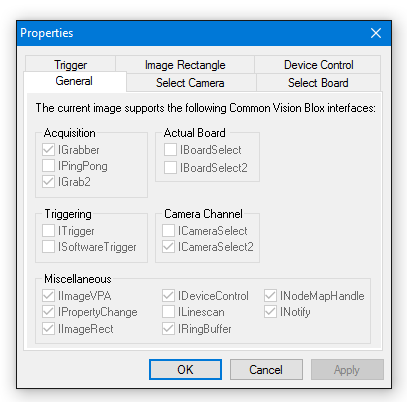

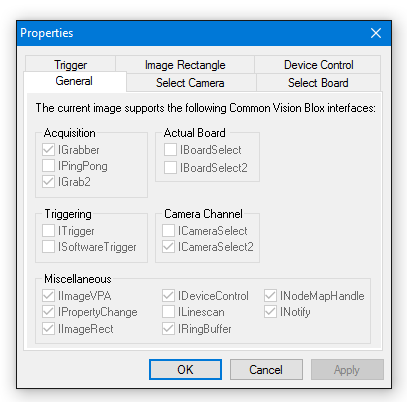

Find functionality with: BoardSelect and CameraSelect, DeviceControl, NodeMapHandle, Grab, Snap, Trigger, Image, Buffer, etc. A program or tool that requires a special interface should always verify if the required interface is available before attempting to access it.

| CVGenApi.DLL |

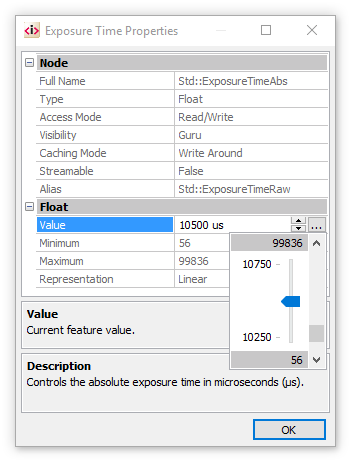

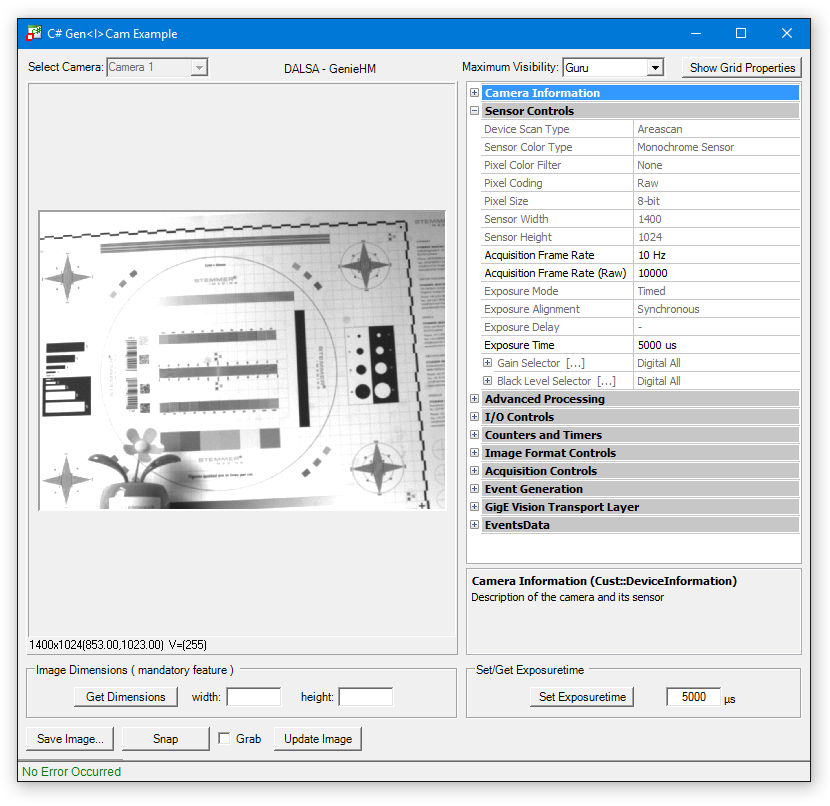

The GenAPI is part of the GenICam™ standard whereas the transport layer is typically provided by the camera or software vendor. The XML files are provided by the relevant vendor. At runtime, it may be necessary to modify individual camera features or present these to the end user. This can be achieved, on the one hand, using the CV Gen Api functions for accessing the CVB GenICam interface and, on the other, the Common Vision Gen Api Grid Control. The CVGenApi.dll library contains functions to provide easy control of a GenICam compliant device like a GigE Vision camera.

Find functionality with: NodeMap, NM, Node, N, Load, Save, Get, Set, Info, etc.

| CVCUtilities.DLL |

This library contains

| CVCore3D.DLL, CVCore.DLL |

These libraries contain functions for

Find functionality with: Create, Transform, Calculate, Rotate, PointCloud, Matrix, Vector, etc.

Each of the ActiveX components is described in more detail below and following the links:

| Core library used to create the CV Image Control component: CVImage.ocx |

The CVImage Control is the basis for all tool and application development. It contains facilities for:

Functions that address the Windows clipboard are also included. Image objects can be generated from both image files and driver files.

| Core library used to create the CVdisplay Control component: CVDisplay.ocx |

CVdisplay Control contains functions for:

Images are displayed using standard Windows functions (DIB) or by means of DirectDraw. If DirectDraw is used, all overlays are flicker-free.

ActiveX controls are available as simple wrappers for the CVCDriver.dll:

| CVGrabber Control: CVGrabber.ocx |

| CVDigIO Control: CVDigIO.ocx |

| CVRingBuffer Control: CVRingbuffer.ocx |

The CV GenAPI Grid Control contains functions that provide easy control of GenICam compliant devices such as GigE Vision cameras.

| Core library used to create the CV GenAPi Grid control: CVGenApiGrid.ocx |

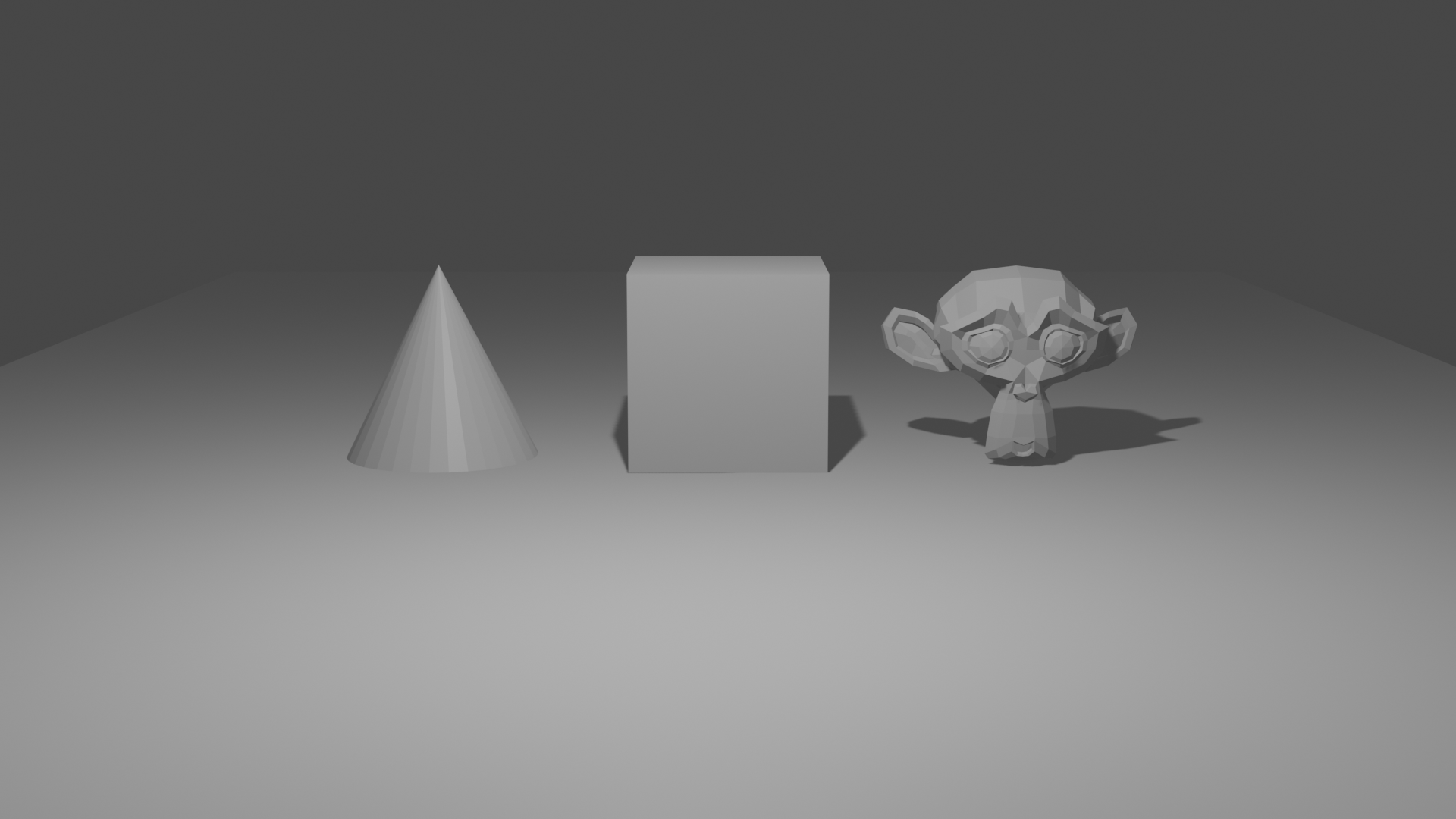

The CV Core3D Viewer Control (3D Display Control) contains functions to handle 3D data.

| Core library used to create the CV Core 3D Viewer control: Display3D.ocx |

Common Vision Blox is an open platform for image processing and machine vision development.

To maintain an open platform it is important to communicate the underlying technology and concepts to all users and developers. Communicating this technology involves describing the

This document describes in detail the proposal for a Common Image Model, it is split into the following sections:

Linear memory representations

Virtual Pixel Access

VPA Compatible memory representations

Coordinate System

Example VPA Tables

See also the Image Data Access chapter for information about working with CVB images.

What constitutes an image?

Physically, images are created through optical projection of some section of the real world onto a two dimensional surface e.g. the retina, CCD or CMOS device. The incident light reacts with some discrete set of sensors (Rods, Cones, Photosites...) distributed over this surface, changing the state of each sensor which serves to record local properties such as gray value, color, etc.

To us, an image in its most primitive sense is a state of such an ensemble of sensors. Mathematically it is a function assigning to every sensor, a state in the sensor's state space.

Parametrizing the sensors by their surface coordinates and assuming that their states can be modeled in some common, finite dimensional vector space you arrive at an analytical model of images:

The set P, the dimension d and the function v are the essential properties of an image.

With a basis of V fixed, then equivalently to (2) and (3) there are scalar functions $v_0, v_1, \dots, v_{d-1}$ such that $v(X, Y) = (v_0(X, Y), v_1(X, Y), \dots, v_{d-1}(X, Y))$. The functions $v_i$ are essentially scalar (gray scale) images and are called color planes or more generally image planes (RedPlane, BluePlane, GreenPlane, HuePlane, IntensityPlane, SaturationPlane, ...). Again equivalently there is a scalar function $v$ of $[0, \dots, d-1] \times P$ such that $v(i, X, Y) = v_i(X, Y)$ (e.g. $v(\text{Green}, 100, 120) = 17.5$, ...). This is the simplest and most general model of an image. It includes gray scale images, color images, pyramids, stereo images, finite time sequences of images, ...

To make the model suitable for computing, three problems have to be solved:

a) The representation of the model in memory

b) Given (X, Y) rapid decision if (X, Y) is in P (a legal pixel)

c) The rapid computation and/or modification of the function v

Problems (a) and (b) are made solvable by the following simplifications

i. P is a sub-rectangle in a two-dimensional square lattice. In fact, since global translation of image coordinates is not relevant we can assume that (0, 0) is one of the corners of the rectangle.

Therefore P is specified by the integral image properties (Width, Height).

This allows problem (b) to be solved with four comparisons.

The lattice assumption causes problems in rotations and other geometric transformations; interpolation mechanisms therefore have to be provided.

ii. The range of the functions $v_i$ is a set $T_i$ of numbers conveniently handled by a computer. This ranges from 8-bit (usual) to 64-bit (double precision) scalars, and may vary from image plane to image plane.

The data type $T_i$ should support basic arithmetic functions.

The number of bits to represent the i'th image plane is a further property of an image.

To summarise the definition of a CVB image here is a list of essential properties:

An image in the sense of CVB can therefore be regarded as a vertical stack of one-dimensional images. The height of the stack is the dimension of V (e.g. 1 for gray scale images, 3 for RGB color images, two for stereo or complex images, the height of the pyramid for pyramids, the length of the time sequence for time sequences, etc).

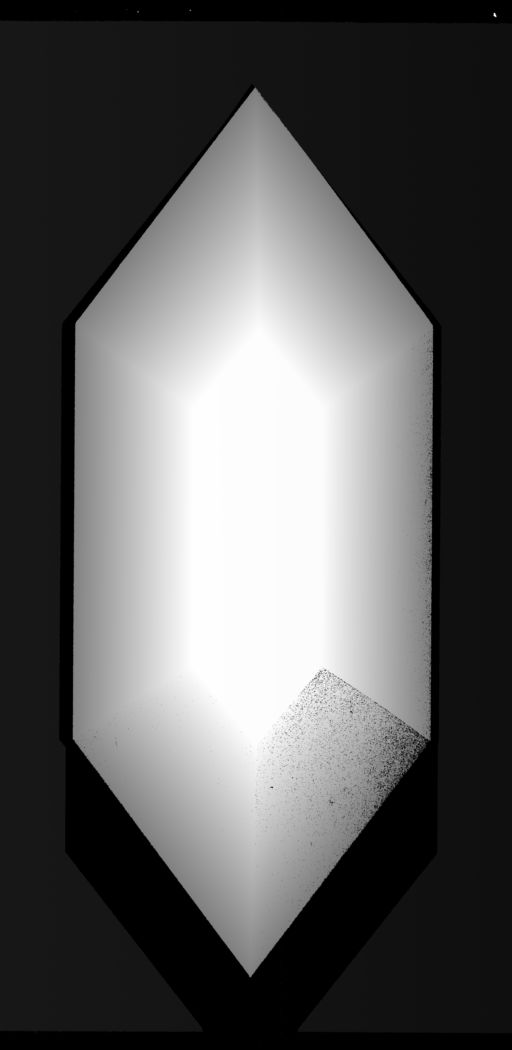

The diagram below illustrates the image concept.

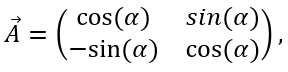

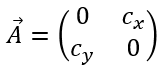

The Coordinate system, defined by an origin and a 2x2 matrix,

Number of Image planes (Dimension)

Data type of up to 255 Bits per pixel per plane

Virtual Pixel Address Table (VPAT) per plane

The planes are doubly linked lists which can be manipulated.

The problem of representing the image model in memory raises the question of compatibility. To make the software useful it has to be compatible with existing memory representations for restricted types of images, at least DIBs (because we work under Windows) and various image acquisition devices. If we disregard 1-bit and 4-bit DIBs we are still left with gray scale and color DIBs, which may or may not be upside down.

The situation is worse when we look at image acquisition devices, with conceivable combinations of interlaced and non-interlaced formats, 8, 10, 12, 24 or 32 bit pixels, various schemes for color representation, or possibly separate memory pages for the different color planes.

One solution is to fix some standard memory representation (e.g. DIB) and copy/translate an incoming image to this format whenever necessary. We reject this idea because it introduces redundant information involving additional memory and computation time. The argument that the copying time is negligible relative to processing on modern systems is not acceptable: what if we just want to monitor the gray values of a few pixels?

Instead, we propose to exploit the fact that most (flat) memory representations of image planes have a property of linearity: If the value for pixel location (X, Y) resides at address M(X, Y) then

M(X, Y) = M(0, 0) + X*DeltaX + Y*DeltaY

for some DeltaX, DeltaY. These, together with the base M(0, 0) can be regarded as properties of the image plane in question.

By communicating DeltaX and DeltaY together with M(0, 0) and some fundamental base offset for every image plane, we could augment the image model above with a description of how the pixels are placed in memory. This description would serve as a basis for a more rapid computation of the function v.

This is the starting point of the old MINOS driver model. Essentially the driver model replaced the idea of »image data« with »knowledge about image data«.

Rather than communicating the data itself, the driver only communicated information about the organization and location of the data.

The linearity formula above has three disadvantages:

The current version of Common Vision Blox resolves these three problems internally. By publishing the underlying mechanism, and simplifying and streamlining the definitions, the method can be made the basis of an image description format that is suitable for the purposes of high-speed machine vision.

The greatest problem in image processing is the rapid computation and/or modification of the function v. Given a linear memory representation of an image plane this amounts to rapid computation of the address

M(X, Y) = M(0, 0) + X*DeltaX + Y*DeltaY

for a given image plane. This is done in CVB with the aid of tables to effect the two multiplications involved and simultaneously resolve complexities such as interlaced scan lines.

To understand the basic idea we introduce two functions (tables)

XTable ( X ) := M(0, 0) + X*DeltaX, for X = 0, ..., Width - 1 and

YTable ( Y ) := Y*DeltaY, for Y = 0, ..., Height - 1.

Then the address is computed by

M(X, Y) = XTable ( X ) + YTable ( Y ),

which can be computed very rapidly.

The pixel reference tables XTable and YTable provide random access to pixels in a few clock cycles. Details and the associated data structures are described in the next section which also explains how the tables are to be programmed.
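The table construction above can be sketched in a few lines of Python. This is only an illustrative sketch, not CVB code: the function names `build_linear_tables` and `address` are hypothetical, and memory is modelled as a `bytes` object so that an "address" is simply an index into it. The tables are filled by repeated addition, so no multiplication is needed.

```python
def build_linear_tables(base, delta_x, delta_y, width, height):
    """Build XTable and YTable for a linear layout using additions only."""
    x_table = []
    addr = base                      # XTable carries the base M(0, 0)
    for _ in range(width):
        x_table.append(addr)
        addr += delta_x
    y_table = []
    offset = 0                       # YTable holds pure line offsets
    for _ in range(height):
        y_table.append(offset)
        offset += delta_y
    return x_table, y_table


def address(x_table, y_table, x, y):
    """M(X, Y) = XTable(X) + YTable(Y): two lookups and one addition."""
    return x_table[x] + y_table[y]


# A 4 x 3 8-bit image stored row by row (DeltaX = 1, DeltaY = width).
width, height = 4, 3
memory = bytes(range(width * height))
x_table, y_table = build_linear_tables(0, 1, width, width, height)
pixel = memory[address(x_table, y_table, 2, 1)]
```

Once the tables exist, the code that reads a pixel no longer needs to know DeltaX, DeltaY or the base address.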

In addition to providing random access, the tables are able to influence the underlying image geometry without any loss in subsequent computation time:

Suppose you interchange the tables, the resulting image is reflected and rotated by 90°. If you also reverse the order of one of the tables the result is a rotation without reflection. By omitting (duplicating) every other value in both tables you shrink (enlarge) the image by a factor of two. Practical applications are the zoom function in image display and the squaring of pixels from non square pixel image acquisition device.

It is important to realise that these operations need computations in the order O(Width + Height) when programmed through the tables, while the order is O(Width * Height) if the computations are carried out on the images themselves.

This is typically larger by a factor > 100. Of course the tables have to be programmed just once for a given target geometry of an image plane.
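The geometric effects described above can be tried out on a tiny image. The following Python sketch is an illustration under simple assumptions (memory as a `bytes` object, a hypothetical helper `read_image` that materialises the image only so the result can be inspected); in every case only the tables change, never the pixel data.

```python
def read_image(memory, x_table, y_table):
    """Materialise the image described by the two tables as a list of rows."""
    return [[memory[xe + ye] for xe in x_table] for ye in y_table]


width, height = 4, 2
memory = bytes(range(width * height))          # rows: 0 1 2 3 / 4 5 6 7
x_table = list(range(width))                   # base 0, DeltaX = 1
y_table = [y * width for y in range(height)]   # DeltaY = width

original = read_image(memory, x_table, y_table)
# Interchanging the tables reflects and rotates (a transposition).
transposed = read_image(memory, y_table, x_table)
# Reversing one of the interchanged tables gives a rotation without reflection.
rotated = read_image(memory, y_table[::-1], x_table)
# Omitting every other entry in both tables shrinks by a factor of two.
shrunk = read_image(memory, x_table[::2], y_table[::2])
# Duplicating every entry enlarges by a factor of two (shown for X only).
enlarged_x = [entry for entry in x_table for _ in range(2)]
```

Each new table is built in time proportional to its own length, which is where the O(Width + Height) cost comes from.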

We propose the tables as descriptors for images. We know of no other addressing scheme possessing the same generality, speed and flexibility. The principal philosophy is to shift the attention from the specific memory layout of an image to information about this layout.

It seems appropriate to call the corresponding image addressing virtual pixel access, VPA in short.

The VPA scheme clearly resolves the problem of interlaced images (an example is included). It would, in fact, also describe an interlaced image, where the odd field is upside down and the even field rightside up or more pathological cases.

It is interesting to identify the class of memory representations which can be represented by VPA tables.

The class evidently includes all linear representations. Which are excluded? The equation

M(X, Y) = XTable ( X ) + YTable ( Y ),

evidently implies that

M(X1, Y) - M(X2, Y) = XTable ( X1) - XTable(X2) is independent of Y, so that

M(X1, Y1) - M(X2, Y1) = M(X1, Y2) - M(X2, Y2)

for all X1, X2, Y1, Y2 which is equivalent to

M(X1, Y1) + M(X2, Y2) = M(X1, Y2) + M(X2, Y1),

for all X1, X2, Y1, Y2.

This equation exactly describes image planes which can be described by VPA tables. It must hold if the tables exist. On the other hand if the formula is valid then the required tables are easily seen to be

XTable(X) = M(X, 0) - M(0, 0)

YTable(Y) = M(0, Y)

The asymmetry is only superficial. We could have subtracted M(0,0) from the Ytable entries just as well.
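As a hedged illustration of this criterion, the Python sketch below derives the tables from an arbitrary address function using the two formulas above and then checks whether they reproduce every address. The function name `tables_from_address_map` and the sample layouts are assumptions made for this example only.

```python
def tables_from_address_map(m, width, height):
    """Return (x_table, y_table) for address function m(x, y),
    or None if the layout cannot be described by VPA tables."""
    x_table = [m(x, 0) - m(0, 0) for x in range(width)]
    y_table = [m(0, y) for y in range(height)]
    for y in range(height):
        for x in range(width):
            if x_table[x] + y_table[y] != m(x, y):
                return None          # scan lines are not translations of each other
    return x_table, y_table


# Linear layout: base 1000, DeltaX = 1, DeltaY = 640.
linear = tables_from_address_map(lambda x, y: 1000 + x + 640 * y, 4, 3)

# Interlaced layout: odd lines live in a second field 2048 bytes further on.
interlaced = tables_from_address_map(
    lambda x, y: (y % 2) * 2048 + 16 * (y // 2) + x, 4, 4)

# Serpentine layout: odd lines are stored right to left, so the
# representation scheme changes between scan lines.
serpentine = tables_from_address_map(
    lambda x, y: 4 * y + (x if y % 2 == 0 else 3 - x), 4, 2)
```

The first two layouts yield valid tables, while the serpentine layout is rejected because its scan lines are not translations of each other.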

In more intuitive terms the basic condition is that in memory, all scan lines (chaotic as they may be) are translations of each other, and that (equivalently) all scan columns are also translations of each other. Excluded memory representations therefore change the representation scheme between scan lines.

An example of an excluded representation is a four-quadrant type of frame grabber where the quadrants are mapped to essentially random offsets in memory. If the quadrants are mapped to equidistant offsets in memory (e.g. sequentially) the representation is already covered by VPA (bizarre as it may be).

This discussion is somewhat academic. We may simply summarize: Almost all practically relevant memory formats can be represented by VPA.

Examples for the most common ones will follow.

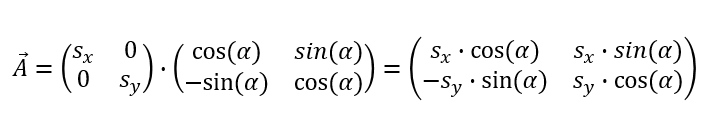

Normally the coordinate system of an image is fixed by the image itself. The origin is at the top left corner. The x axis extends along the top of the image, and the y axis extends down the left side. The unit of measure is one pixel.

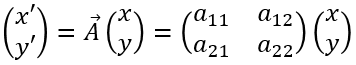

In many applications a flexible coordinate system can be a helpful tool. The coordinate system in CVB consists of the origin and a matrix defining the linear transformation of the image. A linear transformation can be described by a matrix A where:

$$A = \begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix}$$

The matrix A acts on any vector according to equation I:

A<x, y> = <x', y'> = <x*a11 + y*a12, x*a21 + y*a22>     (I)

Since each pixel in an image can be considered as a vector <x, y>, the matrix A acts on each pixel according to equation I, thus accomplishing linear transformation.

For example, the matrix A defined below acts on an image by doubling its height and doubling its width (a scaling factor of two):

$$A = \begin{pmatrix} 2 & 0 \\ 0 & 2 \end{pmatrix}$$

So, any pixel <x,y> in the image is transformed into the pixel <x', y'> where, by equation I:

<x', y'> = <x*2 + y*0, x*0 + y*2> = <2x, 2y>

Consider the pixel <50, 80>: A<50, 80> = <2*50, 2*80> = <100, 160>

The unit of measure becomes 1/2 pixel rather than one, thus increasing the virtual size of the image to 200% of its actual size.

An application does not have to support the coordinate system, but it is often a useful tool. The following formula converts a point P (given without a coordinate system) into a position in the image coordinate system (CS):

P' = P * CS + Origin
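A minimal Python sketch of this conversion follows. It assumes that the matrix is applied as in the component form of the matrix equation given earlier (x' = x*a11 + y*a12, y' = x*a21 + y*a22) and that the origin is added afterwards; the function name `to_image_cs` and the tuple representation are hypothetical and not part of the CVB API.

```python
def to_image_cs(point, matrix, origin):
    """Apply the 2x2 matrix to the point, then add the origin."""
    x, y = point
    (a11, a12), (a21, a22) = matrix
    return (x * a11 + y * a12 + origin[0],
            x * a21 + y * a22 + origin[1])


scale_by_two = ((2, 0), (0, 2))
scaled = to_image_cs((50, 80), scale_by_two, (0, 0))
shifted = to_image_cs((50, 80), scale_by_two, (10, 20))
```

With the scaling matrix from the example, the pixel <50, 80> maps to <100, 160>; a non-zero origin simply shifts the result.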

To refute the possible objection that the tables, although convenient and fast to use, are somewhat complicated and difficult to create we will present a small number of practical examples.

First we state the equations defining the tables for a generic linear memory representation, then specialise with some frequent cases and also present examples outside the scope of linear representations.

Generic linear memory representation:

M(X, Y) = M(0, 0) + X*DeltaX + Y*DeltaY

VPAT [X].XEntry := M(0, 0) + X*DeltaX

VPAT [Y].YEntry := Y*DeltaY

The equations for the tables are always understood for X = 0, ..., Width - 1 and Y = 0, ..., Height - 1.

Note that the tables can be programmed sequentially so that multiplications do not have to be carried out. It suffices to add DeltaX or DeltaY when incrementing the table index. The same simplification applies to most other equations below. However, even if the multiplications were carried out, the overhead would not be high: the tables have order O(Width + Height) as opposed to the image itself, which has order O(Width * Height). It is also important to remember that they need to be programmed just once for an image object.

Note that the way the VPA tables are used, we could have included offset M(0, 0) just as well in the Ytable as in the Xtable.

Example 1

For a practical example, first consider an 8-bit gray scale image with width W and height H. The gray values are written sequentially in memory, with the address incremented from the last pixel of one scan line to the first pixel of the next scan line. A simple 4-byte pointer lpImage points to the first pixel in the first scan line (this is the simplest image format):

IImage.Dimension = 1

IImage.Width = W

IImage.Height = H

IImage.Datatype = 8

(We omit the index for one-dimensional images)

IImageVPA.BaseAddress = lpImage

IImageVPA.VPAT [X].XEntry = X

IImageVPA.VPAT [Y].YEntry = Y * W

Example 2

Next in complexity we consider an 8-bit DIB in the upside down format where the last scan line comes first in memory. There is an added complexity because, for DIBs, the scan lines wrap on Dword boundaries. In this case we have the pixel data represented by a memory handle hImage which needs to be locked in memory prior to addressing:

IImage.Dimension = 1

IImage.Width = W

IImage.Height = H

IImage.Datatype = 8

IImageVPA.BaseAddress = lpImage

IImageVPA.VPAT [X].XEntry = X

IImageVPA.VPAT [Y].YEntry = ((W + 3) and 0xFFFFFFFC)*(H - Y - 1)

The expression »((W + 3) and 0xFFFFFFFC)« simply gives the length of a scan line in memory extended to Dword boundaries.

The factor (H - Y - 1) implements the upside down order of scan lines: For Y = 0 this is (H - 1) i.e. the last scan line in memory and the first in the logical image. For Y = H - 1 (last row in image) it becomes zero, addressing the first scan line in memory.
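Example 2 can be checked with a small Python sketch. The helper name `dib8_tables` is hypothetical, and the pixel data is modelled as a `bytearray` whose index 0 plays the role of lpImage; the table formulas are the ones given above.

```python
def dib8_tables(width, height):
    """VPA tables for a bottom-up 8-bit DIB with Dword-aligned scan lines."""
    stride = (width + 3) & 0xFFFFFFFC          # scan line length in memory
    x_table = list(range(width))
    y_table = [stride * (height - y - 1) for y in range(height)]
    return x_table, y_table


width, height = 5, 2
stride = (width + 3) & 0xFFFFFFFC              # 5 pixels are padded to 8 bytes
memory = bytearray(stride * height)
memory[0:5] = bytes([10, 11, 12, 13, 14])      # bottom row is stored first
memory[8:13] = bytes([0, 1, 2, 3, 4])          # top row is stored last

x_table, y_table = dib8_tables(width, height)
top_pixel = memory[x_table[3] + y_table[0]]    # logical row 0
bottom_pixel = memory[x_table[3] + y_table[1]] # logical row 1
```

Reading through the tables returns the rows in logical top-down order even though the DIB stores them bottom-up with padding.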

Example 3

Here is the image definition for a 24-bit RGB DIB, again upside down, referenced with a simple pointer lpImage to the bitmap data.

Recall that the values in memory are now BGRBGRBGR..., however we want plane 0 to refer to the red plane (otherwise it would be a BGR image):

IImage.Dimension = 3

IImage.Width = W

IImage.Height = H

IImage.Datatype = 8

(Don't let this confuse you: the 24 bits refer to RGB, individual color planes are still 8-bit images)

IImageVPA.BaseAddress = lpImage

IImageVPA.VPAT[X].XEntry = 3*X

IImageVPA.VPAT(0)[Y].YEntry = ((3*W + 3)and 0xFFFFFFFC)*(H - Y - 1) + 2 (The added »2« takes care of the red plane)

IImageVPA.VPAT(1)[Y].YEntry = ((3*W + 3)and 0xFFFFFFFC)*(H - Y - 1) + 1 (Green plane)

IImageVPA.VPAT(2)[Y].YEntry = ((3*W + 3)and 0xFFFFFFFC)*(H - Y - 1) + 0 (Blue plane)

Note that the Xtable does not depend on the color.
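The plane offsets of Example 3 can be illustrated with a short Python sketch. The function name `dib24_plane_tables` is an assumption for this example; it returns one shared X table and one Y table per plane, with the plane selected by the byte offset 2, 1 or 0 inside each BGR triple.

```python
def dib24_plane_tables(width, height):
    """Shared X table plus one Y table per plane (R, G, B) of a bottom-up BGR DIB."""
    stride = (3 * width + 3) & 0xFFFFFFFC
    x_table = [3 * x for x in range(width)]
    y_tables = [
        [stride * (height - y - 1) + offset for y in range(height)]
        for offset in (2, 1, 0)                # red, green, blue
    ]
    return x_table, y_tables


# One scan line with two pixels: (B, G, R) = (1, 2, 3) and (4, 5, 6), padded to 8 bytes.
memory = bytes([1, 2, 3, 4, 5, 6, 0, 0])
x_table, (red_y, green_y, blue_y) = dib24_plane_tables(2, 1)

red_of_pixel_1 = memory[x_table[1] + red_y[0]]
green_of_pixel_0 = memory[x_table[0] + green_y[0]]
blue_of_pixel_1 = memory[x_table[1] + blue_y[0]]
```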

Example 4

Now you may want to regard the RGB image as a single entity rather than as a stack of three gray images. In this case you can change the definition to the following (listing only the properties which change):

IImage.Dimension = 1

IImage.Datatype = 24 (This provides the desired 24-bit BGR pixels)

IImageVPA.VPAT[X].XEntry = 3*X

IImageVPA.VPAT[Y].YEntry = ((3*W + 3) and 0xFFFFFFFC) * (H - Y - 1)(Addressing the BGR scan lines)

Example 5

A single, elegant formulation combines both cases by creating a four-dimensional image where planes 0, 1 and 2 give the R, G and B images, respectively, and plane 3 the combined BGR pixels:

IImage.Dimension = 4

IImage.Width = W

IImage.Height = H

IImage.Datatype(Index) = 8 for Index = 0,1,2 and

IImage.Datatype (3) = 24 (Here the fourth plane actually has a different data type)

IImageVPA.VPAT[X].XEntry = 3*X

IImageVPA.VPAT(0)[Y].YEntry = ((3*W + 3) and 0xFFFFFFFC) * (H - Y - 1) + 2 (The added »2« takes care of the red plane)

IImageVPA.VPAT(1)[Y].YEntry = ((3*W + 3) and 0xFFFFFFFC) * (H - Y - 1) + 1 (Green plane)

IImageVPA.VPAT(2)[Y].YEntry = ((3*W + 3) and 0xFFFFFFFC) * (H - Y - 1) + 0 (Blue plane)

IImageVPA.VPAT(3) = IImageVPA.VPAT (2)

Addressing for the fourth plane is the same as for the blue plane. Only interpretation of the address content is different.

Example 6

Next we consider a complex, double-precision image such as the output of some FFT functions. One memory block (lpReal) contains the real values, the other (lpImaginary) the imaginary ones in a simple scan line format (as the first example). We want to interpret plane 0 as the real part and plane 1 as the imaginary part:

IImage.Dimension = 2

IImage.Width = W

IImage.Height = H

IImage.Datatype = 64 + float + signed (Each plane has double-precision pixel values)

IImageVPA.BaseAddress (0) = lpReal

IImageVPA.BaseAddress (1) = lpImaginary

IImageVPA.VPAT [X].XEntry = X*8

IImageVPA.VPAT [Y].YEntry = Y*W*8

Note that in this case a pointer to one VPA table can be used for both image planes.
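The sharing of one table between two planes can be sketched in Python as follows. The two memory blocks are modelled as separate `bytes` buffers standing in for lpReal and lpImaginary, and the helper `read_complex` is hypothetical; little-endian doubles are assumed for the packing.

```python
import struct


def read_complex(real_buf, imag_buf, x_table, y_table, x, y):
    """Read one complex pixel; both planes use the same offset tables."""
    offset = x_table[x] + y_table[y]
    real = struct.unpack_from("<d", real_buf, offset)[0]
    imag = struct.unpack_from("<d", imag_buf, offset)[0]
    return complex(real, imag)


width, height = 2, 2
real_buf = struct.pack("<4d", 1.0, 2.0, 3.0, 4.0)      # stands in for lpReal
imag_buf = struct.pack("<4d", 10.0, 20.0, 30.0, 40.0)  # stands in for lpImaginary
x_table = [x * 8 for x in range(width)]                # 8 bytes per double
y_table = [y * width * 8 for y in range(height)]

value = read_complex(real_buf, imag_buf, x_table, y_table, 1, 1)
```

Only the base address differs between the planes, so the offsets are computed once and reused.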

Example 7

In the next example a frame grabber (512 x 512 x 8) has non-square pixels with an aspect ratio of 3:2 and deposits the scan lines in interlaced format, the even field in the first 128 K of the memory block and the odd field in the second one. Part of the objective is to square the pixels by mapping linearly onto a 768 x 512 rectangle (without anti-aliasing).

IDimension = 1

IWidth = 768

IHeight = 512

IDMType (Index) = 0

IDMBaseAddress (Index) = lpImage

VPAT [X].XEntry = (2*X) div 3

This performs the squaring of pixels

VPAT [Y].YEntry = 512*(Y div 2) for even Y and

VPAT [Y].YEntry = 131072 + 512*(Y div 2) for odd Y

Subsequent to programming of the tables, the complexities of the interlaced case are hidden to any processing software.

Also this is a situation where the geometry of an image is actually modified using the tables - virtually squaring the pixels. Of course the resulting image still has a fault resulting from aliasing (in every row every other pixel is duplicated).

Nevertheless, if an algorithm is not too sensitive to high local frequencies it can be ported to this type of image without modification.
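The tables of Example 7 can be generated directly from the formulas above. The following Python sketch uses hypothetical constant names and offsets relative to lpImage; it only builds the tables and does not touch any pixel data.

```python
SENSOR_WIDTH = 512
FIELD_SIZE = 256 * SENSOR_WIDTH      # 131072 bytes: one field of 256 lines

# Map 768 logical columns onto 512 physical columns (squaring the pixels).
x_table = [(2 * x) // 3 for x in range(768)]

# Even lines come from the first field, odd lines from the second one.
y_table = [
    SENSOR_WIDTH * (y // 2) + (FIELD_SIZE if y % 2 else 0)
    for y in range(512)
]


def offset(x, y):
    """Offset of logical pixel (x, y) relative to lpImage."""
    return x_table[x] + y_table[y]
```

The first X entries are 0, 0, 1, 2, 2, 3, which shows the duplication of every other source pixel mentioned above.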

Note that in all of the above examples, the image pixels are accessed in a unified way once the tables have been programmed. If we forget about the complex floating point and BGR images for the moment, we can actually state that any processing routine which works on one image plane of the above images will also work on all the other image planes of all the other images above.

Example 8

In the next example we suppose an image A has already been defined in the VPA syntax above, and describe the definition of another image B, of prescribed width wb and height hb, mapping a subrectangle [Left, Top, Right, Bottom] of image A onto [0, 0, wb - 1, hb - 1].

IDimensionB = IDimensionA

IWidthB = wb

IHeightB = hb

IDMTypeB (Index) = IDMTypeA (Index)

IDMBaseAddressB (Index) = IDMBaseAddressA (Index)

This implies that the memory for image A must not be freed while image B is in use. See reference counting below.

VPATB [X].XEntry := VPATA [Left + (X * (Right - Left)) /(wb - 1)].XEntry

VPATB [Y].YEntry := VPATA [Top + (Y * (Bottom - Top)) /(hb - 1)].YEntry

The tables implement the affine map required (this method of scaling again does not provide any antialiasing). In a similar way, images can be virtually rotated by 90° or reflected, always with a number of computations in the order of O(Width + Height) rather than O(Width * Height).
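A small Python sketch of the sub-rectangle mapping of Example 8 follows. The function name `map_subrectangle` is hypothetical, the division in the table formulas is assumed to be integer division, and wb and hb are assumed to be greater than 1 so that the divisors are non-zero.

```python
def map_subrectangle(x_table_a, y_table_a, left, top, right, bottom, wb, hb):
    """Derive the tables of image B from those of image A (wb, hb > 1)."""
    x_table_b = [x_table_a[left + (x * (right - left)) // (wb - 1)]
                 for x in range(wb)]
    y_table_b = [y_table_a[top + (y * (bottom - top)) // (hb - 1)]
                 for y in range(hb)]
    return x_table_b, y_table_b


# Image A: 8 x 8, linear layout with DeltaX = 1 and DeltaY = 8.
x_table_a = list(range(8))
y_table_a = [8 * y for y in range(8)]

# Same size as the sub-rectangle: a plain crop.
crop_x, crop_y = map_subrectangle(x_table_a, y_table_a, 2, 1, 5, 4, 4, 4)
# Wider target: columns are stretched by repeating entries.
zoom_x, _ = map_subrectangle(x_table_a, y_table_a, 2, 1, 5, 4, 7, 4)
```

Image B shares the pixel memory of image A; only the two short tables are newly created.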

Finally we describe a problem which occurs when filtering an image with a convolution kernel, and its solution using VPA. In this case the output is first defined for possible translation positions of the kernel within the input image. Assuming a 5 x 5 kernel and a 512 x 512 input image, the output image would be 508 x 508. This is implemented in the present version of MINOS, resulting in harsh criticism by users who wish the output image to have the same dimensions regardless of the arbitrary definition of the boundary pixels.

The additional pixels cannot be left completely uninitialized. It has been proposed to copy the values of adjacent, well defined pixels to the fringe pixels after the filtering. Our suggestion is to extend the input image at the fringes before filtering to create a 516 x 516 image which is then filtered in a straightforward way.

Extending the image is very simple with the tables. Suppose we want to extend the image by DFringe on each of the four sides. This is done by

VPAT [X].XEntry := VPAT [0].XEntry, for -DFringe <= X < 0,

VPAT [X].XEntry := VPAT [Width - 1].XEntry, for Width - 1 < X <= Width + DFringe - 1,

VPAT [Y].YEntry := VPAT [0].YEntry, for -DFringe <= Y < 0,

VPAT [Y].YEntry := VPAT [Height - 1].YEntry, for Height - 1 < Y <= Height + DFringe - 1.

This requires an overhead of 4*DFringe operations when the tables are programmed and saves 2*DFringe*Width + 2*DFringe*Height operations when the image is filtered.

The additional memory requirement is 16*DFringe bytes. If we regard, say, 9 x 9 as the largest kernel size we wish to implement without any special processing (DFringe = 4), this additional memory requirement is 64 bytes, which is so small that we propose to make such an extension the standard for images.
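The fringe extension can be sketched in Python as follows. Because Python lists do not support the negative table indices used in the formulas, this illustration stores the extended table with a shift of DFringe; the names `extend_table` and `entry` are hypothetical.

```python
def extend_table(table, d_fringe):
    """Repeat the first and last entry d_fringe times on either side."""
    return [table[0]] * d_fringe + list(table) + [table[-1]] * d_fringe


def entry(extended, d_fringe, index):
    """Look up a logical index in the range -d_fringe .. len + d_fringe - 1."""
    return extended[index + d_fringe]


x_table = [0, 1, 2, 3]
x_extended = extend_table(x_table, 2)

# A 512-entry table extended for a 5 x 5 kernel (DFringe = 2).
extended_512 = extend_table(list(range(512)), 2)
```

Fringe indices resolve to the nearest valid pixel, so a filter can run over the extended index range without special handling at the borders.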

It is important to understand that the Image Object is considerably more than simply image data. It should be considered as a

The images have width and height, and the third dimension is depth. This third dimension can be used for colour images, stereo images, image sequences and many other image processing techniques. The data type for each plane can be set separately and can contain data with up to 250 bits per plane, signed, unsigned, fixed or floating point.

This section contains very detailed information about the Image Object. It is important to understand that the Common Vision Blox concept allows users full control over very powerful objects without the need for this detailed information. This information is provided primarily for tool developers who require more in-depth knowledge.

Image data can be accessed via a base address and a pixel offset. This offset information is stored in a virtual pixel access table (VPAT) which contains the offsets for a line, y, and for a pixel, x.

Every plane in an image object contains a separate VPAT and base address. Using this powerful approach to data access, zoomed, expanded, rotated and geometrically shifted images can be created very quickly without the need to copy or manipulate data, simply by rewriting the VPAT. In addition, the base library provides some optimised functions for accessing image data. These can be used by users who do not need background information on the structure of the image object. The Image Object is a Microsoft compatible COM object (further information on COM objects can be found in "Inside OLE, Second Edition" by Kraig Brockschmidt, published by Microsoft Press).

The important aspect of the Image Object is that it is NOT a Microsoft COM object but a Microsoft COMPATIBLE COM object. This means that no Microsoft header files or libraries and no Microsoft compilers are needed to generate an image object. The only condition is that the compiler must be able to process object-oriented code.

The COM standard allows an object to be accessed via interfaces. Once defined, interface functions are not allowed to be changed, however new interfaces permitting access to the actual object data are allowed to be created. The Common Vision Blox Image Object has implemented a number of interfaces including the IImageVPA interface.

Images acquired by an image acquisition device such as a frame grabber are a special case within this concept: an image acquisition device driver has other interfaces in addition to the IImageVPA interface. As an absolute minimum, a driver must implement the IGrabber interface. This interface provides the Grab, Snap and ShowDialog functions with which images can be acquired from a camera; they are the minimum device functionality. Other interfaces can be attached to the image object to support special hardware features such as display, trigger or digital I/O. The image object itself is the handle for the IImageVPA interface, and this handle can be used to query whether other interfaces have been implemented and are available. The result of the query is a handle for the queried interface, which can be used to access all the functions in that interface. Provided that the image is not reassigned (in which case the handle would refer to a different image object), the query for the interfaces only has to be performed once when the application starts.

One element of the Image Object is the coordinate system. Image data is accessed through the coordinate system, which consists of the origin and a 2 x 2 transformation matrix. Linear transformations can be implemented using the matrix, which affects all the image data accessed through the coordinate system. The coordinate system can be used for many different functions. For example, if a frame grabber delivers an image with non-square pixels, the aspect ratio can be corrected by means of the transformation matrix.

NOTE: Not every tool supports the coordinate system (CS). For example, CVB Minos and CVB Edge support the CS but CVB Barcode and CVB Manto do not. As a general rule, if a tool requires a region of interest in Area Mode (X0, Y0, X1, Y1, X2, Y2) then it does support the CS; if the region of interest is required in Rectangle Mode (Top, Left, Bottom, Right) then it does not support the CS.

These examples offer two ways in which the driver interface can be used. There are many other possible options; these are offered as examples to stimulate the user's imagination to consider other uses.

This driver model differs from many other driver models in commercially available software in two main respects:

1. No image data is exchanged between the driver layer and user layer, only information about the position and orientation of the image data. This gives a considerable increase in speed compared with interfaces based on ReadLine or ReadRect concepts that copy data internally.

2. The interface can be extended dynamically without giving rise to compatibility problems. Existing interfaces are not allowed to be changed. If an application uses an interface named MyInterface and other interfaces are added to the driver (possibly years later), the application can still work with the driver because MyInterface has not been changed and its address is ascertained at program runtime.
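The runtime interface query described above can be sketched in a few lines of Python. This is a toy model, not the CVB or COM API: the class `ImageObject` and its methods `add_interface` and `query_interface` are hypothetical names chosen for illustration. It shows that a handle obtained once stays valid when further interfaces are added later, and that querying a missing interface simply yields nothing.

```python
class ImageObject:
    """Toy object that exposes named interfaces, looked up at runtime."""

    def __init__(self):
        self._interfaces = {}

    def add_interface(self, name, implementation):
        # Existing entries are never modified, only new ones are added.
        if name in self._interfaces:
            raise ValueError(f"interface {name} is already defined")
        self._interfaces[name] = implementation

    def query_interface(self, name):
        # Returns a handle to the interface, or None if it is not implemented.
        return self._interfaces.get(name)


class Grabber:
    def snap(self):
        return "snapped one image"


img = ImageObject()
img.add_interface("IGrabber", Grabber())

grabber = img.query_interface("IGrabber")   # query once at start-up
img.add_interface("ITrigger", object())     # driver extended later

print(grabber.snap())                       # the old handle still works
print(img.query_interface("IPingPong"))     # None: not implemented
```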

Please see the section 'Proposal for a Common Image Model' for more detailed information on the components of the Image Object.

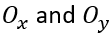

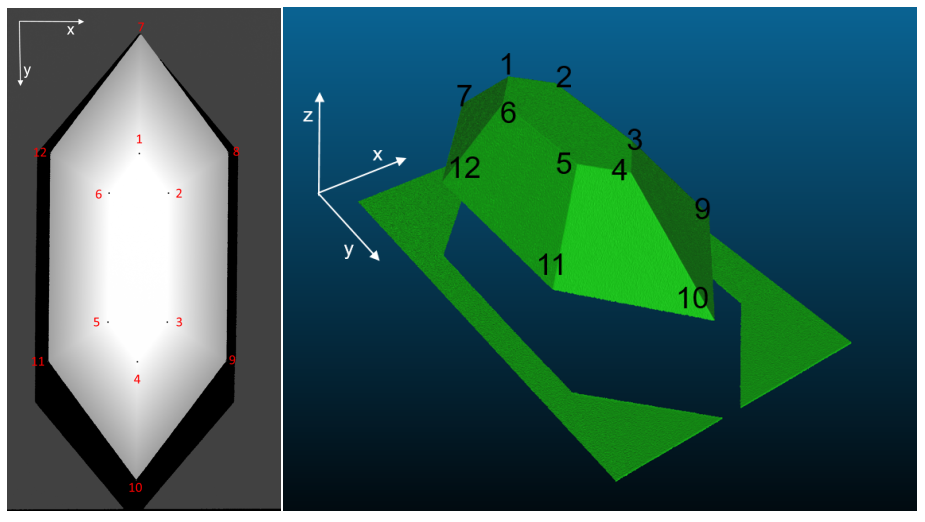

This section discusses two of the most powerful features of Common Vision Blox: the coordinate system and the selection of subareas of an image (defined by P0, P1, and P2).

Normally the coordinate system of an image is fixed by the image itself. The origin is at the top left corner. The x axis extends along the top of the image, and the y axis extends down the left side. The unit of measure is one pixel. Points, rotations, subimages and the sizes of features in an image are all defined with respect to the image coordinate system.

The coordinate system is implicit in the image, it overlays the image and is established according to your instructions. You specify the origin, determine the orientation and define the unit of measure. Thus, you control the structure on which positions, rotations and areas are based.

Dynamically within Common Vision Blox you can specify the virtual origin within an image to which all other points relate, it is also possible to do any or all of the following :

When you specify an area of interest (AOI) in a SubImage, Histogram or other task which uses the area structure, the area is fixed with respect to the coordinate system in effect at the time the task executes. This means that the AOI is translated, rotated or scaled according to changes made dynamically in the coordinate system. Because of this capability and the fact that you can define the CS with respect to a defined position, you can :

Note: The rest of this section discusses these features in more detail. Refer to chapter Areas for further information on defining subareas, their orientation and controlling the search direction within them.

In many applications a flexible coordinate system can be a helpful tool. The coordinate system in Common Vision Blox consists of the origin and a matrix defining the linear transformation of the image. A linear transformation can be described by a 2 x 2 matrix A with the first row (a11, a12) and the second row (a21, a22).

The matrix A acts on any vector according to the equation:

A<x, y> = <x', y'> = <x*a11 + y*a12, x*a21 + y*a22>    (equation I)

Since each pixel in an image can be considered as a vector <x, y>, the matrix A acts on each pixel according to equation I, thus accomplishing linear transformation.

For example, the matrix A with the first row (2, 0) and the second row (0, 2) acts on an image by doubling its height and doubling its width (a scaling factor of two).

So any pixel <x, y> in the image is transformed into the pixel <x', y'> where, by equation I:

<x', y'> = <x*2 + y*0, x*0 + y*2> = <2x, 2y>

Consider the pixel <50, 80>: A<50, 80> = <2*50, 2*80> = <100, 160>. The unit of measure becomes 1/2 pixel rather than 1, thus increasing the virtual size of the image to 200% of its actual size.
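The same arithmetic can be written as a minimal Python sketch. The function name `apply_matrix` and the second matrix, which halves only the y direction as one might do for non-square pixels, are illustrative assumptions and not part of CVB.

```python
def apply_matrix(a, point):
    """Apply the 2 x 2 matrix a = ((a11, a12), (a21, a22)) to point <x, y>."""
    (a11, a12), (a21, a22) = a
    x, y = point
    return (x * a11 + y * a12, x * a21 + y * a22)


scale_by_two = ((2, 0), (0, 2))
print(apply_matrix(scale_by_two, (50, 80)))   # (100, 160)

# Hypothetical aspect ratio correction: leave x unchanged, halve y.
halve_y = ((1, 0), (0, 0.5))
print(apply_matrix(halve_y, (50, 80)))        # (50, 40.0)
```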

It is not essential for an application to support the coordinate system, but it is often a very useful tool. The following equation maps a point P (without CS) to its position P' in the image coordinate system: P' = P * CS + Origin

The coordinate system provides a reference system for the description of areas. Using CVB DLLs you can reset the origin and the CS vector dynamically.

When the first BMP image is loaded, the CS origin is at the top left corner (x = 0, y = 0). The coordinate system vector (CS vector) that describes the scale and rotation of the coordinate system is <1, 0, 0, 1> (given in pixel coordinates). Its equivalent polar form is polar (1,0) in which the modulus (first coordinate) indicates that the image is at a 1:1 scale, and the argument (second coordinate) indicates that the coordinate system is rotated an angle of 0° from the top border of the image.

The flexibility of the CVB coordinate system allows you to move an image into the »right« place (translation), give it the »right« orientation (rotation), and change it to the »right« size (scaling) while you are looking at it.

The CS origin defines the translation by specifying the pixel coordinates of the coordinate system's origin, relative to the top left corner of the image (y-axis increases downward).

The CS vector describes the combined effects of rotation and scaling. Its modulus is the scaling factor and its argument is the angle of rotation.

The vectors are defined as follows:

P0 The origin point of the parallelogram. The task is performed in relation to it

P1 The corner that defines the direction of scan lines in the parallelogram

P2 The corner that defines the search direction in the parallelogram

P0', P1', and P2' are the standard vector variables CurrentP0, CurrentP1, and CurrentP2, and are calculated according to the following equation:

Pn' = Pn * CS + Origin

where CS is the current CS vector and Origin is the current CS origin.

P0, P1, and P2 are defined in relation to the current CS and remain fixed with respect to it. So, as the CS is translated, rotated, or sized so is the subarea. P0', P1', and P2' are defined in relation to the current image, therefore they change according to changes in the CS.
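The relation Pn' = Pn * CS + Origin can be tried out with the following Python sketch. It assumes that the CS is expressed as a 2 x 2 matrix built from a scale (the modulus) and an angle (the argument); the sign convention of the rotation and the names `cs_matrix` and `to_image` are assumptions for illustration only.

```python
import math


def cs_matrix(scale, angle_deg):
    """2 x 2 matrix for a CS with the given modulus and argument."""
    c = math.cos(math.radians(angle_deg))
    s = math.sin(math.radians(angle_deg))
    return ((scale * c, -scale * s), (scale * s, scale * c))


def to_image(point, cs, origin):
    """Pn' = Pn * CS + Origin."""
    (a11, a12), (a21, a22) = cs
    x, y = point
    return (x * a11 + y * a12 + origin[0], x * a21 + y * a22 + origin[1])


# Area defined relative to the CS
area = {"P0": (0, 0), "P1": (10, 0), "P2": (0, 5)}
cs = cs_matrix(scale=2, angle_deg=90)
origin = (100, 50)

current = {name: to_image(p, cs, origin) for name, p in area.items()}
for name, (x, y) in current.items():
    print(name, round(x, 6), round(y, 6))
```

Changing only `cs` or `origin` moves, rotates or scales the whole area while P0, P1 and P2 themselves stay unchanged.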

Through definitions of P0, P1, and P2, you control the orientation of the subimage as well as its size, shape and location.

So for example you can 'Cut Out' a subimage whose sides are not parallel to the sides of the image. When the subimage becomes the current image, P0 is the origin, P1 defines the x axis, and P2 defines the y axis.

If you want to keep a subarea you have selected, but want it rotated (by 90°, for example) when it is passed into the data frame, simply change the settings of P0, P1, and P2 so that they specify different corners of the same rectangle. (Compare this illustration to the previous one.)

In Scan tasks (ScanPlaneUnary, ScanPlaneBinary, ScanImageUnary and ScanImageBinary), these vectors also determine the scan direction and search direction.

So, by controlling the vectors you can change the scan direction. The first scan line is always from P0 to P1, and the scan direction is always from P0 to P2.
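The effect of the three vectors on the scan order can be sketched as follows. This is an illustrative Python generator, not a CVB scan function; the name `scan_positions` and the step counts are assumptions. Each scan line runs in the direction from P0 to P1, and successive lines advance in the direction from P0 to P2.

```python
def scan_positions(p0, p1, p2, steps_x, steps_y):
    """Yield sample points line by line inside the parallelogram."""
    for j in range(steps_y):
        for i in range(steps_x):
            u = i / (steps_x - 1) if steps_x > 1 else 0.0
            v = j / (steps_y - 1) if steps_y > 1 else 0.0
            x = p0[0] + u * (p1[0] - p0[0]) + v * (p2[0] - p0[0])
            y = p0[1] + u * (p1[1] - p0[1]) + v * (p2[1] - p0[1])
            yield (x, y)


# Same rectangle, two different assignments of P0, P1 and P2
normal = list(scan_positions((0, 0), (2, 0), (0, 1), 3, 2))
rotated = list(scan_positions((2, 0), (2, 1), (0, 0), 2, 3))

print(normal[:3])    # first scan line of the normal orientation
print(rotated[:2])   # first scan line of the rotated orientation
```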

Related Topics

Image Control - Property Pages

Image Dll - Coordinate System Functions

The following section describes the use of multithreading in Common Vision Blox.

These notes are targeted primarily at users who have multiple image acquisition devices in a system, or who are working with the Coreco Imaging IC-Async. Registry settings are described and should only be used in the above cases. It is highly advisable not to make any changes to registry settings if only one frame grabber or no IC-Async is installed in the system.

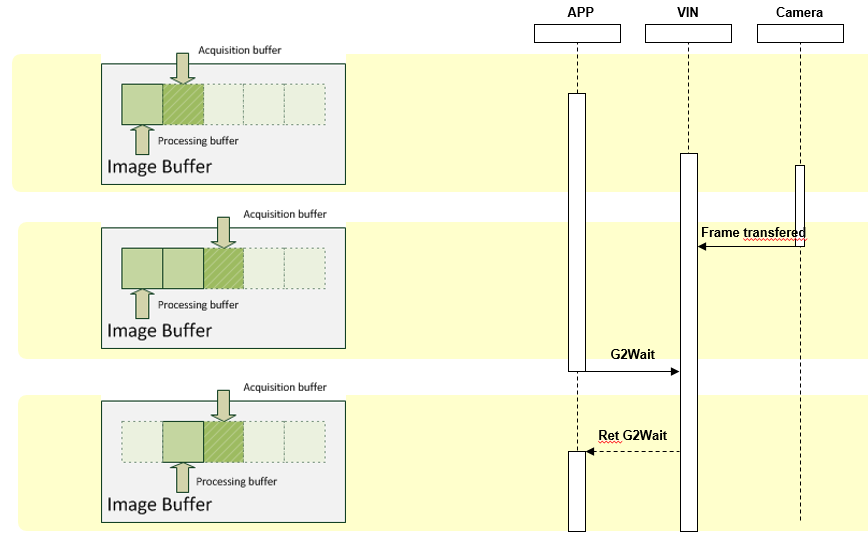

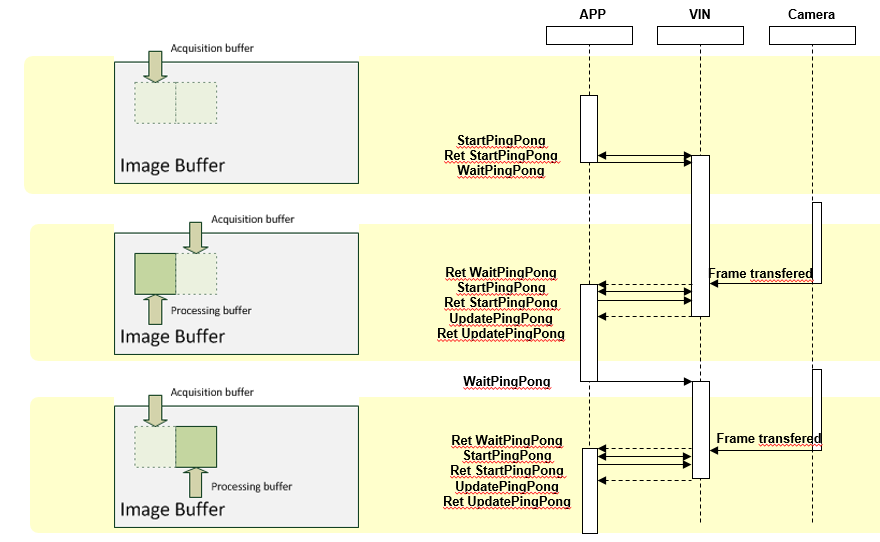

We will be looking at the ping-pong method of acquisition in a separate thread. It appears somewhat complicated on the surface and there are also two different methods, the difference is only slight but difficult to describe. Readers who have already developed multithreading applications will recognize the difference easily. The following figure shows the non-default method:

This case only applies when the driver that is used supports ping-pong and the PingPongEnabled property of the Image Control has been set to TRUE. When the Grab property is set to TRUE for the first time, a separate thread is started. This thread runs in parallel to the application thread and serves exclusively to acquire images with the aid of the ping-pong interface. However, the images generally need to be processed in the application and the two threads must therefore be synchronized in some way. This synchronization is done by means of events.

An event is a connection between occurrences in the Control and the application in which the Control is located. The end points of this connection are called the event source and event sink. When the event has been triggered, the Control branches to this function if a sink function has been specified in the application. Basically, an event can therefore be regarded as a callback function.

Now we come to the difference between the two methods. In the first method the ImageSnaped event is executed directly from the acquisition thread of the Control, and is not passed to the application's message queue for further processing. For the application, this means that everything that is done in the event potentially involves unreliable thread handling. A classic example of this is a list of threads which is processed in the ImageSnaped event. At the same time the program provides buttons with which new threads can be inserted in the list or existing ones deleted from it. If no synchronization takes place, users can delete a thread from the list while this element is being accessed in the event. Inexperienced users fall into an unpleasant trap if they are not aware of this situation. For this reason we have implemented a simple form of synchronization with the user interface in the Common Vision Display and Image Controls.

The event is triggered by the client's message queue. This ensures that no user inputs are possible while the event is executing. This is the second, default method.

Switching between the two modes is implemented by a flag in the registry. Under HKEY_LOCAL_MACHINE\SOFTWARE\Common Vision Blox\Image Manager there is a DWORD value named GlobalAsyncACQ Enabled. A value other than 0 disables synchronization via the message queue. A value of 0 (default) enables it. The default value should not be changed for normal applications. If, however, multiple image acquisition device instances and thus multiple Image Controls are in use, the value should be changed to ensure that the application handles threads reliably.

A simple test illustrates the difference. Take two Image Controls and load two drivers. Each Control is assigned a driver instance. A Sleep(1000) is called in the ImageSnaped event of one Control. If the Grab property of both Controls is set to TRUE, the second display acquires new images at frame rate whereas the first display only acquires a new image every second. This requires GlobalAsyncACQ Enabled to have been set to 1, so that the two displays run in parallel.

If GlobalAsyncACQ Enabled has been set to 0, the two displays run at just one image per second because the slower thread delays the other one (there is only one message queue).

Experienced developers will have noticed a striking infringement of the Microsoft Windows Design Rules in the case of asynchronous acquisition. According to Microsoft, the user interface (UI) is not allowed to be accessed from multiple threads at the same time. This is understandable because the output device only has one logical pixel x, y which can only assume one state. Some kind of synchronization between the threads is therefore needed.

This puts Common Vision Blox in a tricky situation because users can extend the acquisition thread by means of the ImageSnaped event. For instance, users can call the AddDisplayLabel method, which draws a label over the image as an overlay, in the ImageSnaped event. In this case, changes are made to the UI from the thread. Common Vision Blox could provide safeguards against all dangerous calls, but this would lead to a drop in performance. The Image and Display Controls therefore open up their internal synchronization objects, giving users the opportunity to ensure that their calls handle threads reliably. Everything that is under the direct control of Common Vision Blox (e.g. interactive zooming, scroll bars etc.) is safeguarded internally.

All external UI functionality has the potential for unreliable thread handling and has to be made reliable by means of synchronization objects. At this point we must stress that this tricky situation does not originate solely with Common Vision Blox UI calls but affects all outputs with Windows UI functions in all programs. If, for example, MFC is used to display text in a text box from a second thread, the program will crash. Visual C++ users, however, must draw upon SDK functions to enable UI outputs from a separate thread.

Two methods are available in the Controls for synchronization:

Lock () : ... locks all internal outputs of all instances of the Image or Display Control

Unlock () : ... unlocks the above

If labels are to be added to an image in the ImageSnaped event, the CVDisplay.AddLabel(...) call has to be inserted in a Lock-Unlock block. Of course, this is only necessary if GlobalAsyncACQ Enabled has been set to a value other than zero which, in turn, only makes sense if multiple independent acquisition threads have to run (e.g. when multiple image acquisition devices are going to be used simultaneously).
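The Lock-Unlock pattern can be illustrated with a generic Python sketch. It does not call any CVB Control: `display_lock` stands in for the Lock() and Unlock() methods, the `labels` list stands in for the overlay state of the display, and `image_snapped` is a hypothetical event handler. Two acquisition threads add labels concurrently, and each modification happens inside a locked block.

```python
import threading

display_lock = threading.Lock()   # stands in for Lock() / Unlock()
labels = []                       # shared state, e.g. overlay labels


def image_snapped(frame_number):
    """Event handler running in the acquisition thread."""
    with display_lock:            # Lock() ... Unlock() block
        labels.append(f"frame {frame_number}")


def acquisition_thread(count):
    for n in range(count):
        image_snapped(n)


threads = [threading.Thread(target=acquisition_thread, args=(1000,))
           for _ in range(2)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(len(labels))  # 2000
```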

The acquisition thread thus branches to the application using one of the two methods described above and remains there until the event function is exited. The reason for this is that we want to evaluate images. There is no point running a thread that constantly acquires new images which cannot be processed, thereby consuming valuable computation time. In the figure above, the course of the acquisition thread is marked in red.

Attentive readers will have noticed a disadvantage of the implementation here. The application is not told in any way whether all images were processed or how many images were not processed. The application may need to acquire various images quickly one after another for processing later. The solution to this lies not just in creating a memory area for the image but also in managing the images in a circular buffer of adjustable size. If the circular buffer is big enough, the application described above can be implemented. Such a procedure can be implemented with the functions that are currently available in Common Vision Blox (see Sequence tool for example).
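A circular buffer that also reports how many images were lost can be sketched as follows. This is a generic Python illustration and not the CVB Sequence tool or the IRingBuffer interface; the class name `FrameRingBuffer` and its members are assumptions.

```python
from collections import deque


class FrameRingBuffer:
    """Fixed-size circular buffer that counts overwritten frames."""

    def __init__(self, size):
        self._frames = deque(maxlen=size)
        self.dropped = 0

    def push(self, frame):
        if len(self._frames) == self._frames.maxlen:
            self.dropped += 1          # oldest frame is about to be overwritten
        self._frames.append(frame)

    def pop(self):
        """Return the oldest unprocessed frame, or None if empty."""
        return self._frames.popleft() if self._frames else None


buf = FrameRingBuffer(size=3)
for n in range(5):                     # acquire 5 frames without processing
    buf.push(n)

print(buf.pop(), buf.dropped)          # 2 2
```

With a buffer of size 5 or more, the same loop would lose no frames and `dropped` would stay at 0.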

Related Topics

GetGlobalAsyncACQEnabled and SetGlobalAsyncACQEnabled functions from Utilities Dll

Lock and Unlock method of the Display Control

Common Vision Blox supports a number of standard and non-standard file formats. Non-standard file formats are often required to store extended information. For instance, Windows graphics file formats do not offer the ability to store Common Vision Blox coordinate system information; for this reason a proprietary CVB format exists.

The hardware independent architecture of CVB requires a Video INterface file (VIN) to be loaded when an image acquisition device like a frame grabber or camera is used. The VIN format is proprietary to CVB and supports a wide variety of interfaces for ping pong acquisition, linescan acquisition, triggered acquisition and many more.

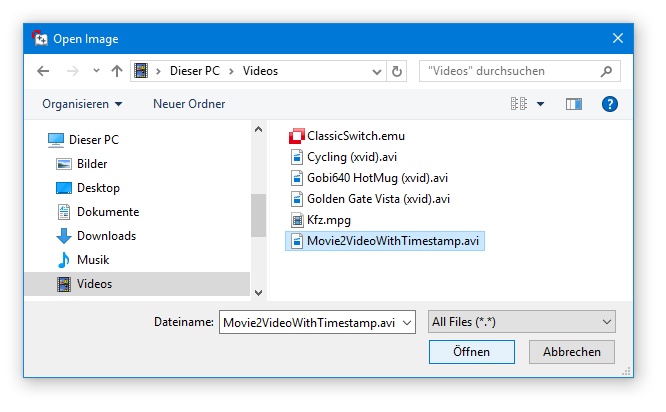

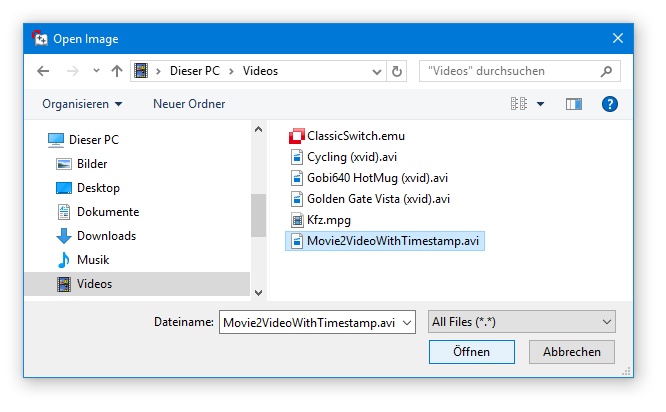

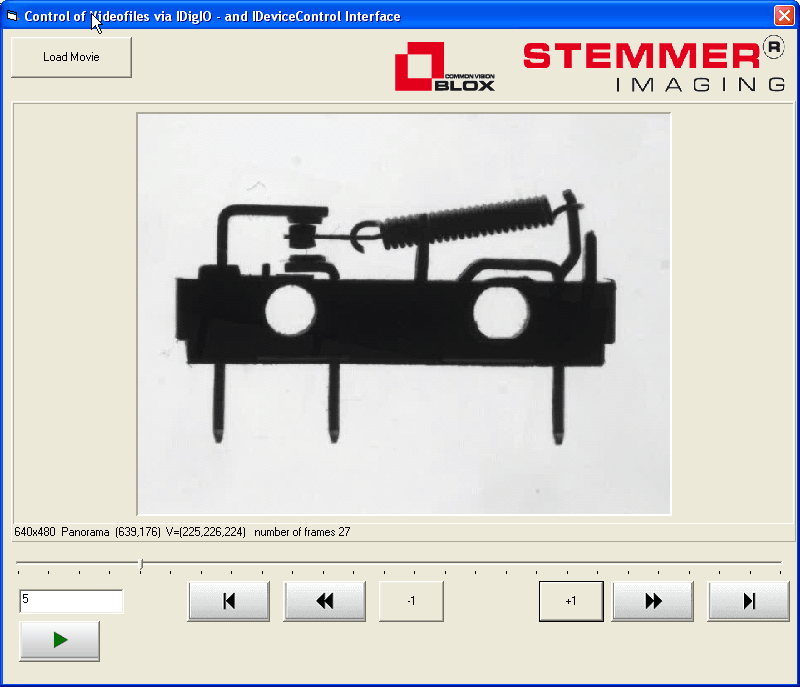

Video files such as AVI or MPG can be handled as an image acquisition device, meaning they expose at least the IGrabber interface, which is used to acquire the video file frame by frame. This functionality is also available for a list of images that is defined in the so-called EMUlator file format. A list of any graphic files can be defined in a simple text file (extension *.EMU). Once the EMU file is loaded as an image, it exposes the IGrabber interface.

Graphics File Formats

Video files

VIN Format (image acquisition devices)

MIO Format

EMU Format

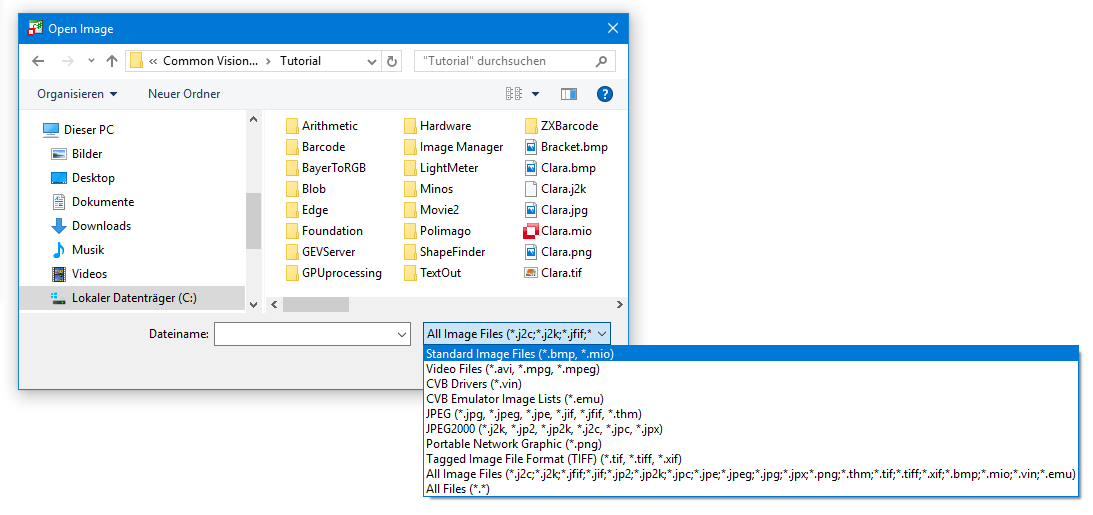

Common Vision Blox supports several standard and non-standard image file formats, using different libraries for loading and saving different image formats. Below is a list of the supported formats along with some additional information:

The Windows Bitmap format is perhaps the most important format on the PC platform. CVB supports BMP files with either 8 bits/Pixel (monochrome) or 24 bits/Pixel (colour). RLE-compressed BMP can neither be loaded nor saved with CVB. BMP file format support for CVB is native to the CVCImg.dll, i. e. no additional libraries are used for that format.

The MIO file format (MIO = Minos Image Object) is an uncompressed format proprietary to CVB and may only be loaded and saved using CVB-based software (e. g. the VCSizeableDisplay.exe sample application).

The MIO format supports any kind of image representation that CVB supports, i.e. you may have an arbitrary number of planes per image, any bit-depth supported by CVB, even floating point pixels. Additionally the MIO format contains the coordinate system associated with the image object, plus the overlay information of your image (if the image has been created as an overlay image). MIO support is again native to the CVCImg.dll.

The TIFF-format is a very widespread format on several computer platforms. However, the TIFF standard itself is quite complex and offers a huge variety of pixel formats, additional tags, planes etc. Therefore almost all software packages support only a certain subset of the official TIFF standard - and CVB is no exception to this. TIFF formats supported by CVB are from 8 to 16 bits/Pixel for one (monochrome) or 3 channels (colour). Reading is supported for uncompressed images, LZW77 compressed images and Packbits compressed images. Huffman-coding and Fax-coding are not supported as they would result in images with less than 8 bpp. TIFF support in CVB is achieved through the libtiff library, version 3.5.7, Copyright (c) 1988-1997 Sam Leffler, Copyright (c) 1991-1997 Silicon Graphics, Inc.

The source of libtiff is available from www.libtiff.org. For the complete libtiff copyright notice see below.

The JPEG format is perhaps the most popular and most widespread image format with lossy compression. JPEG support in CVB comprises 8 bpp images (monochrome) and 24 bpp images (colour) with JPEG compression. There is an inherent limitation to images of 65536 pixels width and height in JPEG formats. To adjust the compression level use the functions SaveLossyImage (method of the Image Control) or WriteLossyImageFile (function from the CVCImg.dll).

Valid quality values range from 0.1 to 1.0. The default compression factor is 0.7. JPEG support in CVB is fully based on the libjpg library version 6 provided by the Independent Jpeg Group, Copyright (C) 1991-1998, Thomas G. Lane, www.ijg.org.

JPEG2000 (*.j2k, *.jp2, *.jp2k, *.jpc, *.jpx)

The comparatively young JPEG2000 format is, much like its ancestor JPEG, a lossy image compression format; it is based on wavelet compression technology. It is recommended to use this image format only on fairly up-to-date computers, because JPEG2000 compression and decompression are extremely time consuming. The benefit of using JPEG2000 is a comparatively good image quality even at higher compression ratios. Like JPEG, JPEG2000 supports a quality parameter that may be set using the functions SaveLossyImage (method of the Image Control) or WriteLossyImageFile (function from the CVCImg.dll).

Valid quality values range from 0.002 to 1.0. The default compression factor is 0.075. Under CVB, JPEG2000 supports image formats with 8, 10, 12 or 16 bpp (monochrome) as well as 3x8, 3x10, 3x12, 3x16 bpp (colour). JPEG2000 support in CVB is based on the free j2000 codec available from https://jpeg.org/jpeg2000/.

The following file formats are supported in CVB through the commercially available AccuSoft ImageGear library. They are available in CVB for the sake of completeness and flexibility; however, we strongly recommend that you use the image formats listed above, which cover most of the possible applications and offer a reasonably wide range of portability. All of the formats below support 8 bpp and 24 bpp images only, the only exception being WMF, which only supports 24 bpp. For IFF and TGA both the RLE-compressed and the uncompressed variant are supported. Please note that the GIF support has been removed.

For more information and examples see chapter More Basics - Image file handling (load and save).

The Minos Image Object (MIO) file format is proprietary to CVB. This format saves the image data as well as the coordinate system and origin information. This extended information could be used to store calibrated spatial information, coordinate information used for correlation or simply information about a processed image.

The MIO format allows images with more than 8 bits of image data, called High Dynamic Range (HDR) images, to be stored.

This CVB AVI driver enables access to DirectShow compatible video files via the grabber interface of Common Vision Blox. Among the supported file types are AVI and MPEG formats, but effectively the selection of compatible file formats depends on the codecs installed on your system. As a general rule of thumb, most video formats that can be replayed using Microsoft Media Player can also be used with this driver.

For more details regarding the AVI driver see the chapter How to deal with video files.

The Video INterface (VIN) files are used in Common Vision Blox to access different frame grabbers, cameras and other real or virtual devices. They are at their core DLLs that contain the adaption of the hardware abstraction layer of Common Vision Blox for a given data source and are commonly referred to as "drivers" or "vin drivers" in Common Vision Blox. For details refer to the chapter Image acquisition device drivers.

An EMU file is a simple text file defining a number of images to be loaded. Using the IGrabber interface the application can 'acquire' from the image list. Of course all images must have the same size and the same number of planes. For details refer to the chapter How to use the virtual driver (EMUlator).

libtiff Copyright notice:

Copyright (c) 1988-1997 Sam Leffler Copyright (c) 1991-1997 Silicon Graphics, Inc. Permission to use, copy, modify, distribute, and sell this software and its documentation for any purpose is hereby granted without fee, provided that (i) the above copyright notices and this permission notice appear in all copies of the software and related documentation, and (ii) the names of Sam Leffler and Silicon Graphics may not be used in any advertising or publicity relating to the software without the specific, prior written permission of Sam Leffler and Silicon Graphics. THE SOFTWARE IS PROVIDED "AS-IS" AND WITHOUT WARRANTY OF ANY KIND, EXPRESS, IMPLIED OR OTHERWISE, INCLUDING WITHOUT LIMITATION, ANY WARRANTY OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. IN NO EVENT SHALL SAM LEFFLER OR SILICON GRAPHICS BE LIABLE FOR ANY SPECIAL, INCIDENTAL, INDIRECT OR CONSEQUENTIAL DAMAGES OF ANY KIND, OR ANY DAMAGES WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER OR NOT ADVISED OF THE POSSIBILITY OF DAMAGE, AND ON ANY THEORY OF LIABILITY, ARISING OUT OF OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS SOFTWARE.

Common Vision Blox can handle high dynamic range images with more than 8 bits per pixel of image data. Here is a short overview of the possibilities, with some hints and restrictions.

Acquiring HDR images

CVB is able to work with combinations of frame grabbers and cameras which provide images with a pixel data type of 10, 12 or 16 bit.

Display of HDR images

Whenever a high dynamic range image is assigned to a CV Display control, the output will show undesired results for gray values greater than 255 (white in 8 bit). This is because only the 8 least significant bits of each gray value are displayed; the remaining bits are ignored. The solution is to scale the display range down to 8 bit, i.e. 256 gray values. To do so, obtain an 8-bit copy of the image with the Image Library function MapTo8bit. The original HDR image remains available in the Image control.
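The difference between showing only the low 8 bits and scaling the full range down to 8 bits can be seen in a short Python sketch. It does not reproduce the implementation of MapTo8bit, which is not described here; the helper names `low_byte` and `scale_to_8bit` and the simple bit shift are assumptions for illustration.

```python
def low_byte(value):
    """What a display that only uses the 8 least significant bits shows."""
    return value & 0xFF


def scale_to_8bit(value, source_bits):
    """Map a value with source_bits of range onto 0..255 by discarding
    the least significant bits instead of the most significant ones."""
    return value >> (source_bits - 8)


pixels_12bit = [0, 255, 256, 2048, 4095]
print([low_byte(p) for p in pixels_12bit])           # [0, 255, 0, 0, 255]
print([scale_to_8bit(p, 12) for p in pixels_12bit])  # [0, 15, 16, 128, 255]
```

In the first output, a mid-gray value of 2048 appears black, which is the kind of undesired result described above; in the second, the gray value order is preserved.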

Processing of HDR images

As shown in this list, the following Common Vision Blox Tools support High Dynamic Range images:

As a basic function, the HDR image data can be accessed with the Image Library function GetImageVPA. The retrieved pointer can be used to process the raw data directly.

Processing of high bit data is limited: some functions and Tools support it, others do not. Please refer to the corresponding manual sections for details.

Loading and saving HDR images

In Common Vision Blox it is possible to load and save high bit images in the MIO-format, in TIFF-format and in JPEG2000 format.

Related Topics

Supported File formats

MapTo8bit

CreateGenericImageDT

ImageDataType

The available methods and functions for loading and saving image files differ in the amount of user interaction. The most direct way is to pass the file name as a function argument and process the file directly. Alternatively, other functions ask the user for the file name in a file selection dialog and then process the file.

Methods and functions for loading images

Image Control - LoadImage method

Image Control - LoadImageByDialog method

Image Control - LoadImageByUserDialog method

Image Library - LoadImageFile function

Methods and functions for saving images

Image Control - SaveImage method

Image Control - SaveImageByDialog method

Image Control - SaveImageByUserDialog method

Image Control - SaveLossyImage method

Display Control - SaveImage method

Display Control - SaveImageByDialog method

Image Library - WriteImageFile function

Image Library - WriteLossyImageFile function

Loading or saving

All supported file formats are available through the methods and functions for image file handling. CVB determines the format type automatically from the file name extension. This works even in file selection dialogs with no corresponding file filters. How to load an image via the dialog is shown here:

Choose Image Files and you will get a list of all files in supported file formats such as TIF, JPG, PCX or MIO. Then choose the desired file.

To save an image in a supported file format, enter the desired file name and the appropriate extension. The file will be stored automatically in this file format.
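Selecting a format from the file name extension can be pictured with the following Python sketch. It is not how CVB implements the detection: the lookup table `FORMAT_BY_EXTENSION` and the function `format_for` are hypothetical, and the table only lists a few of the extensions named in this document.

```python
import os

# Hypothetical lookup table, limited to formats named in the text.
FORMAT_BY_EXTENSION = {
    ".bmp": "BMP", ".mio": "MIO", ".tif": "TIFF", ".tiff": "TIFF",
    ".jpg": "JPEG", ".jpeg": "JPEG", ".jp2": "JPEG2000", ".j2k": "JPEG2000",
}


def format_for(file_name):
    """Return the format implied by the extension, ignoring case."""
    extension = os.path.splitext(file_name)[1].lower()
    try:
        return FORMAT_BY_EXTENSION[extension]
    except KeyError:
        raise ValueError(f"unsupported file extension: {extension!r}")


print(format_for("part_0042.TIF"))   # TIFF
print(format_for("result.mio"))      # MIO
```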

Related Topics

Image Control

Supported File formats

The Video INterface (VIN) files are used in Common Vision Blox to access different frame grabbers, cameras and other real or virtual devices. At their core they are DLLs that contain the adaption of the hardware abstraction layer of Common Vision Blox for a given data source, and they are commonly referred to as "drivers" or "vin drivers" in Common Vision Blox. These drivers are installed to the %CVB%Drivers directory.

The set of CVB hardware drivers that is available for a given release can be found on the CVB user forum. Please visit the CVB website frequently to obtain the currently available driver setups for supported cameras and frame grabbers. Video interface drivers use a COM-like interface concept for their implementation and are therefore not tied to specific versions of Common Vision Blox. In other words: it is (within certain boundaries) no problem to use a video interface driver that is significantly older or newer than the version of Common Vision Blox you are using.

The majority of available vin drivers has been developed by STEMMER IMAGING. A Driver Development Kit (DDK) for implementing vin drivers is available - interested hardware manufacturers are encouraged to contact STEMMER IMAGING for details.

CVB interfaces

All CVB interfaces listed here can be found in the Image Manager libraries CVCDriver.dll and CVCImg.dll.

Common CVB interfaces

What software interfaces do images/drivers support?

Most of these interfaces are implemented by the drivers. Details are listed in each specific CVB driver documentation (%CVB%Drivers directory).

| Interface in CVCDriver.dll | Description |

|---|---|

| IBasicDigIO | Controlling IOs |

| IBoardSelect/IBoardSelect2 | Switching between different devices |

| ICameraSelect/ICameraSelect2 | Switching between different devices |

| IDeviceControl | Controlling hardware settings |

| IGrab2/IGrabber | Image acquisition |

| IImageRect | Image handling and acquisition (grab and snap) |

| IImageVPA | Image Access |

| INodeMapHandle/INodeMapHandle2 | Control of GenICam compliant devices |

| INotify | Register callback functions for events |

| IPingPong | Image acquisition |

| IRingBuffer | Recording to ram buffers |

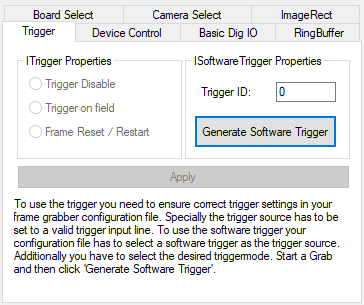

| ISoftwareTrigger | Sending a software trigger to a device |

| ITrigger | Reacting on external triggers |

For details on using these interfaces refer to the CVCDriver.dll and CVCImg.dll descriptions. Tutorials with examples how to use these interfaces to access devices and process images can be found in

Specific CVB interfaces

| Interface in CVCDriver.dll | Description |
|---|---|
| ILineScan | Special features for controlling line scan cameras |
| IPort | Only AVT FireWire driver |
| IPropertyChange | Dalsa Genie driver, AVT driver |
| IRegPort | GenICam driver only |

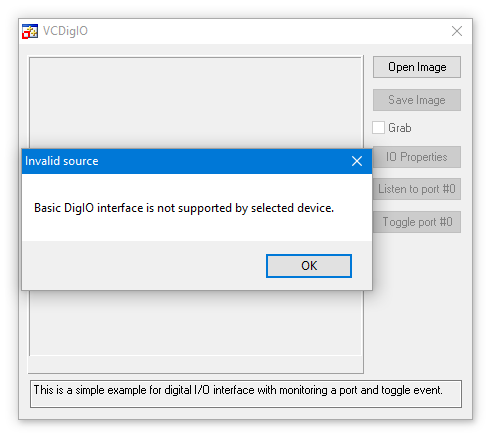

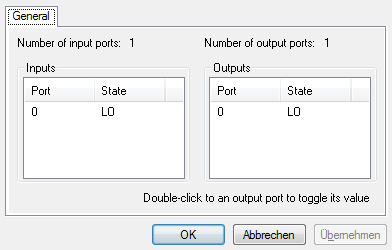

Register bits for I/Os can be controlled via the CV Digital IO-Control or by using the IBasicDigIO functions of the CVCDriver.dll. These functions check the available input and output ports, their states and groups of ports. It can also be verified whether the image supports the IBasicDigIO interface.

For testing purposes, please use the CVB Image Manager Tutorial VC Digital IO Example (in %CVB%Tutorial directory).

If the IBasicDigIO interface is not supported by the driver in use, this is indicated while loading the driver:

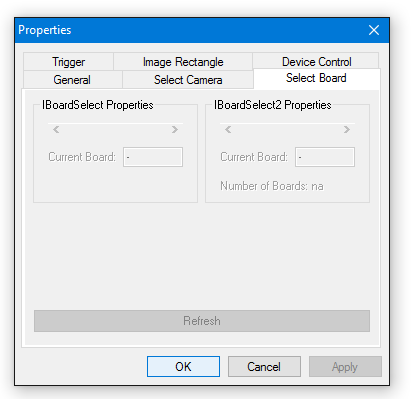

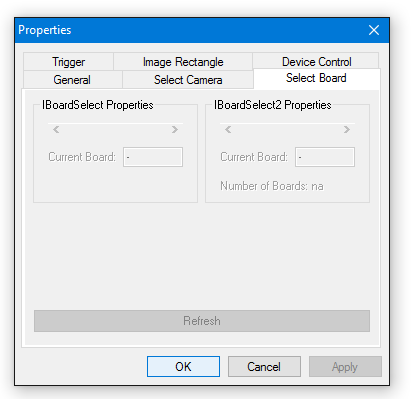

The IBoardSelect2 interface allows switching between different connected image acquisition devices (frame grabbers or cameras). CVB handles device switching via the IBoardSelect2 functions of the CVB Driver Library or the Board property of the CV Grabber Control.

For testing purposes, please use the CVB Image Manager Tutorials (in %CVB%Tutorial directory):

VC Sizeable Display (button Show Grabber properties) to access IBoardSelect and IBoardSelect2 properties and methods,

or VC Pure DLL/VC Driver example (menu Edit item Image Properties).

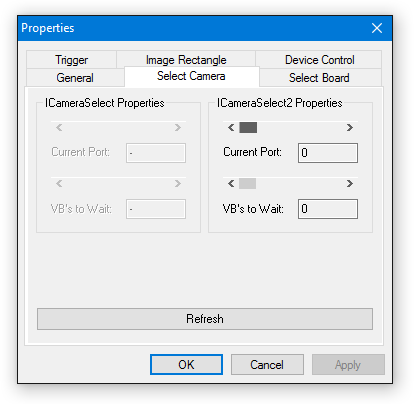

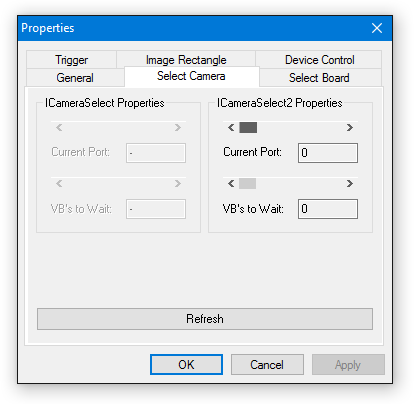

ICameraSelect2 Interface: switching between different devices

The ICameraSelect2 interface allows switching between selected camera ports or image acquisition devices. In CVB, switching between devices is done via the ICameraSelect2 functions of the CVB Driver Library using CS2Get... and CS2Set..., or with the CamPort property of the CV Grabber ActiveX Control.

Access ICameraSelect and ICameraSelect2 properties and methods:

A platform independent C++ code example can be found in the MultiOSConsole example (Camera.cpp) of the Image Manager

Windows: %CVB%Tutorial\Image Manager\VC\VCMultiOSConsole

Linux: /opt/cvb/tutorial/ImageManager/ComplexMultiOSConsoleExample

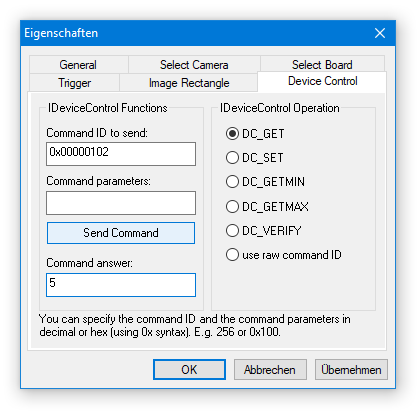

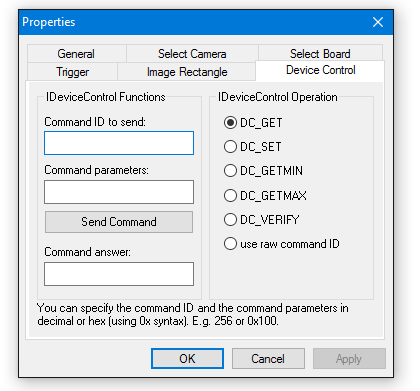

IDeviceControl Interface: controlling hardware settings

The IDeviceControl interface offers the possibility to set or change hardware (i.e. camera) parameters directly through CVB.

The IDeviceControl interface can be accessed through the DCStrCommand and DCBinaryCommand functions of the CVB Driver Library or through the SendStringCommand and SendBinaryCommand methods of the CV Grabber ActiveX Control.

The complete list of available command values can be found in the driver-specific iDC_*.h header file. Always refer to the CVB Driver User Guide for your image acquisition device to check the list of supported interfaces. The header file contains all IDeviceControl definitions for the vin driver for use with the DCStrCommand and DCBinaryCommand functions of the Image Manager as well as the SendBinaryCommand and SendStringCommand methods of the grabber OCX.

Frequently used command strings are listed here:

DC_BUFFER_INFO_TIMESTAMP

This is the time stamp received from the acquisition hardware saved to the acquired buffer in the memory.

DC_BUFFER_INFO_IMAGEID

This is the image id received from the acquisition hardware saved to the acquired buffer in the memory.

DC_BUFFER_INFO_NUM_PACKETS_MISSING

This is the number of missing packets from the image transfer of a GigE Vision camera related to the acquired image of the currently used buffer.

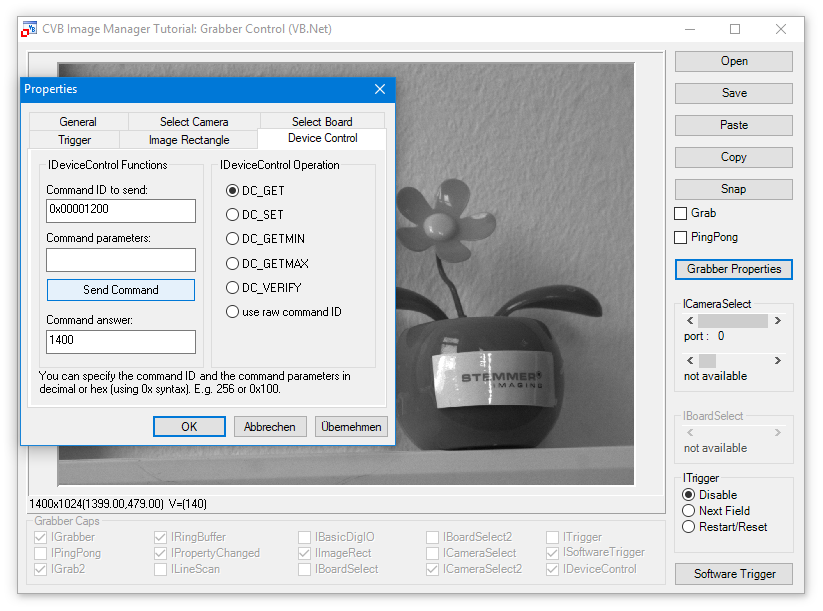

Command IDs can be tested with the CVB Image Manager Tutorials VC Sizeable Display example, VBGrabber.NET or one of the following code examples.

For the GenICam vin driver, use iDC_GenICam.h in %CVB%Lib\C.

Example: sending the command DC_BUFFER_INFO_DELIVERED_WIDTH = 0x00001200 returns the command answer 1400, which is the delivered image width of the connected GenICam device.

VC++ Example Code: retrieve ImageID (from the VC++ GenICam Simple Demo)

VC++ Example Code: retrieve lost packets from last frame

The DCBinaryCommand function sends the binary command directly to the CVB driver.

It has to be called twice when working with an output buffer: the first call is made with iInBufSize set to NULL, and the second call passes the resulting iOutBufSize as iInBufSize.
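The two-call idea can be illustrated with a generic "query the size, then fetch the data" sketch. The function `mockBinaryCommand` below is a hypothetical stand-in written for this example only. Its parameter list is deliberately simpler than that of DCBinaryCommand and does not reproduce the CVB signature, and the payload value is made up.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical stand-in for a driver command; NOT the CVB signature.
// With outBuf == nullptr it only reports the required size in outSize.
// Returns 0 on success and -1 if the supplied buffer is too small.
inline int mockBinaryCommand(std::uint32_t command, void* outBuf,
                             std::size_t& outSize)
{
    (void)command;                       // the mock ignores the command id
    const std::uint64_t answer = 1400;   // made-up payload for illustration
    if (outBuf == nullptr) {
        outSize = sizeof(answer);
        return 0;
    }
    if (outSize < sizeof(answer))
        return -1;
    std::memcpy(outBuf, &answer, sizeof(answer));
    outSize = sizeof(answer);
    return 0;
}

// Calls the command twice: once for the size, once for the payload.
// Returns an empty vector if either call fails.
inline std::vector<unsigned char> queryCommand(std::uint32_t command)
{
    std::size_t size = 0;
    if (mockBinaryCommand(command, nullptr, size) != 0)   // call 1: size only
        return {};
    assert(size > 0);  // the mock always reports a non-empty payload
    std::vector<unsigned char> buffer(size);
    if (mockBinaryCommand(command, buffer.data(), size) != 0)  // call 2: data
        return {};
    buffer.resize(size);
    return buffer;
}
```

With a real driver the same structure applies, but the actual function, its parameters and its return codes have to be taken from the CVB Driver Library reference.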

If you use the CV Grabber Control, the SendStringCommand method is similar to the DCStrCommand function of the Driver DLL.

IDeviceControl Commands

Besides accessing all the different parameters via the GenApi interface, some other commands are supported that provide extra information.

DC_DEVICE_PORT_NODEMAP

Returns the NodeMap handle of the camera XML. This handle is returned when the configuration generates a valid GenApi XML file.

If no valid XML file can be found, a NULL pointer is returned.

A custom XML can be used by defining it in the ini file.

DC_DEVICE_NODEMAP

Returns the NodeMap handle of the DMA/Board XML.

This handle is returned when the configuration generates a valid GenApi XML file.

If no valid XML file can be found, a NULL pointer is returned.

A custom XML can be used by defining it in the ini file.

These commands return special single values:

DC_BUFFER_INFO_TIMESTAMP

This command returns the time stamp of the last acquired frame buffer in µs.

DC_BUFFER_INFO_IMAGEID

The frame ID is the number of the acquired image frame. It starts at 1 and increases in steps of 1.

IGrab2/IGrabber Interface: image acquisition

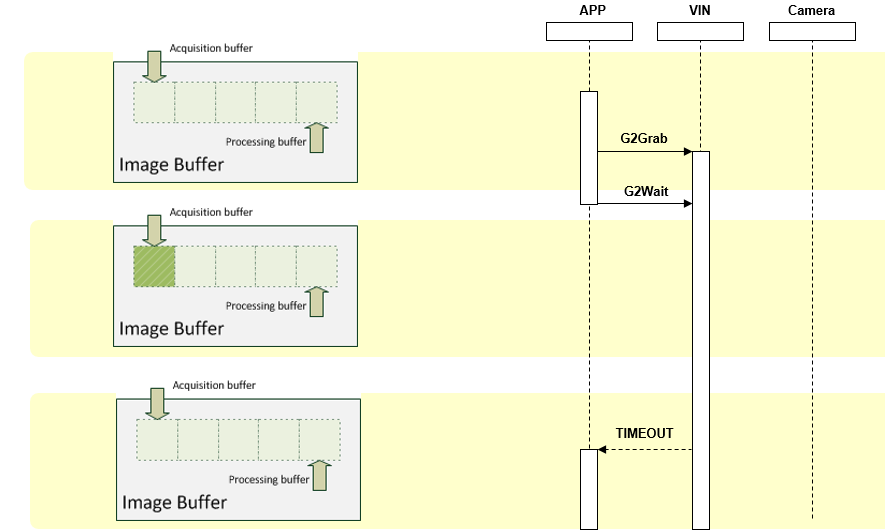

The IGrab2 interface was designed for continuous image acquisition into an image ring buffer and for protecting certain image buffers against overwriting. It belongs to the CVB Driver Library and provides functions for starting and stopping the acquisition, waiting for an image, etc. All functions of the IGrab2 interface are also used by the CV Image Control if you start an acquisition by setting its Grab property to TRUE.
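The idea of a ring buffer whose slots can be protected against overwriting can be pictured with a small model. This is only a conceptual sketch: the class `LockableRing` and its methods are hypothetical and say nothing about how the driver manages its buffers internally.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Conceptual model of a ring of image buffers with per-buffer locks.
class LockableRing {
public:
    explicit LockableRing(std::size_t count) : locked_(count, false), next_(0)
    {
        assert(count > 0);
    }

    // Returns the index of the next buffer that may be overwritten,
    // skipping locked buffers, or -1 if every buffer is locked.
    int acquireWriteSlot()
    {
        for (std::size_t tried = 0; tried < locked_.size(); ++tried) {
            const std::size_t candidate = next_;
            next_ = (next_ + 1) % locked_.size();
            if (!locked_[candidate])
                return static_cast<int>(candidate);
        }
        return -1;
    }

    // Protects a buffer so that acquisition will not overwrite it.
    void lock(std::size_t index)
    {
        assert(index < locked_.size());
        locked_[index] = true;
    }

    // Releases a buffer for reuse by the acquisition.
    void unlock(std::size_t index)
    {
        assert(index < locked_.size());
        locked_[index] = false;
    }

private:
    std::vector<bool> locked_;
    std::size_t next_;
};
```

In this model a locked buffer is simply skipped, so an application that never unlocks its buffers eventually leaves the acquisition without a free slot.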

The function G2GetGrabStatus with its options offers information about the image acquisition which can be used for monitoring and analysis.

Option examples:

G2INFO_NumPacketsReceived

This is related to GigE and indicates the number of data packets received on the GigE Vision streaming channel.

G2INFO_NumResends

This is related to GigE and indicates the number of resend requests issued by the host. If this number increases, it indicates that something is wrong with the data transmission. Possible reasons include the settings of the network card, CPU load on the host, cable/switch problems, or bandwidth problems on the link.

Many more options are available.
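Because a rising resend count is the warning sign, it can help to look at the change between two readings rather than at the absolute numbers. The following sketch assumes the application has already read both counters, for example via G2GetGrabStatus. How the values are read is not shown, and the names `GigEStats` and `ResendMonitor` are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// One reading of the two statistics named above (hypothetical container).
struct GigEStats {
    std::uint64_t packetsReceived;
    std::uint64_t resends;
};

// Tracks the change between consecutive readings.
class ResendMonitor {
public:
    explicit ResendMonitor(GigEStats first) : last_(first) {}

    // Returns the resend requests per received packet since the previous
    // reading, or 0.0 if no packets arrived in between.
    double update(GigEStats now)
    {
        assert(now.packetsReceived >= last_.packetsReceived);
        assert(now.resends >= last_.resends);
        const std::uint64_t packets =
            now.packetsReceived - last_.packetsReceived;
        const std::uint64_t resends = now.resends - last_.resends;
        last_ = now;
        if (packets == 0)
            return 0.0;
        return static_cast<double>(resends) / static_cast<double>(packets);
    }

private:
    GigEStats last_;
};
```

A ratio that stays at zero suggests a healthy link, while a ratio that keeps growing points to one of the causes listed above. Any threshold for raising an alarm is application-specific.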

A platform independent C++ code example is the MultiOSConsole example (Camera.cpp) of the Image Manager

Windows: %CVB%Tutorial\Image Manager\VC\VCMultiOSConsole

Linux: /opt/cvb/tutorial/ImageManager/ComplexMultiOSConsoleExample

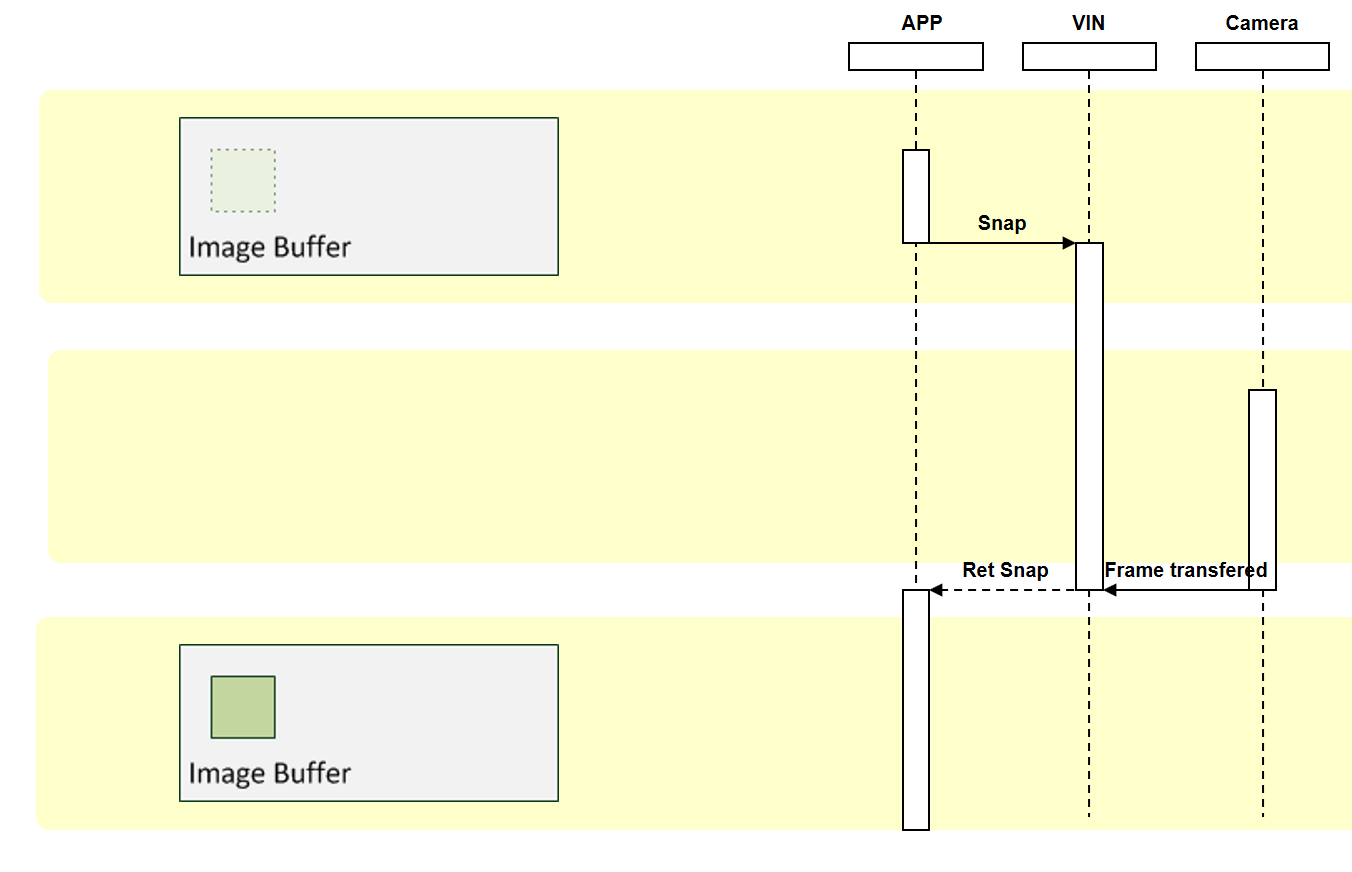

The IGrabber interface is used for single image acquisition functions (snap).

Option examples:

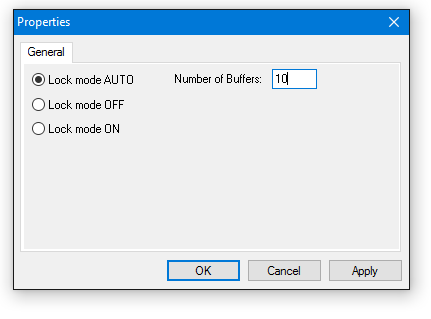

IPingPong description - image acquisition

The IPingPong interface implements an asynchronous image acquisition with two buffers. All functions of this interface are also used by the CV Image Control if you start an acquisition by setting its Grab property to TRUE. In CVB, this can be implemented via the PingPong functions of the CVB Driver Library or the Grab property and Snap method of the CV Image Control.

A description of these interfaces can be found in the Common Vision Blox Manual. For testing purposes, please use the CVB Image Manager Tutorials VB.Net Ping Pong example or the VCPureDLL/VCDriverMDI example (menu Edit-PingPong Grab) or any other CVB Tutorial program.

Modern image acquisition devices can support more than 2 buffers using the IGrab2 interface.
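The two-buffer scheme behind IPingPong can be pictured as one buffer being filled while the other one is available for processing, with the roles swapped after each frame. The sketch below is a single-threaded conceptual model. The class `PingPongBuffers` is hypothetical and is not part of the CVB Driver Library.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Conceptual model of two alternating acquisition buffers.
class PingPongBuffers {
public:
    explicit PingPongBuffers(std::size_t bytes) : writeIndex_(0)
    {
        assert(bytes > 0);
        for (std::vector<unsigned char>& buffer : buffers_)
            buffer.assign(bytes, 0);
    }

    // Buffer currently being filled by the (simulated) acquisition.
    std::vector<unsigned char>& writeBuffer() { return buffers_[writeIndex_]; }

    // Buffer holding the previously completed frame.
    const std::vector<unsigned char>& readBuffer() const
    {
        return buffers_[1 - writeIndex_];
    }

    // Called when a frame is complete: the two buffers exchange roles.
    void swap() { writeIndex_ = 1 - writeIndex_; }

private:
    std::vector<unsigned char> buffers_[2];
    std::size_t writeIndex_;
};
```

With only two buffers the processing of one frame has to finish before the next swap, which is why a ring with more buffers gives the application more headroom.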


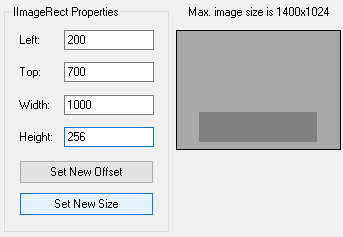

IImageRect Interface description: image resolution changes

Some cameras or other devices allow the user to read out a reduced-size image or parts of an image. This interface can be used to change the resolution of the image or to inform the driver about resolution changes made previously in the hardware.

Changing Resolution over IImageRect

Cameras that allow reading out a reduced size or parts of the image can be configured through the IImageRect interface. The settings can be adjusted with the

Inform the driver about changed resolution or pixel format

With GenICam compliant cameras, settings such as resolution, pixel format and, if supported, binning or partial scan can be changed directly in the camera. This can be done either with a control tool or via the node map interface. If one of these settings is changed, the image object has to be updated.

You can restart the application or inform the driver about the changed resolution or pixel size. This is necessary because the underlying image object holds preinitialised buffers into which the image stream from the camera is written. When the size of the image changes, the underlying image object needs to be informed and the buffers need to be reinitialised.

To do that you need to:

C# Example Code:

For testing, please use the Common Vision Blox Viewer or CVB Image Manager Tutorials VCPureDLL/VCDriverMDI example or VB Grabber OCX.

The IImageVPA interface provides rapid access to image pixels in cases where the image is represented in memory in some VPA-compatible format. In addition to the usual QueryInterface, AddRef and Release, IImageVPA contains the following functions:

ImageDimension

Returns the dimension of the image object, i.e. the number of image planes. The image planes are accessed by an index and all indexed functions below are defined for

0 <= Index <= (ImageDimension - 1).

ImageWidth/Height

Logical width and height of the image. This is how wide and high the image should appear to the processing software. All planes of the image have this logical width and height which needn't match their actual width and height in memory - SourceWidth and SourceHeight below.

Virtual image dimensions can be used to control aspect ratios of non-square pixel frame grabbers or for pyramids.

ImageDatatype(Index)

Returns the data type of a pixel value in the image plane given by Index. The format for the encoding of data types should include the bit count (8-bit is probably the most frequent) and flags to specify signed or unsigned numbers or possibly floating point numbers where there is any ambiguity (e.g. for 32 bits). Our proposal is to use a Dword to identify the data type where the lowest byte defines the bit count and bits 8 and 9 stand for signed/unsigned and float/integer, respectively. Higher bits are free to implement user-specific flags (i.e. ColorBGR, as may be desired for one of the examples presented).
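The proposed Dword layout can be sketched with a few helper functions. The text only states which bits carry which information, so the polarity used here (bit set means signed, bit set means float) is an assumption, and all names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

const std::uint32_t kBitCountMask = 0xFFu;     // lowest byte: bit count
const std::uint32_t kSignedFlag   = 1u << 8;   // bit 8: assumed set = signed
const std::uint32_t kFloatFlag    = 1u << 9;   // bit 9: assumed set = float

// Packs bit count and flags into one 32-bit data type descriptor.
inline std::uint32_t makeDatatype(unsigned bits, bool signedValues,
                                  bool floatValues)
{
    assert(bits > 0 && bits <= kBitCountMask);
    return bits | (signedValues ? kSignedFlag : 0u)
                | (floatValues ? kFloatFlag : 0u);
}

// Extracts the bit count from the lowest byte.
inline unsigned bitCount(std::uint32_t datatype)
{
    return datatype & kBitCountMask;
}

// Tests the signed/unsigned flag (bit 8).
inline bool isSigned(std::uint32_t datatype)
{
    return (datatype & kSignedFlag) != 0;
}

// Tests the float/integer flag (bit 9).
inline bool isFloat(std::uint32_t datatype)
{
    return (datatype & kFloatFlag) != 0;
}
```

Under these assumptions an unsigned 8-bit plane is described by the value 8, and the bits above bit 9 remain free for user-specific flags.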

ImageVPA (Index)

Returns the address of the VPA table for the Index-th image plane and the base address of the image plane. The VPA table is an array of simple 8-byte structures containing the two Dword fields XEntry and YEntry. Details on the size of this array and its precise definition follow in the complete definition of the interfaces.

GetImageCoords/SetImageCoords

Returns and sets the current coordinate system. The coordinate system is defined by the origin and a 2x2 matrix which represents scaling and rotation.
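A coordinate system given by an origin and a 2x2 matrix can be applied to a point as sketched below. The direction of the mapping (from coordinate-system units to pixel positions) and all type and function names are assumptions made for this illustration.

```cpp
#include <cassert>
#include <cmath>

struct Point { double x, y; };
struct Matrix2x2 { double a11, a12, a21, a22; };

// Origin plus a matrix that combines scaling and rotation.
struct CoordinateSystem {
    Point origin;
    Matrix2x2 matrix;
};

// Builds a matrix for a rotation by angleRad combined with uniform scaling.
inline Matrix2x2 rotationScale(double angleRad, double scale)
{
    assert(scale != 0.0);
    const double c = std::cos(angleRad) * scale;
    const double s = std::sin(angleRad) * scale;
    return {c, -s, s, c};
}

// Assumed mapping: pixel = origin + matrix * point.
inline Point toPixel(const CoordinateSystem& cs, Point p)
{
    return {cs.origin.x + cs.matrix.a11 * p.x + cs.matrix.a12 * p.y,
            cs.origin.y + cs.matrix.a21 * p.x + cs.matrix.a22 * p.y};
}
```

With the identity matrix the mapping reduces to a shift by the origin, which makes the two components of the coordinate system easy to check separately.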

These functions are implemented in a semantically correct way if the scalar value of the pixel (X, Y) in the Index-th image plane can be addressed using the following equation:

Address := BaseAddress(Index) + VPAT(Index)[X].XEntry + VPAT(Index)[Y].YEntry.

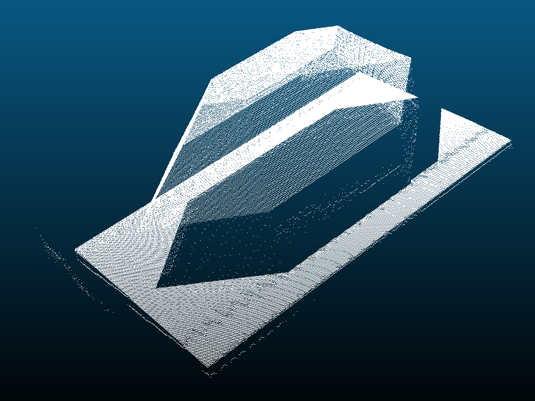

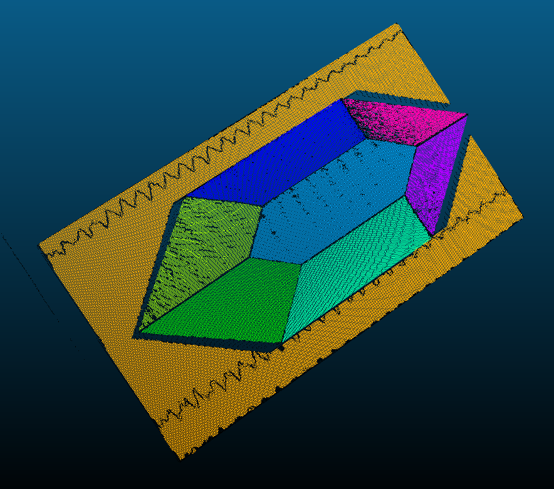

The following figure shows the mechanism of pixel addressing with VPA without using the coordinate system:

The following figure shows the mechanism of pixel addressing with VPA using the coordinate system:

Suppose that for some processing routine the index of the image plane in question has been fixed, and that the two pixel coordinates (X, Y) reside in the general registers (ecx, edx) and the value of VPAT in ebx. Then the assembler statements

mov eax, BaseAddress ; load base address

add eax,[ebx+8*ecx] ; add XTable(X) - scaled addressing

add eax,[ebx+8*edx+4] ; add YTable(Y) - scaled addressing

compute the pixel address. This involves a minimum of 5 processor cycles (no cache miss on BaseAddress and the tables). Three cycles are to be added if the VPAT and the coordinates have to be moved to memory first.
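The addressing rule and the assembler sequence above translate into C++ roughly as follows. The sketch assumes the 8-byte table entry with two 32-bit fields described in the text. The helper names `makeLinearVPAT` and `pixelAddress` are hypothetical, and the table is built here for a plain linear image purely for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One VPA table entry: byte offsets for a column (X) and a row (Y).
struct VPAEntry {
    std::int32_t XEntry;
    std::int32_t YEntry;
};

// Builds the table for a linear image layout. Setting mirrorX flips the
// image horizontally by changing the table only, not the pixel data.
inline std::vector<VPAEntry> makeLinearVPAT(int width, int height,
                                            int bytesPerPixel, int pitch,
                                            bool mirrorX)
{
    assert(width > 0 && height > 0 && bytesPerPixel > 0);
    assert(pitch >= width * bytesPerPixel);
    std::vector<VPAEntry> table(
        static_cast<std::size_t>(std::max(width, height)));
    for (int x = 0; x < width; ++x)
        table[static_cast<std::size_t>(x)].XEntry =
            (mirrorX ? width - 1 - x : x) * bytesPerPixel;
    for (int y = 0; y < height; ++y)
        table[static_cast<std::size_t>(y)].YEntry = y * pitch;
    return table;
}

// Address := BaseAddress + VPAT[X].XEntry + VPAT[Y].YEntry
inline unsigned char* pixelAddress(unsigned char* base,
                                   const std::vector<VPAEntry>& vpat,
                                   std::size_t x, std::size_t y)
{
    assert(x < vpat.size() && y < vpat.size());
    return base + vpat[x].XEntry + vpat[y].YEntry;
}
```

The mirrored table shows the main benefit of the indirection: the same pixel memory can be presented in a different geometry without copying any data.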

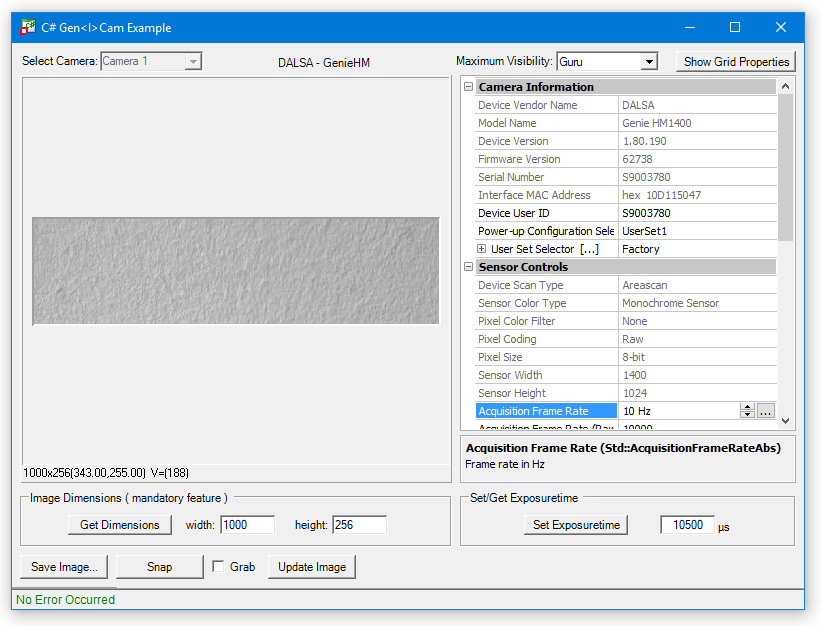

INodeMapHandle Interface: access to the Nodemap

This interface is used to obtain access to the Device NodeMap. INodeMapHandle interface is part of the CVB Driver Library and provides functions to set and retrieve values from the NodeMap for the current device (e.g. a GigE Vision camera).

Sample Code in C++

For testing purposes, please use the CVB GenICam Tutorial: VCSimpleDemo or CSGenICamExample (%CVB%Tutorial\Hardware\GenICam).

INodeMapHandle2 Interface description: access to different Nodemaps

A GenICam transport layer (GenTL) exports a number of separate NodeMaps to access logical parts of the driver. Besides the well-known Device NodeMap, a GenTL Producer provides a NodeMap to configure the DataStream and to get statistical data. Other technologies might have multiple NodeMaps as well. The INodeMapHandle2 interface is part of the CVB Driver Library and allows enumerating the various NodeMaps and accessing them.

NodeMap access can be used inside the GenICam Browser:

INotify Interface description: callback functions for events

The INotify interface allows registering callback functions for events such as disconnect/reconnect or events generated by the device. If such an event carries additional data, the data is passed to the callback function.

VC++ Example Code:

C# Example Code:

Connection Monitoring using the INotify interface

Within our GenICam architecture it is possible to be informed if a device disconnects or reconnects. This is called Connection Monitoring and is supported with GigE Vision.

This feature can be useful if a device temporarily loses its power and the connection needs to be re-established. Connection Monitoring is realized over the INotify interface with its DEVICE_DISCONNECTED and DEVICE_RECONNECT events. For details refer to the INotify interface description.
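The callback-based flow of Connection Monitoring can be modelled in plain C++ as shown below. This is a conceptual sketch only: `EventRegistry`, `ConnectionTracker` and the enum `DeviceEvent` are hypothetical names modelled on the DEVICE_DISCONNECTED and DEVICE_RECONNECT events, and the code does not use or reproduce the INotify functions of the CVB Driver Library.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <utility>
#include <vector>

// Hypothetical counterparts of DEVICE_DISCONNECTED and DEVICE_RECONNECT.
enum class DeviceEvent { Disconnected, Reconnect };

// Minimal registry: stores callbacks per event and invokes them on fire().
class EventRegistry {
public:
    using Callback = std::function<void(DeviceEvent)>;

    void registerCallback(DeviceEvent event, Callback callback)
    {
        assert(callback != nullptr);  // an empty callback cannot be invoked
        callbacks_[event].push_back(std::move(callback));
    }

    // Called by the (simulated) driver when an event occurs.
    void fire(DeviceEvent event) const
    {
        const auto it = callbacks_.find(event);
        if (it == callbacks_.end())
            return;
        for (const Callback& callback : it->second)
            callback(event);
    }

private:
    std::map<DeviceEvent, std::vector<Callback>> callbacks_;
};

// Keeps track of the connection state. The tracker must outlive the
// registry's use of the callbacks because they capture 'this'.
class ConnectionTracker {
public:
    explicit ConnectionTracker(EventRegistry& registry)
    {
        registry.registerCallback(DeviceEvent::Disconnected,
            [this](DeviceEvent) { connected_ = false; });
        registry.registerCallback(DeviceEvent::Reconnect,
            [this](DeviceEvent) { connected_ = true; ++reconnects_; });
    }

    bool connected() const { return connected_; }
    int reconnects() const { return reconnects_; }

private:
    bool connected_ = true;
    int reconnects_ = 0;
};
```

In a real application the reconnect handler is the place to re-establish the acquisition, since the callbacks in this model only record the state change.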

Important Notes:

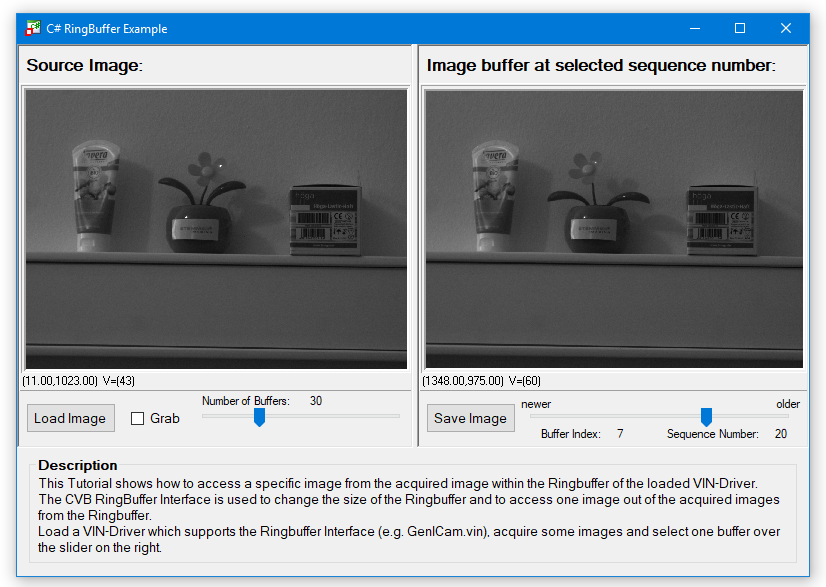

IRingBuffer Interface: image acquisition using RAM buffers