The TeachBench is a comprehensive and modular multi-purpose application that serves as:

Its focus is on:

This document consists of the following topics:

Image material for training and testing may be imported from bitmap files, video files or acquisition devices and processed using the operations offered by TeachBench prior to entering a learning database or testing a classifier.

Example project files and images are stored under:

Following file types are used depending on the project:

| *.clf | Minos Classifier |

|---|---|

| *.mts | Minos Training Set |

| *.pcc | Polimago Classification Predictor |

| *.prc | Polimago Regression Predictor |

| *.psc | Polimago Search Classifier |

| *.ptr | Polimago Test Results |

| *.pts | Polimago Search Training Set |

| *.sil | Manto Sample Image List |

| *.xsil | Polimago extensible Sample Image List |

Throughout this documentation, a few terms are going to be assigned and used with a certain concept in mind that may or may not be immediately apparent when looking at these words. For clarity, these terms are listed and defined here in alphabetical order:

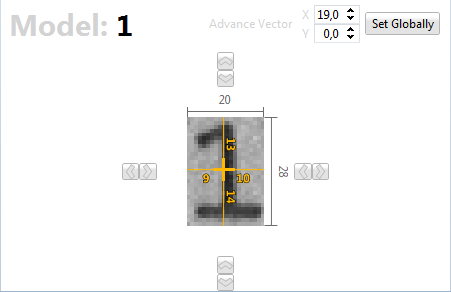

Advance Vector

OCR-capable pattern recognition algorithms may speed up reading by incorporating a-priori knowledge about the expected position of the next character: For a character of a given width w it is usually reasonable to assume that the following character is (if white spaces are absent) located within w ± ε pixels of the current character's position in horizontal direction (at least for Latin writing...). Therefore it sometimes makes sense to equip a trained model with this knowledge by adding to its properties a vector that points to the expected position of the following character. In Common Vision Blox this vector is generally called Advance Vector, sometimes also OCR Vector. Note that the concept of an advance vector is of course not limited to OCR: Any application where the position of a follow-up pattern may be deduced from the type of pattern we are looking at may profit from a properly configured advance vector.

Area of Interest

The area of interest, often abbreviated as "AOI" (sometimes also ROI for "Region of interest") in this document usually means the area inside an image (or the whole image) to which an operation (usually a search operation involving a classifier) is applied. One thing to keep in mind about the concept of the area of interest in Common Vision Blox is that the area of interest is commonly considered to be an outer boundary for the possible positions of a classifier's reference point (and thereby the possible result positions). In other words: An area of interest for searching a pattern of e.g. 256x256 pixels may in fact be just 1x1 pixel in size, and the amount of pixel data that is actually involved in the operation may in fact extend beyond the boundaries of the area of interest (with the outer limits of the image itself of course being the ultimate boundary).

Classifier

A classifier is the result of the learning process and may be used to recognize objects in images. To assess a classifier's recognition performance it should be tested on images are have not been part of the training database from which it has been generated, since the classifier has been taught with these very images and a classifier test on those images would yield over-optimistic results regarding the classifier's classification quality.

Command

in the context of this description means any of the available operations represented in the TeachBench's ribbon menu by a ball-shaped button with an icon.

Density

Some tools and functions in Common Vision Blox apply the concept of a scan density to their operation as a means of reducing the effective amount of pixels that need to be taken into account during processing. The density value in the TeachBench always ranges between 0.1 and 1.0 (note that in the API values between 1 and 1000 are used) and gives the fraction of pixel actually processed. Note that the density is applied likewise to the lateral and the transverse component of the scanned area (e.g. the horizontal and the vertical component of a rectangle), which means that the actual number of pixels processed for a given density d is actually d2 (e.g. with a density value of 0.5 only 0.5*0.5 = 0.25 = 25% of the pixels will be affected by an operation).

Feature Window

is a rectangular shape that defines the area from which a Common Vision Blox tool may extract information for generating a classifier. Feature Windows are usually described by their extent relative to an image location: If the object to which the feature window refers is located at the position *(x, y)*, then the feature window is described by the parameters *(left, top, right, bottom)* which give the amount of pixels relative to *(x, y)* to include in each direction (note that left and top are typically zero or negative).

Learning

is the process of generating a classifier from a database of suitably labeled sample images (see Training). The learning process is usually not an interactive process and the only user/operator input it requires in addition to the sample database is a (module-specific) set of learning parameters.

Module

A module is a DLL that will be loaded at runtime by the TeachBench application and plugs into the user interface. Modules can provide image manipulation operators or project types - they effectively decide over the functionality that is visible and usable inside the TeachBench application. The list of currently loaded modules (along with their version numbers) as accessible through the application's "About" dialog.

Negative Instance

A negative instance is the opposite of a positive instance: A sample in an image that may (at least to some degree) look similar to an object of interest, but is not actually a good sample to train from (e.g. because it belongs to a different class or because parts of the object are missing or not clearly visible). In other words: Negative instances are counter sample to the actual object(s). It should be pointed out that negative instances are (unlike positive instances) not a necessity for pattern recognitions and not all algorithms do make use of negative instances to build their classifier. However, those algorithms that do can use the "knowledge" gained from counter sample to resolve potential ambiguities between objects.

Positive Instance

Positive instances are complete and useful samples of an object of interest in an image, suitable to train a classifier from.

Project

See Sample Database.

Radius

Some of the TeachBench modules use the definition of a radius around a location of interest for various purposes (like negative sample extraction, suppression of false positive samples, sliding inspection region during OCR operations). The definition of the radius always uses the L1 norm, not the Euclidean norm - therefore radius always defines a square (not circular!) region containing (2*r* + 1)2 pixels (where r is the radius value).

Reference Point

The reference point is also sometimes called the anchor point of a model. It is the location in the pattern relative to which the feature window is described (and relative to which some of the TeachBench modules describe a model's features in the classifier). The reference point is not necessarily the center of the object but it generally has has to be inside a model's feature window. For some algorithms the reference point is "just" a descriptive feature that influences where a result position will be anchored; for some algorithms however the choice of the reference point should be given more thought because it may influence the effectiveness of (some of) the algorithm's features (like e.g. the OCR vector in Minos).

Sample Database

The sample database is the set of images generated during the training process (see Training). TeachBench projects are usually the logical representation of that database inside the TeachBench. The database will always be saved in a proprietary format specific to the module with which the project file is being edited. Projects may also be exported to and imported from directories if module-specific restrictions are observed.

Teaching

in the context of the TeachBench means the combination of training (of samples relevant for classifier generation) and learning (of a classifier).

Training Database

See sample database.

Training

denotes the process of gathering images suitable for classifier generation and pointing out the regions inside the image that contain features or objects of interest (i.e. objects the classifier should be able to identify correctly). Effectively this can be thought of as assigning a label with (module-specific) properties to an image region.

Following is a description of those parts of the TeachBench that are always available regardless of the tool modules that have been loaded. This covers the main window and the modular ribbon as well as the Image Pool which can hold any image, video or acquisition device loadable in Common Vision Blox. The Image Pool serves as a work bench on which the available operators may be applied to an image and as the basis from which the classifier training can draw - how exactly that happens depends on the module and is described in the section about the TeachBench projects.

The Start View

When the TeachBench is started it shows the Start View which gives the user a chance to:

Note that these lists are cleaned (unreachable files are removed from them) every time the TeachBench starts. Entries in the lists of recently used files may be removed from the lists via the entries' context menus.

Main View

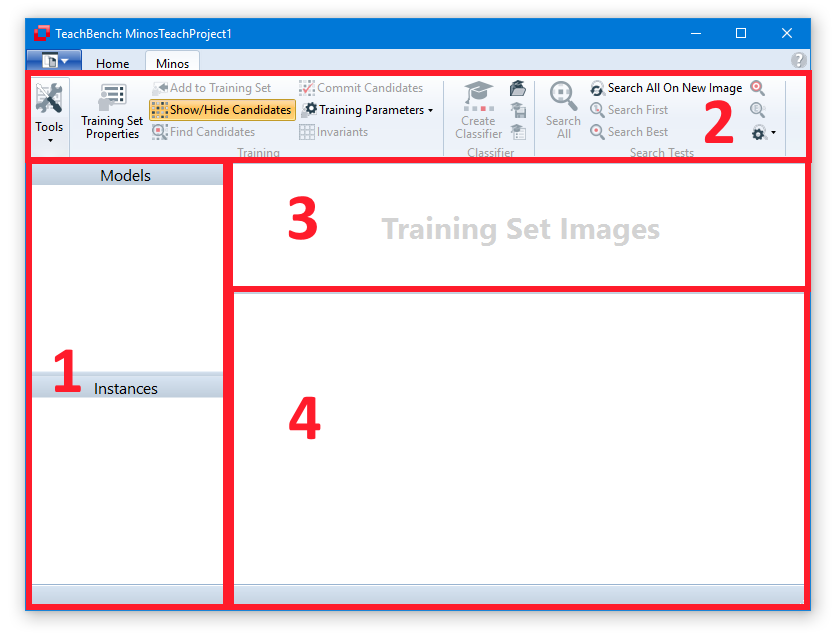

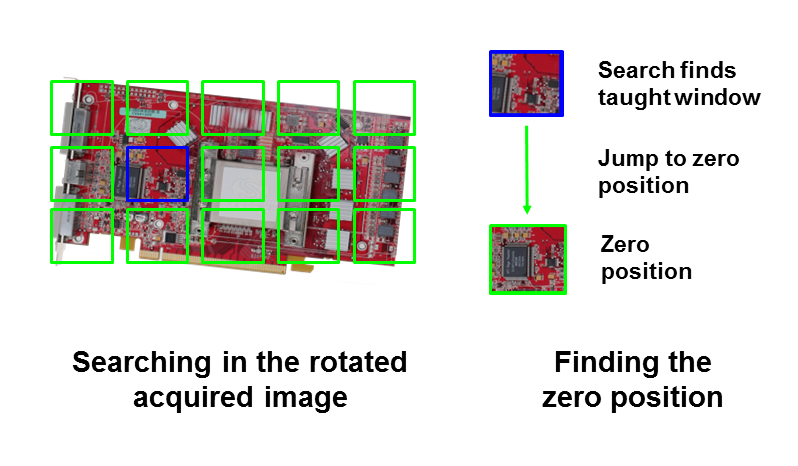

The Main View is composed of five regions, some of which might be hidden at certain times depending on the context:

In addition to these main regions, the TeachBench user interface provides Region 5:

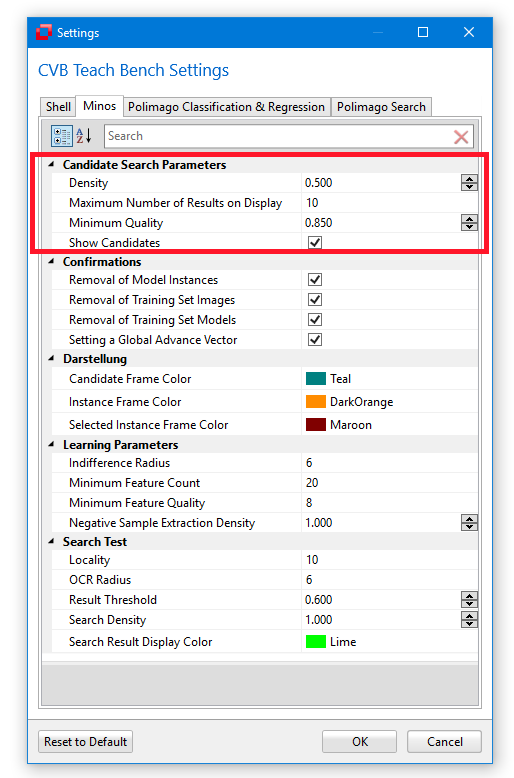

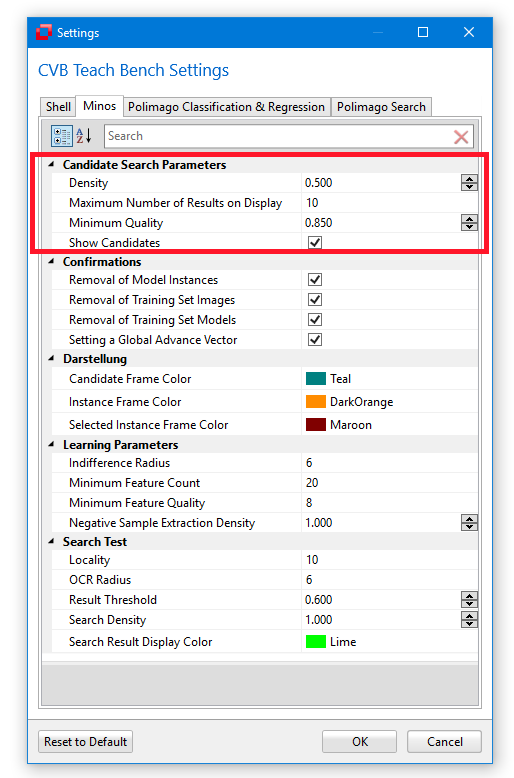

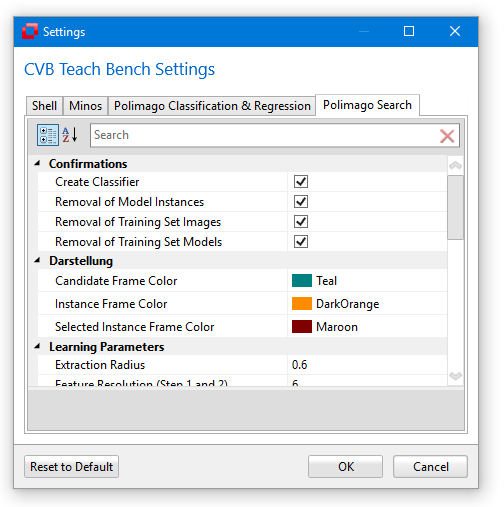

The options can be accessed via the File Menu. Here various general settings are displayed and can be changed. Every teaching tool has its own tab.

|

|

Values that are set here will be consistent over an application restart. Some properties might be changed via a dialog from other parts of the TeachBench and can be accessed via the Options later on.

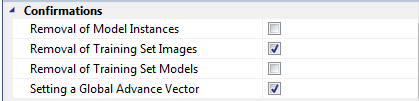

Confirmation Settings

At some point the TeachBench might ask you for setting an option permanently. For instance, if you remove a model when working in a Minos project you will be displayed a warning.

|

|

You can check the box to not display this warning again.

These settings can be reverted from the Options Window. In this case, the option for displaying the warning when removing a model from the Training Set can be found under the tab Minos, section Confirmation. For details of the Minos options see the Options section of the specific module.

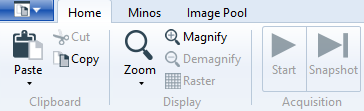

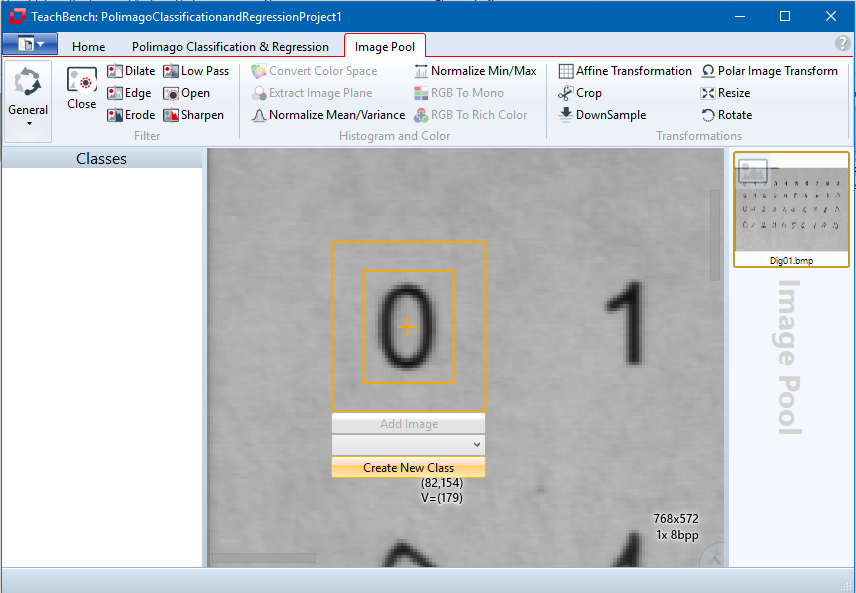

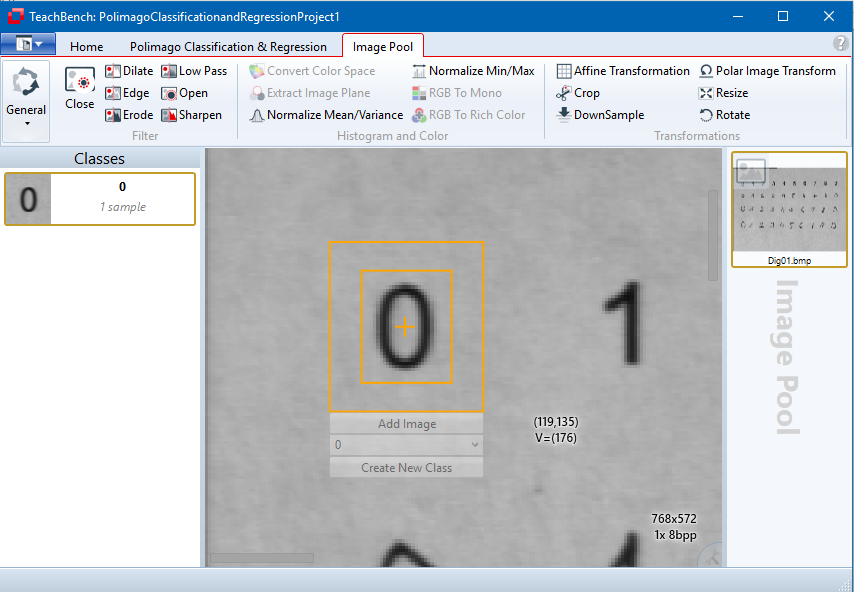

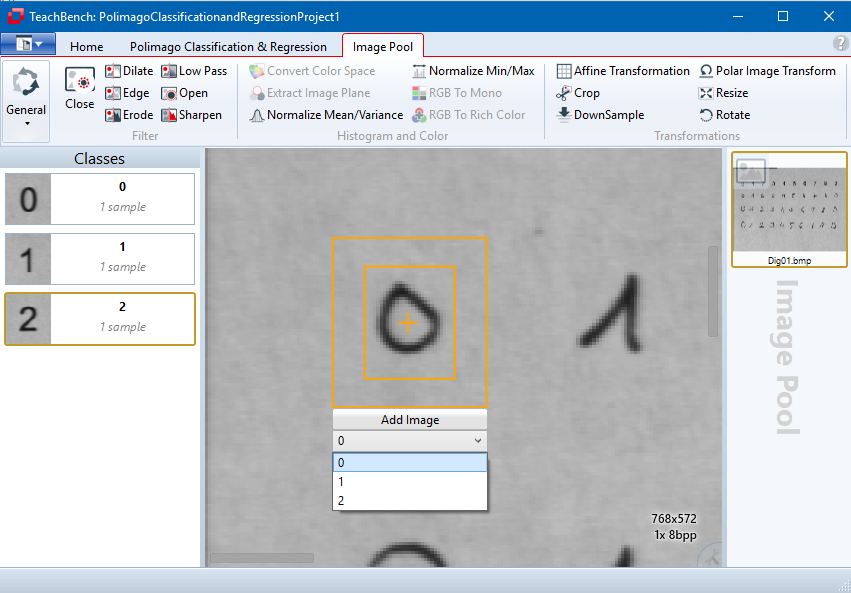

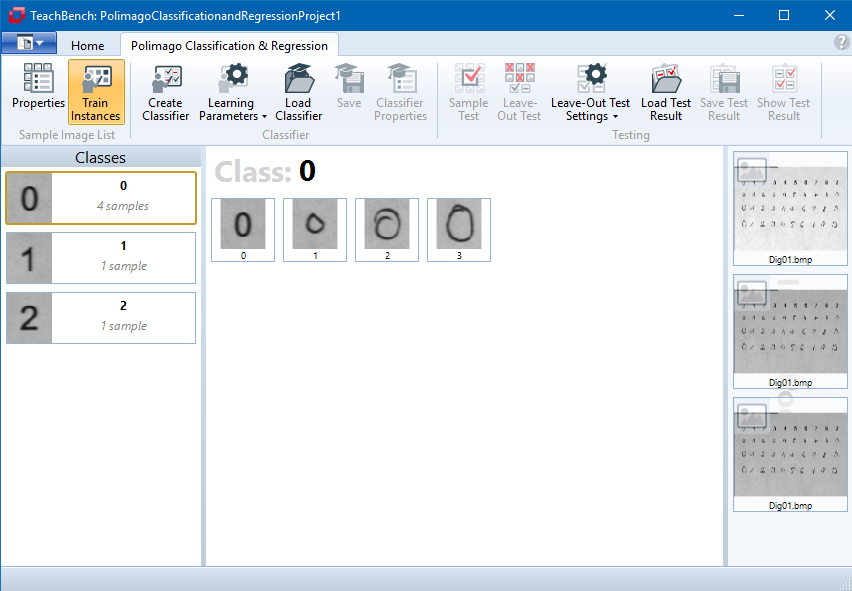

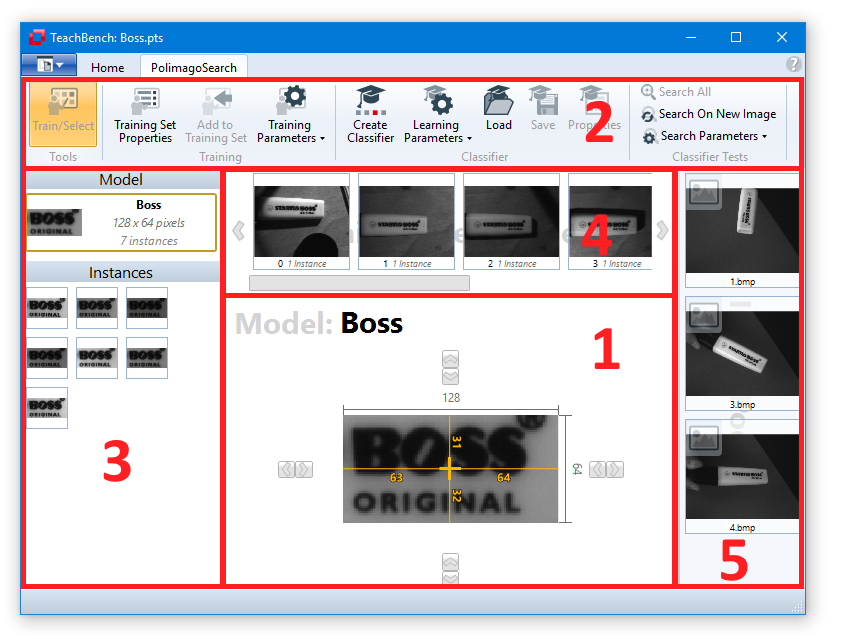

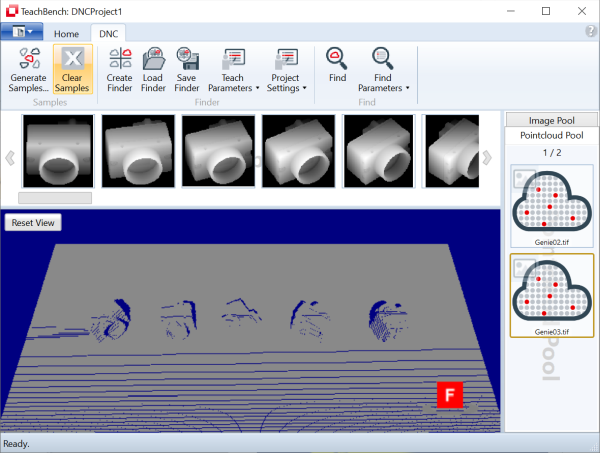

Managing and pre-processing opened images, videos and drivers

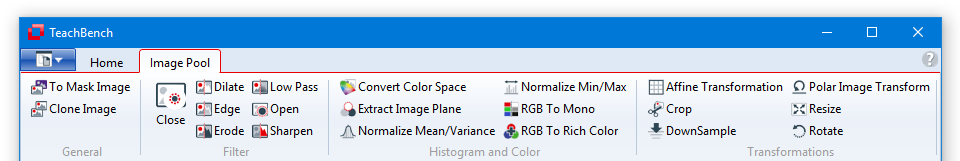

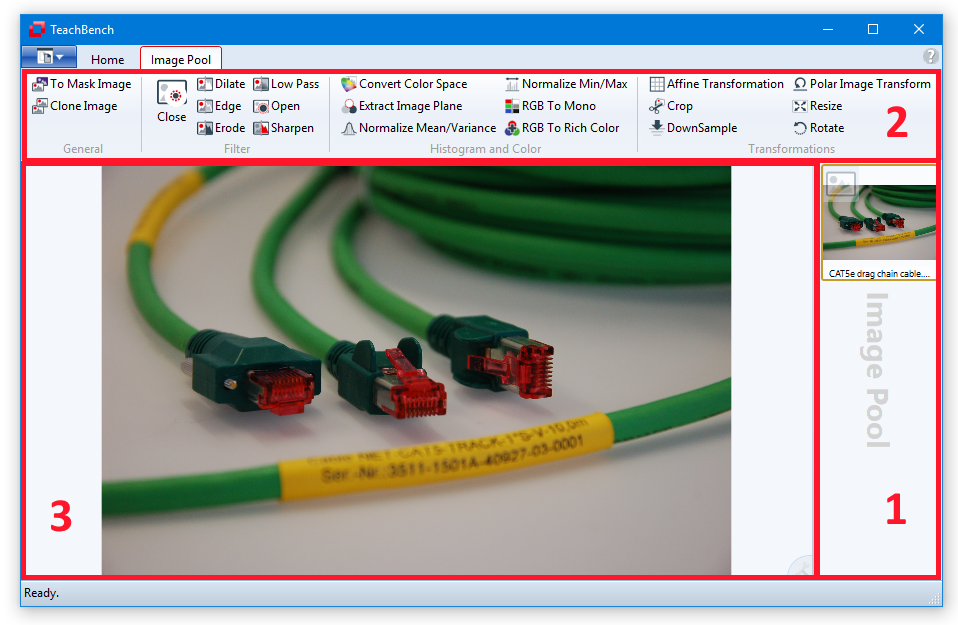

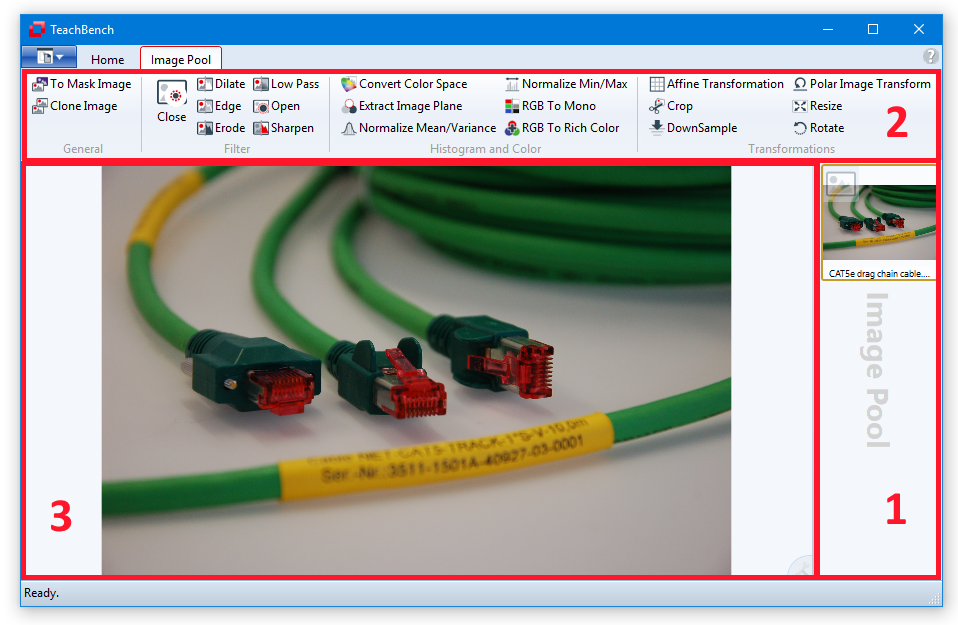

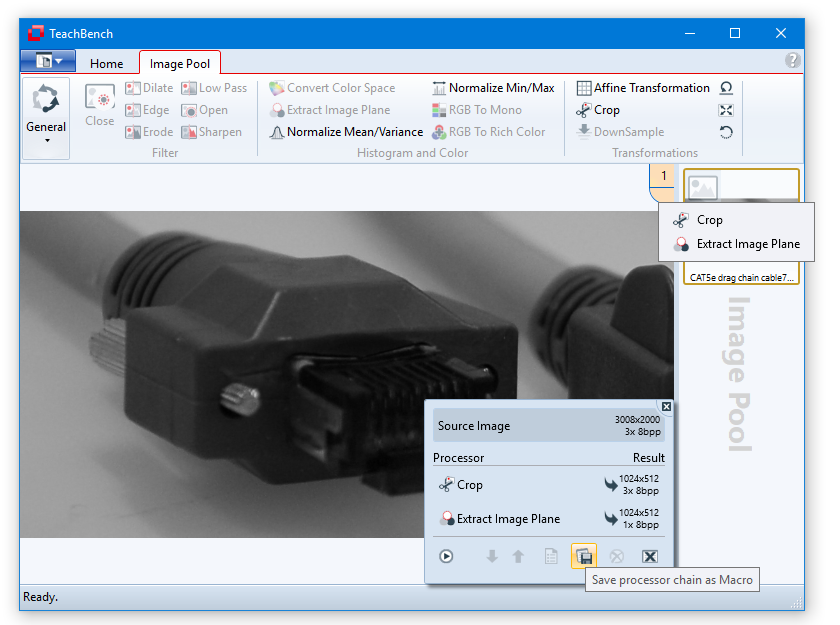

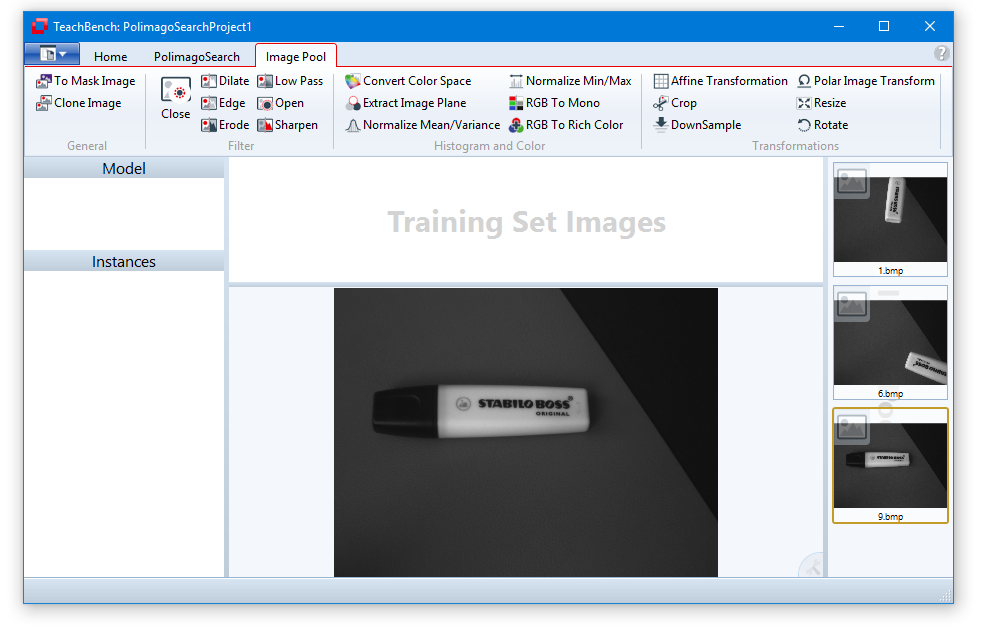

The Image Pool View consists of the Image Pool region itself at the right (1), the corresponding ribbon tab (2) and the center Editor View region (3). The Home tab is also available and provides functions regarding clipboard, display and acquisition (if a supported file is selected). The Image Pool region holds all added images, videos and drivers. Files can be added multiple times. When using the To Mask Image command, a copy of the current image is created as a mask image and immediately added to the Image Pool. Files can be added to the pool (opened) via the File Menu or by pressing F3.

In this section some exemplary work flows regarding images and videos/drivers are illustrated.

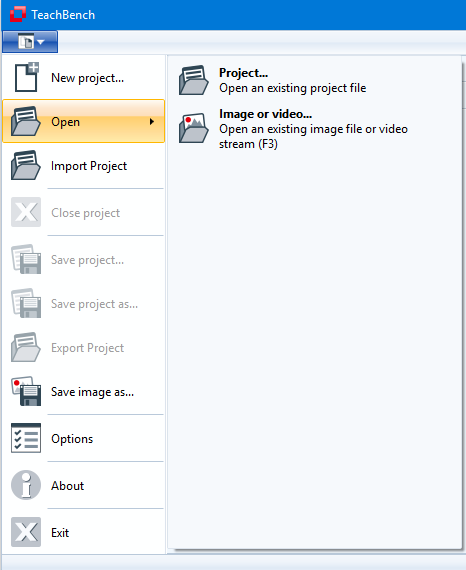

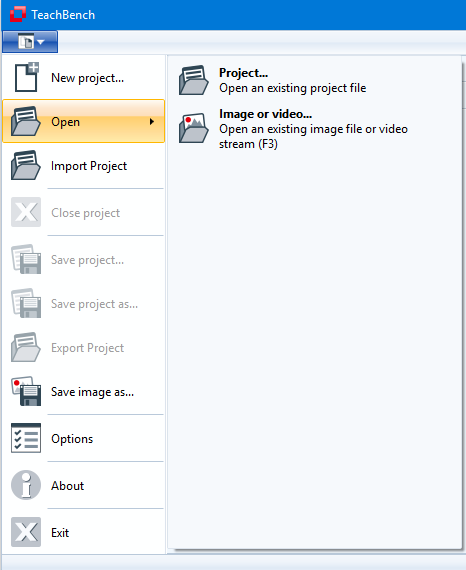

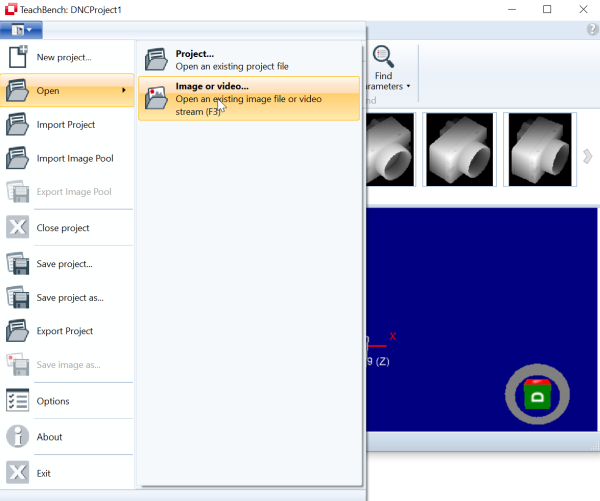

Images can be added to the Image Pool by opening them via the File Menu > Open > Image or Video.... The File Menu is located at the top left of the window. Image source files (bitmaps, videos and acquisition interface drivers) can also be opened by pressing F3.

Once an image has been opened, it is added to the Image Pool region at the right. Please note that an image is added to the pool as often as it is opened. So, one can add an identical image several times to the pool if necessary. As soon as an image has been added to the pool, it is displayed at the center Editor region. Here the mouse wheel can be used for zooming in and out of the image while holding down the CTRL key. When zoomed in, the image region can be panned by holding the CTRL key and dragging the mouse (left mouse button pressed).

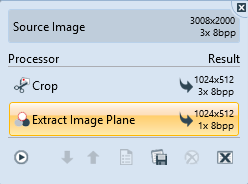

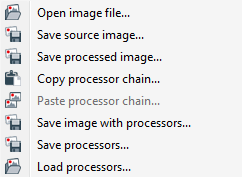

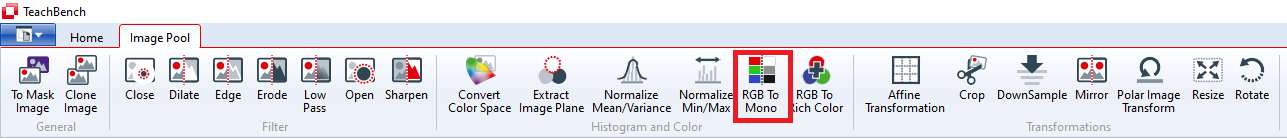

With the context menu of the Image Pool region (1) image processor stacks can be saved and loaded (applied) to a selected pool item. Images can also be saved via the context menu. Different image types are indicated by different icons. The processors of the Image Pool ribbon tab (2) are always applied to the image seen on the center Editor View Region (3). Some processors might be disabled (grayed out) in the ribbon tab depending on the currently selected image's format, size and other properties or the availability of licenses. The stack of applied image processors may be accessed via the icon at the lower right corner of the Editor View Region. Here the order in which the processors are applied and their parameters can be edited.

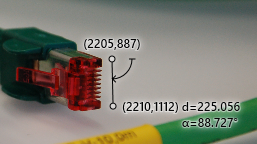

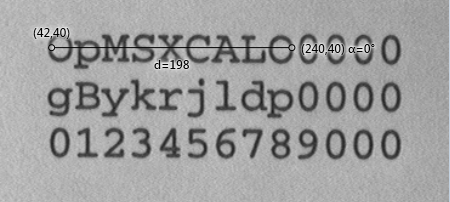

By holding down Shift and dragging the mouse (left mouse button pressed) on the display in the Editor View Region, you can bring up the Measurement Line Tool which displays distance and angle between start and end point:

In this section, the available Image Processors will be discussed and working with Image Processor Stacks will be described. The available image processors are located in the Image Pool ribbon tab. Under General you can find the To Mask Editor command (see section Mask Editor View) and the Clone Image command.

These are:

Note that generally, the ribbon button of an operator is only enabled if the image that is currently on display in the working area is compatible with it (for example a color space conversion will not work on a monochrome image). Furthermore, some of the operators require a license for the Common Vision Blox Foundation Package. If no such license is available on the system, those operators' buttons will remain disabled.

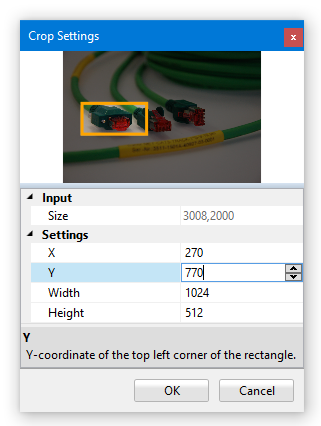

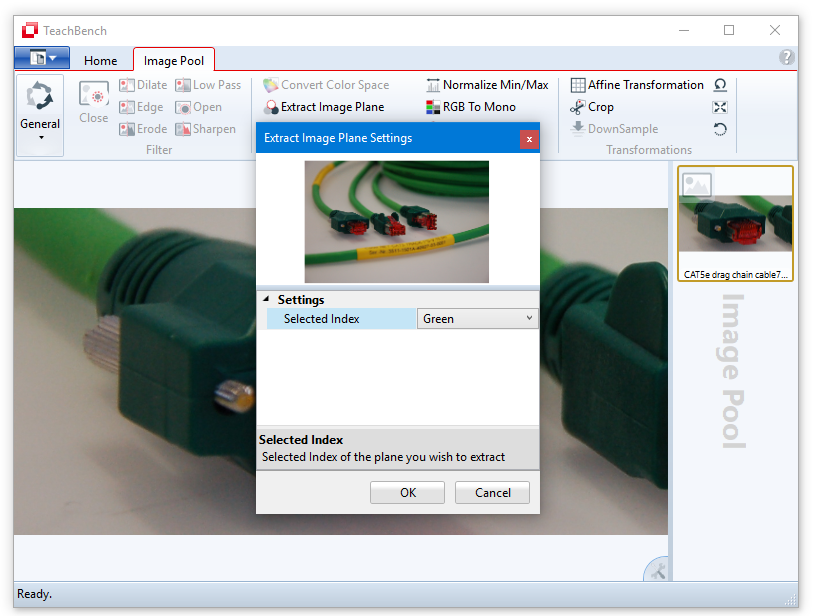

Some image processors are applied instantly to the current image by clicking the corresponding button in the Image Pool ribbon menu. Others will show a settings dialog first where various parameters may be set (when using the edit boxes in the settings window the preview will be updated as soon as the edit box looses focus). The image processors can be applied in any order and usually work on the full image. An exception is the Crop operator that can crop out a certain image area. If available for a given processor, a Settings Dialog with a preview is shown:

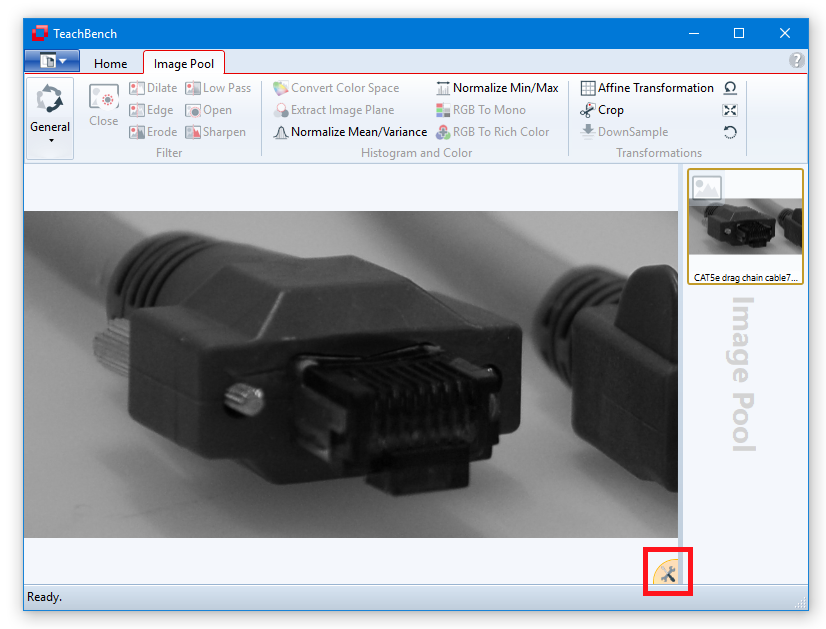

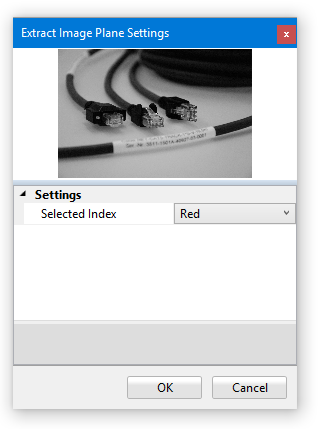

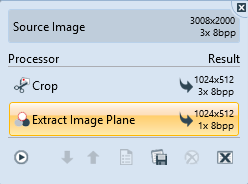

Once the image is cropped you may want to extract a single image plane (Extract Image Plane). Here, we choose the blue plane.

By confirming the operation, the image in in the Image Pool is updated. Please note that some image processors are now disabled because they are only applicable to color/multi-channel images. If at any given time an image processor is disabled, the currently selected image is not compatible (for example RGB To Mono cannot be applied to a monochrome image) - or a license for using this processor is missing.

Clicking the icon in the lower right corner of the main working area all image processors currently active on this image are shown in a small pop-up window. Here the order of their application may be changed as well as the settings of each image processor (second icon from right).

The complete stack of applied processors can also be saved (to an xml file), restored and applied to other images within the Image Pool via the context menu (right-click) within the Image Pool region.

A loaded processor stack is applied automatically as it gets loaded. In order to apply a stack of processors the image has to satisfy the input restrictions based on the processors in the processor stack. For instance, the processor loading (and successive application) will fail if the stack contains a gray scale conversion or plane extraction on an image containing only one plane (monochrome image). Also a crop will not work if the target image is smaller (width and height) than the region specified in the processor settings.

This section will discuss the following Image Processors:

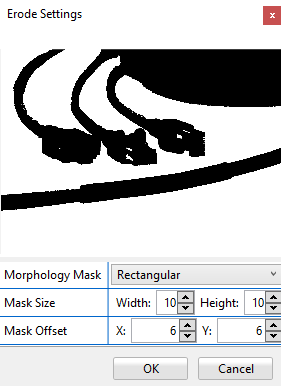

This section discusses the available morphological operations of the TeachBench. Morphological operations are a set of processing operations that are based on shapes (in a binary sense), the so-called Structuring Element which is applied to the input image and the pixel values of the output image are then calculated by comparing the currently processed pixel to those neighboring pixels that are part of the structuring element.

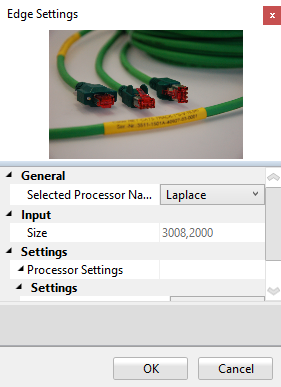

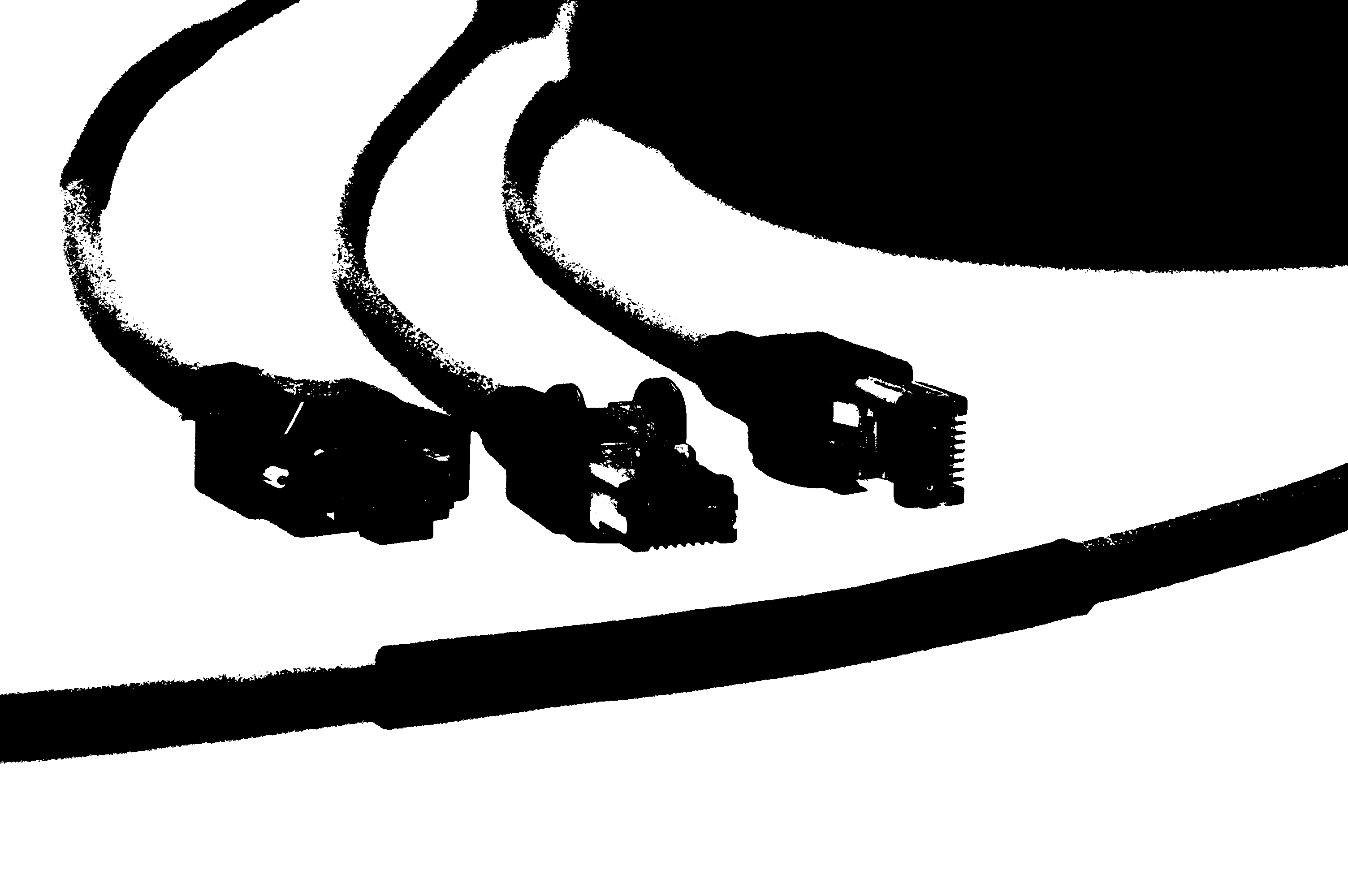

As the name implies, this tool lets you apply an edge filter to emphasize edges in an image. Edges are areas of strong changes in brightness or intensity. This image processor is represented by the following icon:

In the General section you can chose which processor you want to use. Under Settings you can chose the filter size (3x3 or 5x5) you want to use and, if the selected processor supports it, the desired orientation. Available processors are:

As the name implies, this tool filters the image with a low pass filter in order to blur (or smooth) it. If you want to blur an image significantly, you can apply this image processor several consecutive times. This image processor is represented by the following icon:

Under Settings you can chose between two different filter sizes, 3x3 and 5x5. This filter blurs an image by averaging the pixel values over a neighborhood.

Binary

With binary images it is easiest to show the effect of morphological files. Consider, for example, the following input image (generated over %CVB%Tutorial/Foundation/VC/VCThresholding):

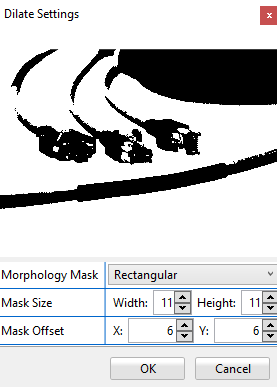

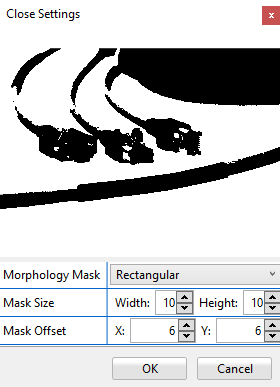

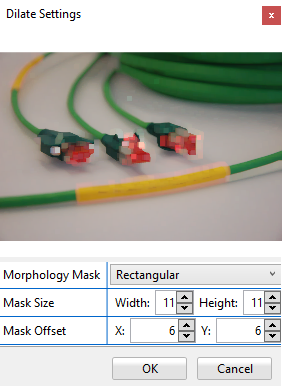

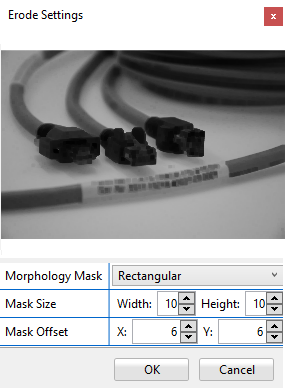

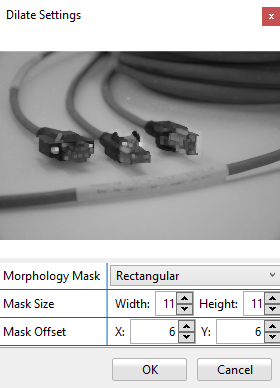

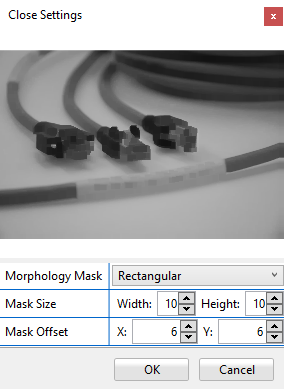

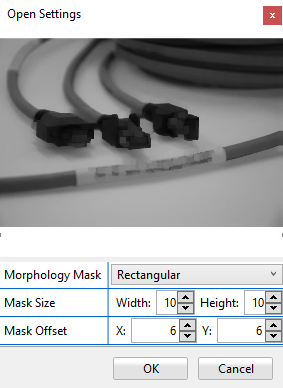

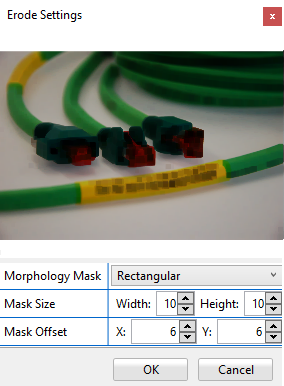

For all the operations that follow, the size of the morphological mask is defined by the parameters Mask Size Width and Mask Size Height i.e. a rectangular structuring element will be used. The mask has an anchor point whose relative position inside the mask is defined by the parameters Mask Offset X and Mask Offset Y.

Erosion

Erosion

The morphological technique of Erosion is also known as "grow", "bolden" or "expand". It applies a structuring element to each pixel of the input image and sets the value of the corresponding output pixel to the minimum value of all the pixels that are part of the structuring element, thus increasing the size of the black areas.

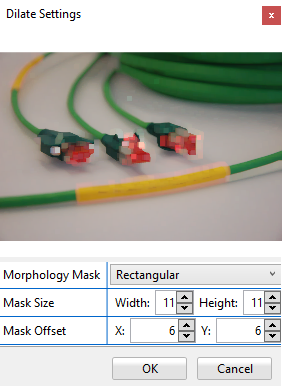

Dilation

Dilation

This method is the opposite morphological operation of the erosion. It applies a structuring element to each pixel of the image and sets the value of the corresponding output pixel to the maximum value of all the pixels that are part of the structuring element, decreasing the size of the black areas.

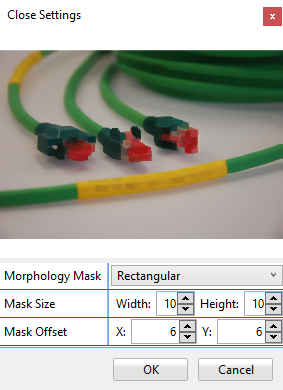

Closing

Closing

This method first dilates the input image and then erodes it. The Closing operation is usually used for deleting small holes inside an object. Inner edges get smoothed out and distances smaller than the filter mask are bypassed.

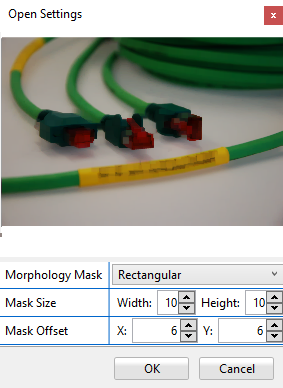

Open

Open

This method first erodes the input image and then dilates it. The Opening operator is used for removing small regions outside of the object. Whereas outer edges are smoothed and thin bridges are broken.

Grayscale

Using morphological operators on monochrome images applies the same logic as on binary images (see above).

Color

The results are again similar for color. Here, the operator is applied to every image plane separately, which may give rise to color artifacts.

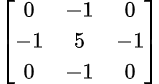

As the name implies, this tool sharpens the input image. This image processor is represented by the following icon:

It uses the following 3x3 filter kernel:

Please note: Underflow and overflow gray-values are truncated to 0 and 255 respectively. Although the function causes a visible "de-blurring" (sharpen) of images it also amplifies noise and should therefore be used with caution.

This section will discuss the following Image Processors:

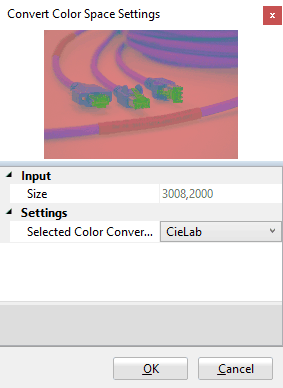

As the name implies, this tool converts an image from one color space to another. This image processor is represented by the following icon:

Under Settings you can select which color conversion you want to use. Available are the established conversions:

Note that the converted images are simply displayed as if they were RGB images, i.e. on YUV image the red channel represents the Y component, the green channel represents the U channel and the blue channel represents the V channel.

As the name implies, this tool extracts a single image plane from a multichannel (typically RGB) image. This image processor is represented by the following icon:

Under Settings you can select the image plane you wish to extract. The resulting image is a monochrome (1 channel) 8 bit image.

The Normalize command comes in two flavors: Normalize Mean/Variance and Normalize Min/Max. This image processors are represented by the following icons: and

and

Both commands will alter the histogram of the input image such that the output image makes use of only a selectable range of gray values (Normalize Min/Max) or that the histogram of the result image exhibits a gray value distribution with selectable mean and variance value (Normalize Mean/Variance). Both normalization modes may be useful in compensating variations in the overall brightness of image material (in such cases the mean/variance mode often provides superior results).

|

|

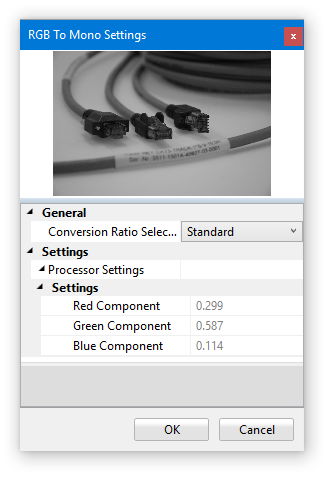

As the name implies, this function converts an RGB image to 8 bit mono. This image processor is represented by the following icon:

Under General, you can select the color ratio that will be used for the conversion. The Standard setting* uses:

Y = 0.299 R + 0.587 G + 0.114 B

Equal uses:

Y = 0.333 R + 0.333 G + 0.333 B

Custom lets you select different values.

This tool from the Histogram and Color section of the Image Pool ribbon menu converts an RGB image to Rich Color features. The purpose of this conversion is to enhance the color space by iteratively concatenating the available color planes. This image processor is represented by the following icon:

This function creates a nine-planar color image from a three-planar color image by adding color planes containing the information of R*R, R*G, R*B, G*G, G*B, B*B in plane 4 to 9 (normalized to a dynamic range of 0...255). Please note: Since the resulting image is no ordinary 3 channel color image, most operations cannot be performed on the resulting image (e.g. RGB to Mono).

This section will discuss the following Image Processors:

The Affine Transformation Tool from the Transformation section of the Image Pool ribbon tab launches the Affine Transformation editor which can be used for performing geometric transformations on the image. This image processor is represented by the following icon:

The available geometric transformations are:

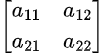

All these transformations may be described by a 2x2 matrix:

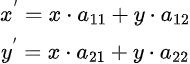

and transformation is then defined by the following equations:

Where [ x', y' ] are the coordinates of a point in the target image and [ x, y ] are the coordinates of a point in the source image.

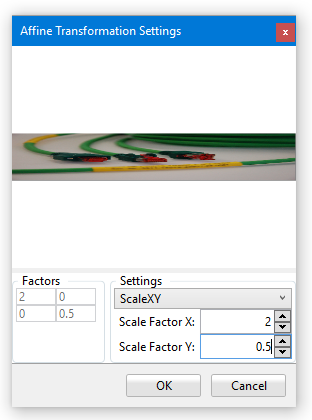

The four text boxes below the image at the left represent the 2x2 transformation matrix: top left a11, top right a12, bottom left a21, bottom right a22. At the right you will find a drop down box for selecting the type of transformation. Below you will find the text boxes where you can enter the desired values for the transformation operation. When using the edit boxes to modify the values please keep in mind that the preview will only update if the edit box looses focus. The available transformation operations are:

Free

If you choose the Free option, transformation matrix must be edited directly. This might be useful if a known matrix transform should be applied to the image - for all other purposes the settings described further down are probably easier to work with.

Rotation

Choose Rotation to rotate an image by any desired angle entered below the drop down box. Note that there is in fact and operator Rotate that may achieve the same effect.

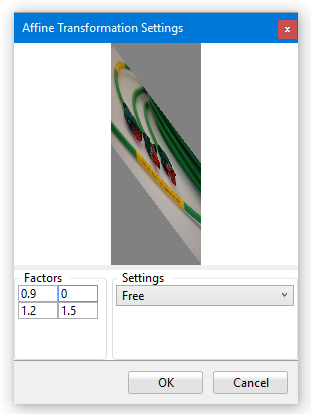

Scaling

You can change the size of an image with the Scale operation. Enter the desired value in the text box below the drop down menu, once you confirm by leaving the focus of the text box you will see the changes. Values less than 1.0 result in a reduction. Enter values greater than 1.0 up to a maximum of 10.0 to enlarge the image. For example, if an image of 640 x 477 pixels is enlarged by a factor of 1.5 (50%), the result is an image of 960 x 714 pixels. Note that there is an operator Resize that may achieve the same effect.

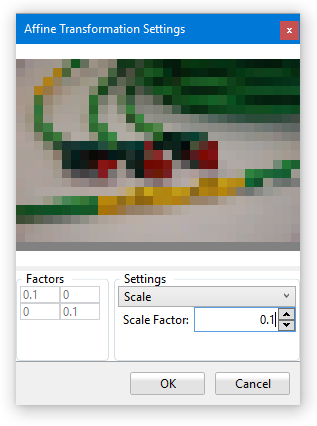

Rotate Scale

As the name suggests, this operation rotates and simultaneously scales the image according to the entered values Degree and Scale Factor. Its result is identical to the successive application of the Rotate and the Resize operator.

ScaleXY

Same as Scale - however independent scale factors for X and Y may be entered.

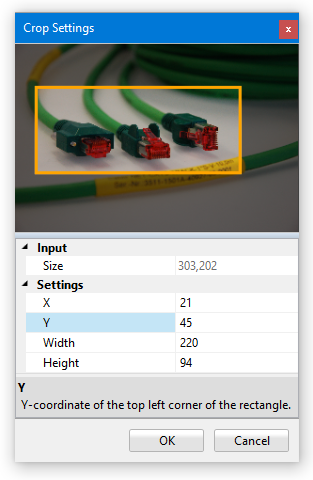

Crop

Crop

This tool allows you to crop the image and create a sub-image that only contains a part of the original image. You can either manipulate the AOI in the preview display with the mouse or change the values of X, Y, Width and Height with the corresponding text boxes.

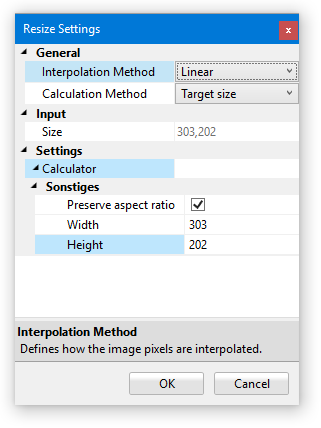

Resize

Resize

As the name implies, Resize allows you to change the size of an image. In the General section you can chose the desired interpolation method and whether you want to specify the desired image size in percent or in absolute pixels. The available interpolation modes are (depending on the availability of a Foundation Package license): none, nearest neighbor, linear, cubic or Lanczos. In the Settings section you can chose if you want to preserve the aspect ratio of the image.

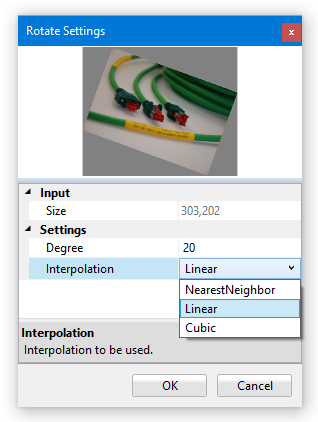

Rotate

Rotate

As the name implies, Rotate allows you to rotate the image. In the Settings section you can specify the number of degrees by which you want to rotate the image. Here you may also select the desired interpolation algorithm. Available Interpolation algorithms are: NearestNeighbor, linear and cubic. If no Foundation Package license is available, Linear interpolation will be the only valid option.

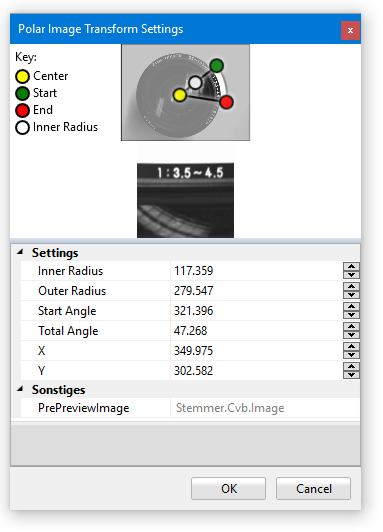

The Polar Image Transformation is useful for unwrapping circular structures in image (for example for teaching characters that are arranged in a circle like in the example below). This image processor is represented by the following icon:

The processor requires the following parameters:

This tool downsizes the image based on a Gaussian pyramid. Down Sample will cut it in half. For example, an image of 512x512 pixels will result in a resolution of 256x256 after one down sample operation. This image processor is represented by the following icon:

Note that many consecutive Upsample operation can easily bring images to a size where the memory consumption for these images becomes problematic. The TeachBench tries to handle these situations as gracefully as reasonably possible - yet simply clicking this operator 10 to 15 times in a row remains the most reliable way of rendering the application unusable or even driving it into an uncaught exception.

To create a Gaussian pyramid, an image is blurred using a Gaussian average and scaled down after that. Doing this several times will yield a stack of subsequent smaller images - a pyramid.

Videos and drivers can (just like images) be added to the Image Pool by opening them via the File Menu > Open > Image or Video... . The File Menu is located at the top left of the window. Files can also be opened by pressing F3.

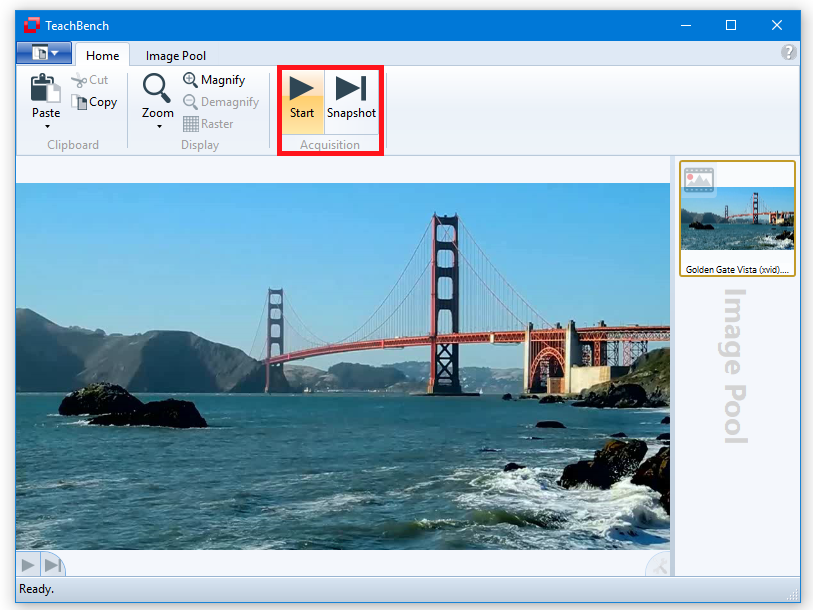

Once a file has been opened, it is added to the Image Pool region. Please note that a video is added to the pool as often as it is opened, so an identical video can be processed in different ways. A camera opened by a driver is only available once due to technical limitations (an image acquisition device can usually only be opened once). Once a video/driver has been added to the pool, it is displayed at the center Editor region. The TeachBench detects automatically if a file has been opened (selected from within the Image Pool) that supports a CVB Grabber Interface and will display the corresponding controls accordingly in the ribbon section. The Start button starts the grab (playback in case of a video or EMU, acquisition in case of a driver). Snapshot captures a single image or frame. With the Snapshot button, you can go through a video frame by frame.

Similar to working with images, the mouse wheel may be used for zooming while holding down the CTRL key; the video can be panned with CTRL + dragging (left mouse button pressed). Holding down Shift and dragging the mouse while pressing the left mouse button brings up the Measurement Line Tool which displays distance and angle between start and end point.

Similar to Image Processing, compatible Image Processors can be applied to Videos and Drivers as well. The workflow and features are the same as with images within the Image Pool - for instance, processor stacks can be edited, saved and restored. The full processor stack is applied to each newly acquired image.

When the Image Pool ribbon tab is selected, you may use the To Mask Image command to create an overlay-capable duplicate of the currently visible image. Note that when the TeachBench window is displayed with its minimum size, this command is moved into a drop-down gallery named General.

|

|

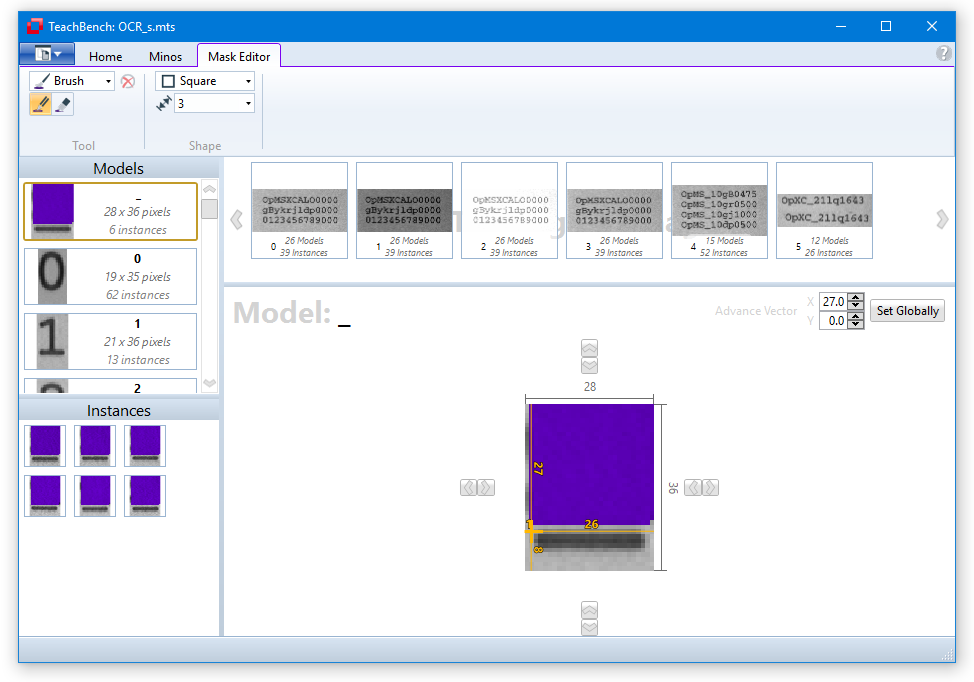

The mask editor may be used to edit a bit mask on Common Vision Blox images that have been marked as overlay-capable. This bit mask effectively hijacks the lowest bit of each pixel to define a mask that is embedded into the image data. Some Common Vision Blox tools may interpret this mask in specific way, for example it may be used by Minos to define regions where the learning algorithm may not look for features (known as "don't care" regions). The masked regions are displayed in a purple hue on monochrome images and as inverted regions in color images.

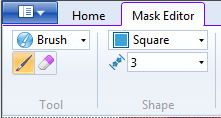

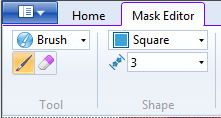

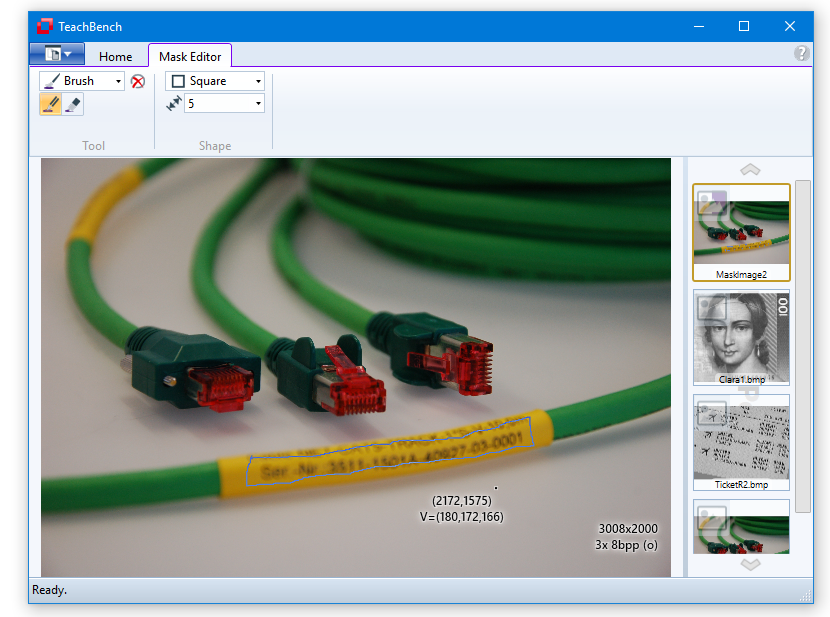

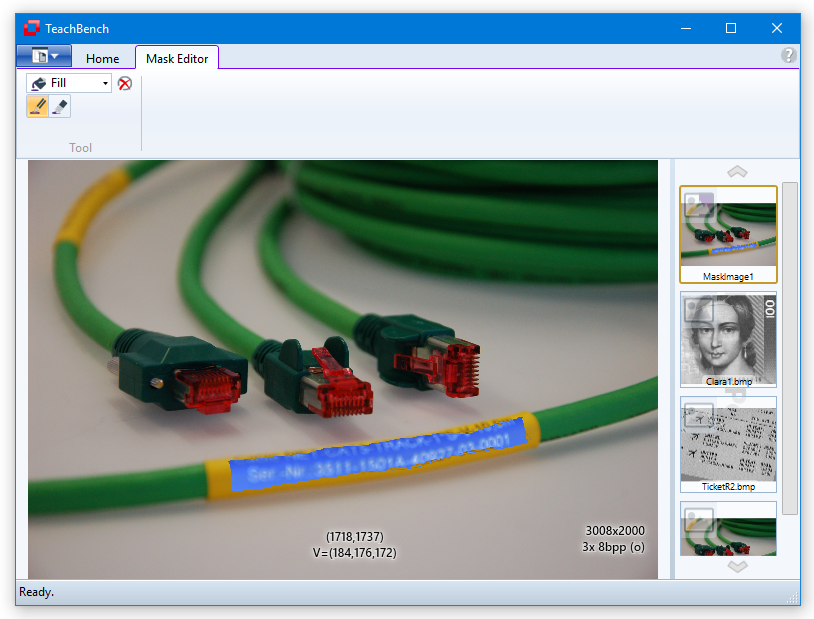

Whenever an overlay capable image from the image pool has been selected, the Mask Editor tab becomes available (whereas the Image Pool tab vanishes - the operations available for regular image pool images are usually not sensibly applicable to overlay-capable images as they will usually destroy the mask information). From the Tool section you may choose between Brush or Fill and between drawing (pencil) and erasing (rubber). In the Shape section various shapes and sizes can be selected for the brush-tip.

While the mask editor tab is available, you may "paint" on the image currently on display in the center region. The brush tool is useful for marking single small areas of the image. To mark larger areas it is recommendable to use first the brush tool to generate a closed line and then the fill tool. In the pictures below an area of the image has been outlined with the brush tool and is then filled with the fill tool:

The outlined area gets filled.

For an example how to use the Mask Editor to create Don't Care Regions see Don't care masks.

The main function of TeachBench is to provide an environment to work with pattern recognition projects. In these projects, patterns are taught (trained and then learned) using sample images in which the operator points out objects of interest and labels them with the information necessary for the pattern recognition tool to build a classifier. The training databases can be stored to disk (the actual format depends on the pattern recognition tool in question) and reloaded, as can the resulting classifier and (depending on the module) test results.

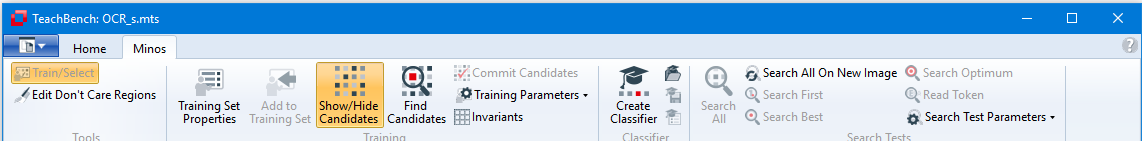

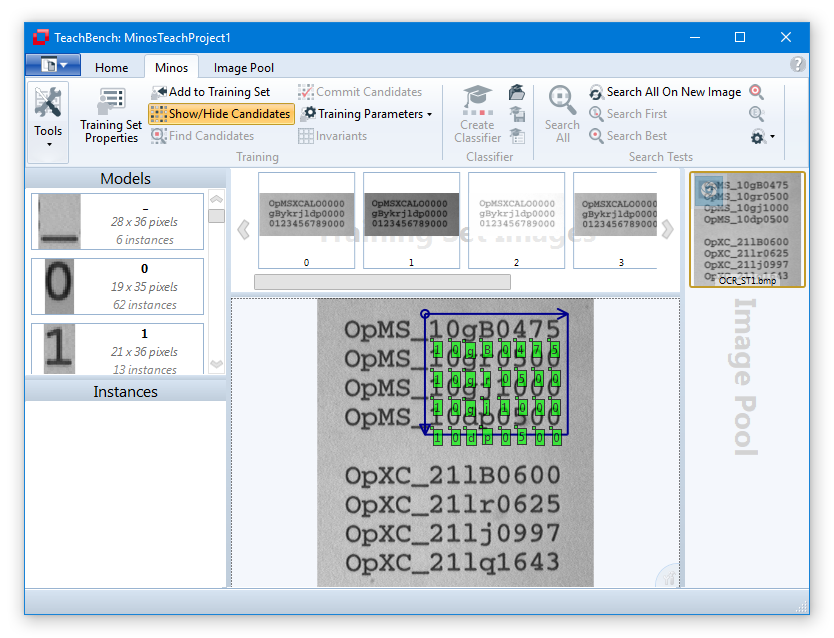

The Minos module for the TeachBench is intended to teach (first train then learn) patterns with the help of labeled sample images. The images which constitute the training material (Training Set Images) may be acquired from any image source supported by Common Vision Blox (i.e. cameras or frame grabbers, video files or bitmap files). A Minos classifier may contain one or more sample patterns - typical applications therefore range from locating a simple object inside an image to full-fledged OCR readers.

To train patterns, all the positive instances of the pattern(s) in the Training Set Images need to be identified and labeled by the operator. All image areas not marked as a positive instance may be considered to be counter-examples (also called Negative Instances) of the pattern. To be able to recognize an object that has been rotated or rescaled to some degree Minos may use transformations for the automatic creation of sets of models which differ in terms of size or alignment (see Invariants; note however that Minos is not inherently working size and rotation invariant an will not easily provide accurate information about an object's rotation and scale state).

Minos will use the instances and models defined by the operator to create a classifier which contains all the information necessary to recognize the pattern. When a new image (that is not part of the Training Set Images and is therefore unknown to a Minos classifier) is loaded and the classifier is applied to it via one of the available search functions, then Minos recognizes the taught pattern(s). As only the characteristics of the learned object(s) is/are stored in the classifier, the classifier is much smaller than the image information source (the Training Set Images) on which it is based. You can test a classifier directly in the TeachBench.

By switching between the learning/teaching of further instances of the pattern and testing the resulting classifier by means of the search functions in TeachBench, you can gradually develop a classifier which is both robust versus the deformations and degradations typically visible in the image and material and efficient to apply.

The sections that follow provide a guide for the steps involved in creating Minos classifiers and are divided into the following chapters:

To perform pattern recognition, Minos uses a technique which is comparable with the logic of a neural network. Patterns are recognized on the basis of their distinctive characteristics, i.e. both negative and positive instances of a pattern are used for recognition.

The criteria which distinguish between positive and negative instances are combined in a classifier in a so-called "filtered" form.

The classifier contains all the information necessary to recognize a pattern. The information is collected from various images (Minos Training Set, or MTS for short) from which the positive and negative instances of the pattern are gathered.

Once the pattern has been trained and learned, it can be recognized in other images. To do this, the classifier is used for the pattern search. We distinguish between two types of search function: pattern recognition and OCR.

Terminology

In this section the Minos specific terminology will be explained. For an overview of terms generally used in the context of TeachBench modules please refer to the Definitions section.

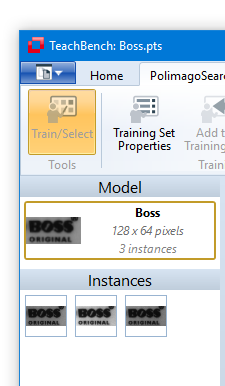

Model

A model in Minos is an object that is supposed to be recognized. It consists of at least one (positive) instance and is the product of the training process in Minos (see Creating Models and Instances). It is a user-defined entity. A model consists of at least one instance with its features and has a specific name. It has a specific size (region or feature window) and a reference point, these are equal for all instances that contribute to a single model.

Class

A class in Minos refers to the name of the model(s). A class is made up of at least one model. If multiple models are given an identical name, they belong to the same class. For an example see the graphic above: there are different "g" character models that all form the class "g". One should be careful when selecting the positive instances of a pattern which Minos is supposed to learn; for most applications it is advised to create more than one positive instance for a pattern before testing the classifier. In the case of patterns which are difficult to recognize, you should create at least ten positive instances before assessing the performance of a classifier. In general, the more positive and negative instances are learned, the more robust the classifier will be. However, excessive numbers of positive instances which barely differ in their properties will not significantly increase the performance of the classifier. The learning and testing processes are of decisive importance for the accuracy of the classifier and thus the application's subsequent success.

Performance

Minos offers a level of flexibility and performance which cannot be achieved by pattern recognition software which works on the basis of correlations. Despite this, correlations, with their absolute measurement of quality, offer advantages for many applications. For this reason, Minos also contains an algorithm for normalized gray scale correlation. In this procedure, the pattern and the images for comparison are compared pixel by pixel on the basis of their gray scale information.

If, up to now, you have only worked with the correlation method, there is a danger that you will approach your work with Minos with certain preconceptions which may lead to unintentionally restricting the available possibilities. We therefore recommend that you read the following sections carefully to make sure you understand how to work with Minos and are able to apply this knowledge in order to take full advantage of its performance.

Pattern Recognition

Minos is extremely efficient when searching for taught patterns. Thanks to its unique search strategy, it is able to locate patterns much more rapidly and with a far lighter computing load than software products which use correlation methods. Unlike the correlation methods, these search functions can also be used to recognize patterns which differ from the learned pattern in their size or alignment.

In Minos, it is enough to learn a single classifier in order to be able to search for a variety of patterns. This classifier may be used, for example, to locate the first occurrence or the first match for the learned pattern in the image. Alternatively, you may use the classifier to detect all occurrences of all learned patterns and record these, for example, to read all the characters on a page of text.

Flexibility

You can combine different search functions and multiple classifiers in Minos. This makes it possible to develop high-performance search routines for applications such as part recognition, alignment, completeness inspection, quality control, OCR or text verification.

Easy-to-use tools and parameters allow you to control the search process, location and size of the image segment to be searched as well as the pixel density to be scanned. This means that Minos can be quickly optimized for individual application needs.

Dealing with Noise

Minos can recognize patterns with ease even in images exhibiting differing level of brightness. However, the software is sensitive to large differences in contrast of neighboring pixels (in images which contain a high level of noise or reflection). Minos is most successful when searching in images in which the shifts in contrast within the pattern are predictable and uniform. If the pattern to be learned exhibits a high level of local contrast fluctuation, or if the background is extremely varied, you will need to learn more positive instances than what would normally be the case. Using this approximation method, you can ensure that Minos will acquire the largest possible range of pattern variations which are likely to occur within your scenario. In addition, you have to adjust the values of the Minos learning parameters to be robust against a higher level noise in the image (Minimum Quality parameter, Options) or reduce the sensitivity to local contrast (Indifference Radius parameter, Options). Another way to reduce noise in an image is to pre-process the search image using the image processing functions provided by the TeachBench (Normalization, Image filtering, Applying Image Processors and sub-sections).

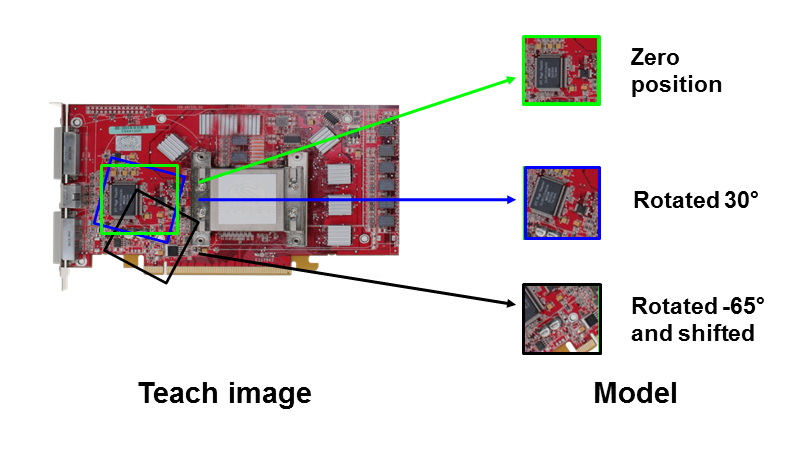

Coordinate System and Search Windows

Minos possesses two utilities which can be used to create "smart" search routines: flexible coordinate systems and intelligent search windows. Each pixel in an image is unambiguously defined in Minos in terms of its relationship to a coordinate system which is superimposed on the image. The coordinate system itself is flexible. That means that you can shift its origin and define its size and alignment starting from the point at which the pattern was located. This means that the position of a pattern can be described with reference to any other point. Similarly, the angle of rotation of a pattern can be expressed with reference to an alignment of your choice. Minos makes it easy to define the angle of rotation of a pattern and align the coordinate system correspondingly so that the image is normally aligned with reference to the coordinate system.

In this way, you can also search in rotated images just as if they were not rotated. When you compare this capability with correlation techniques, in which neither the scale nor the alignment of the image can be modified, you will quickly realize that Minos represents a new, higher performance approach to pattern recognition. What is more, intelligent search windows help you increase the efficiency of your application. Minos allows you to draw a rectangle as an AOI (Area Of Interest) in the search image (also see Testing a Classifier). You can specify the origins, proportions and search directions for this area. The properties of the search window are set with reference to the coordinate system, i.e. if you modify the coordinate system, the changes are also applied in the search window

In Minos it is often sufficient to create a small search window - it is unnecessary to create a large search window which demands considerable application processing time. In comparison with the search pattern, the dimensions of the search window may even be very small. It simply needs to be large enough to accommodate a pattern's reference point. Thus, it is possible to speed up the teaching and classification process significantly.

If the positioning point of a pattern is likely to be found within the region drawn in the bottom left corner, Minos need only search for the pattern in this area. Minos is able to find this pattern on the basis of its reference point.

Influence of the Coordinate System on Search Operation

For each search operation, the classifier is first transformed by the coordinate system. The coordinate system thus defines the "perspective" from which the image is to be viewed. The search direction of the Search First command is also influenced by the coordinate system: the closed arrowhead is the significant one here. Therefore, in an image in which the patterns to be found are arranged at an angle, a transformed coordinate system can considerably improve the speed of the search and the accuracy of recognition.

Quality Measure for Patterns

The Minos module of the TeachBench contains a utility named Find Candidates (see Adding Instances and Improving Models). This utility helps speed up the training process by pointing out potential instance candidates for the models that have already been trained. The potential candidates are decided upon by a correlation algorithm that runs in the background and looks through the available training set images for instances that might have been overlooked during the training process. Similarly, correlation between the original and a pattern detected during a search operation is calculated to determine an absolute quality measure of the search result (see Testing a Classifier). This measurement is performed on the basis of all pixels that have been determined to belong to the pattern during the learning phase (in other words: the correlation is only calculated over the features in the classifier, not the entire feature window).

Image Format Restrictions

Minos only works with single channel (mono) images that have a bit depth of 8. If you want to work with images that do not meet this requirement, you can use the image processors that are present in the TeachBench to make your image suitable. See Image Processing.

You can use TeachBench with Minos to teach patterns which can subsequently be searched for in new images. In this chapter we show how to teach a pattern in Minos and explain the components of the Minos TeachBench module. This section is structured along the steps of a typical work flow when creating a classifier:

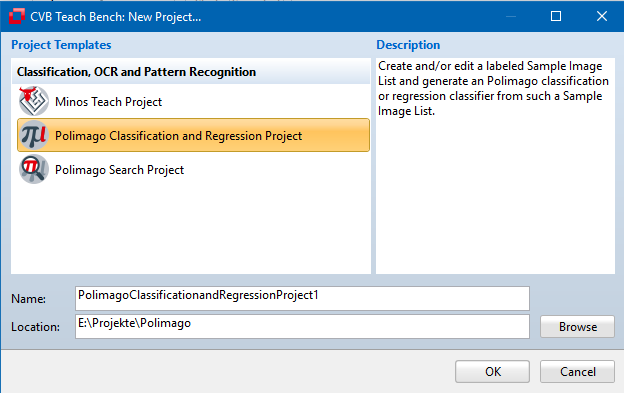

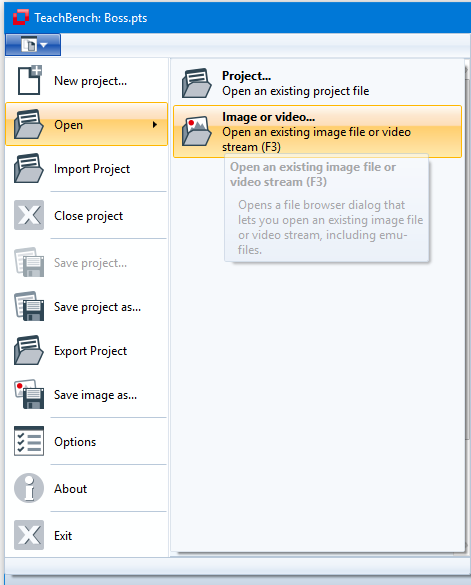

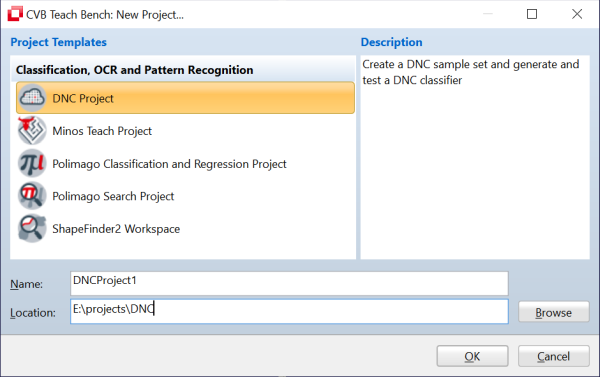

When starting from scratch and no MTS (Minos Training Set) file exists, it is advised to use the Project Creation Wizard. The wizard can be reached via the File Menu > New Project....

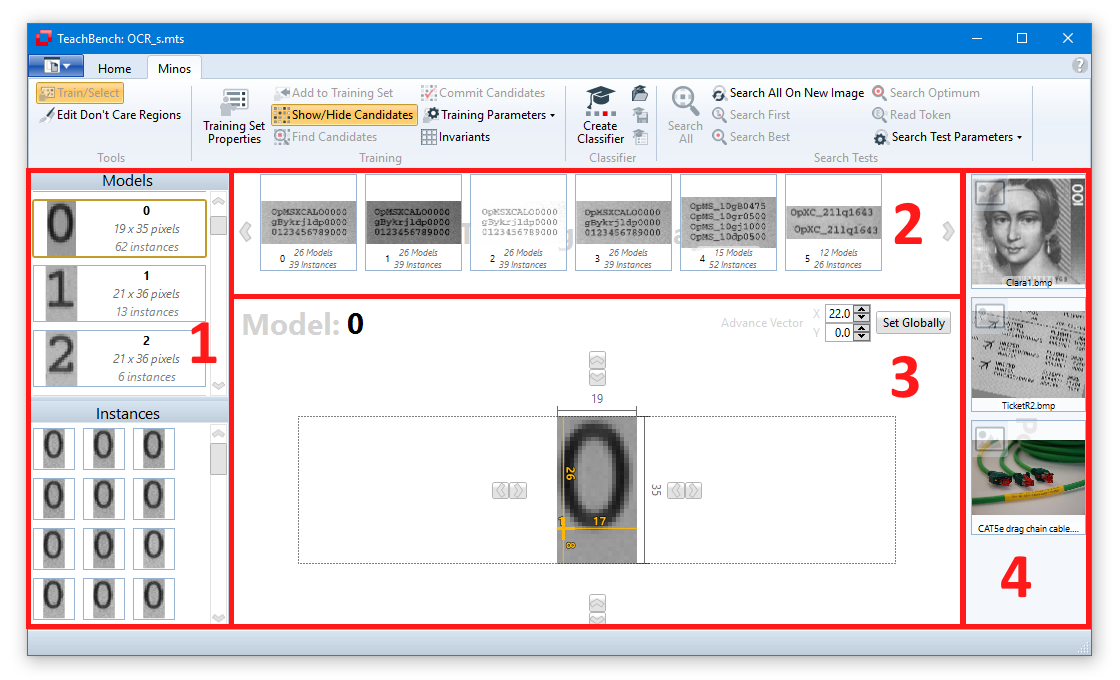

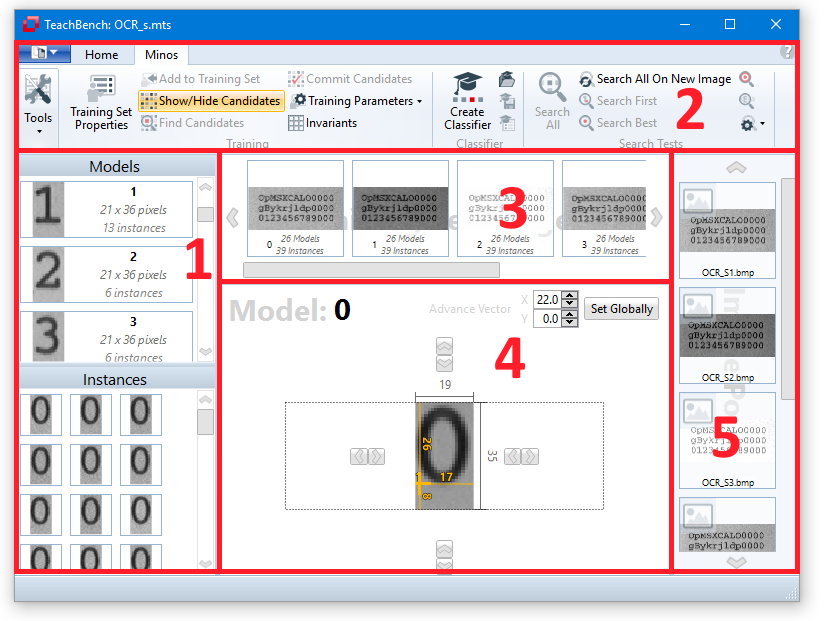

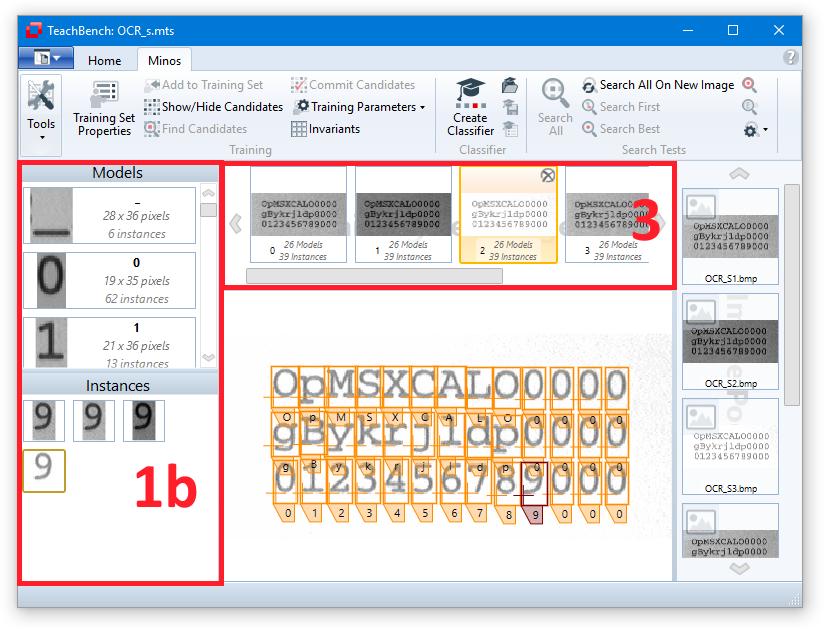

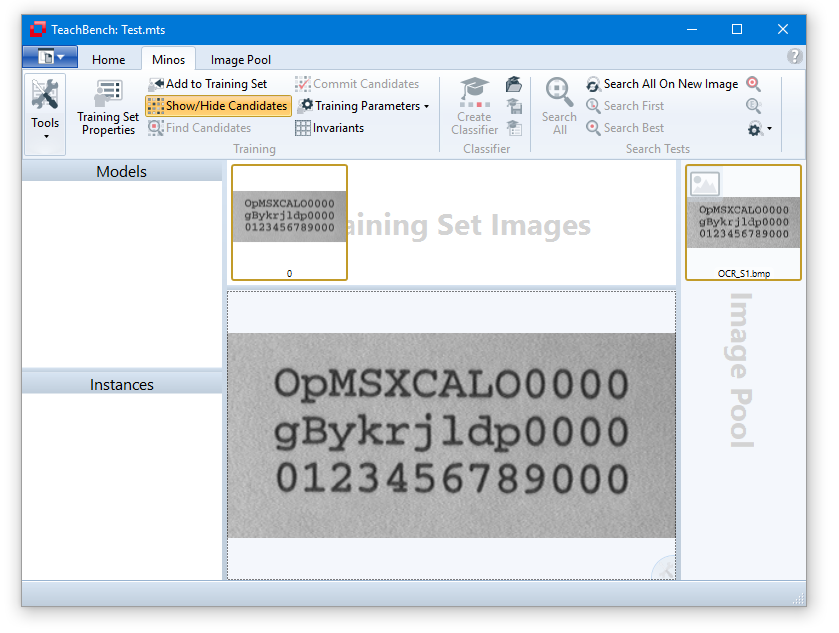

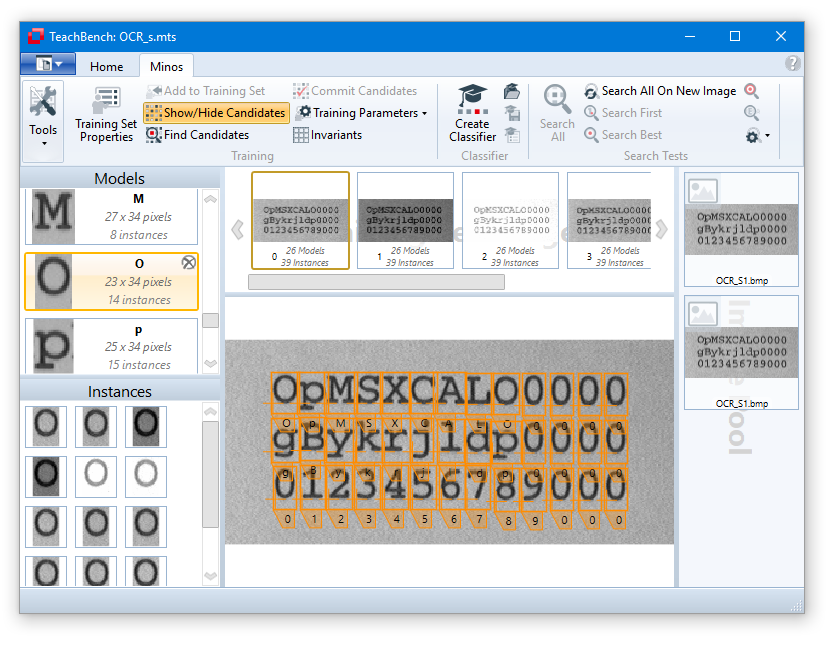

After confirming the dialog, an empty Minos View is shown which consists of the:

When saved to an MTS (Minos Training Set) file, the only information that is being saved are the Training Set Images (3) along with the models and their instances (1). Trained classifiers are to be saved separately via the corresponding button from the Classifier section of the Minos ribbon menu (2).

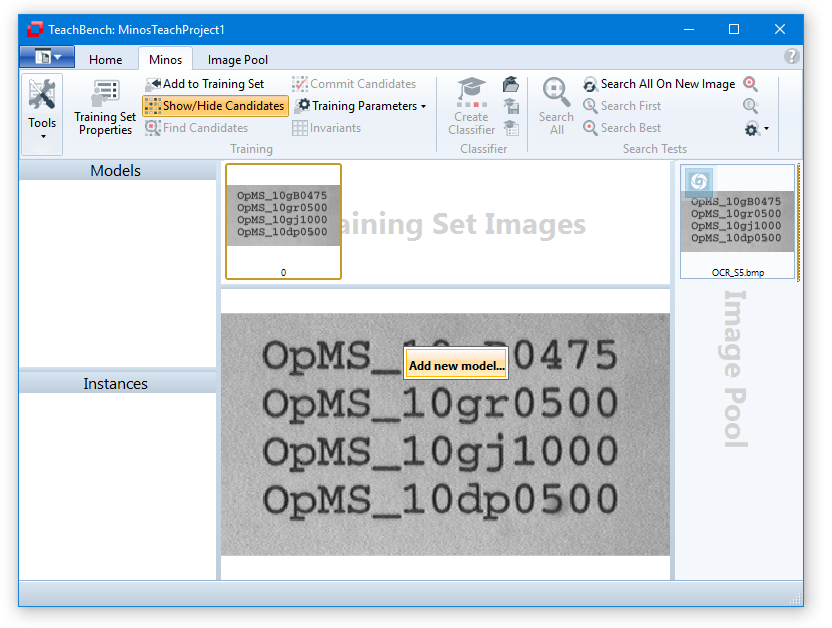

Adding images to the training set

When an image is opened, it is automatically placed into the Image Pool. Images can be added to the Training Set Images with the corresponding tool (button "Add to Training Set" at the top left ) from the Minos ribbon menu. Images can be removed from the Training Set by clicking the x-icon in the upper right corner, that will be shown when hovering the mouse over a particular image.

) from the Minos ribbon menu. Images can be removed from the Training Set by clicking the x-icon in the upper right corner, that will be shown when hovering the mouse over a particular image.

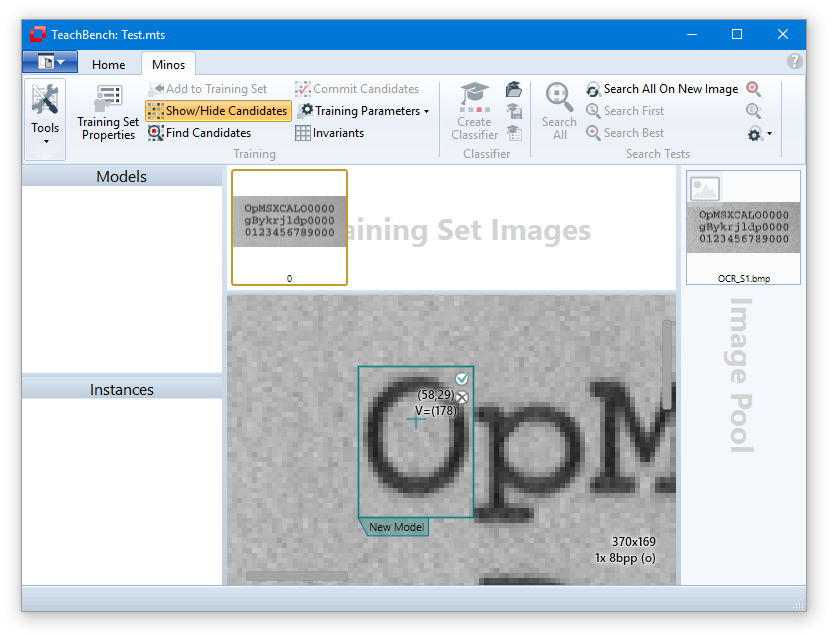

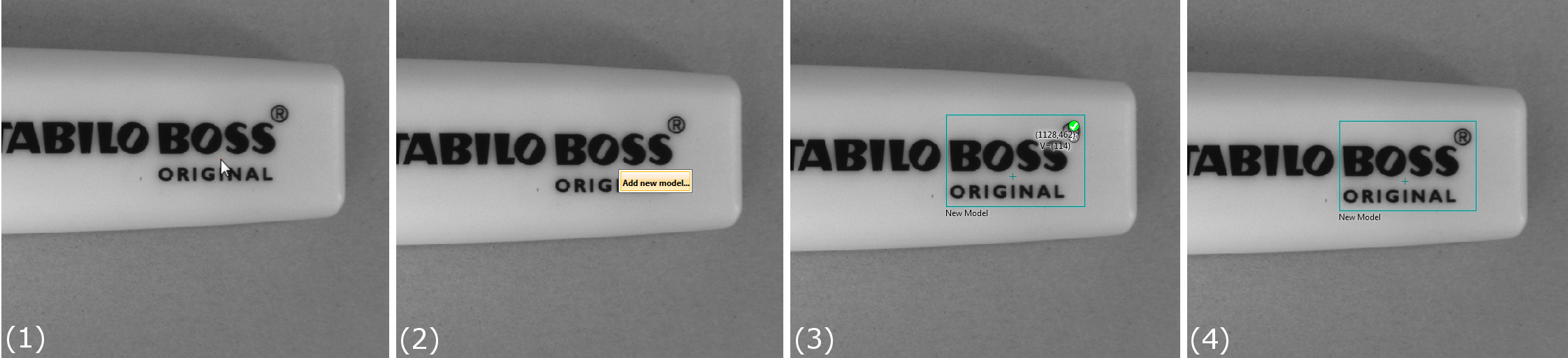

Adding an Instance

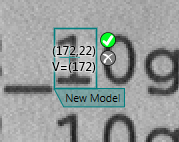

To create a new instance for a new model:

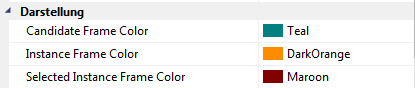

Please note: The color of the Instance Frame may be selected in the Minos options (Options).

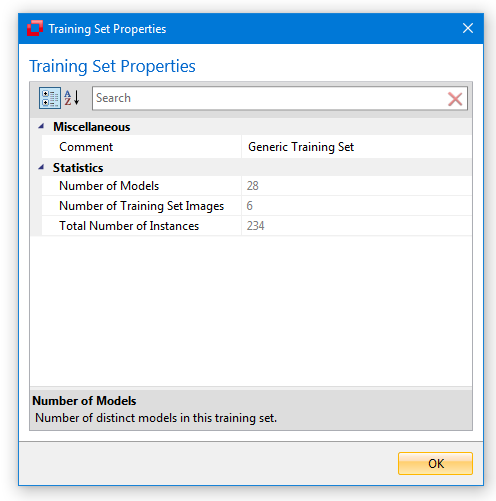

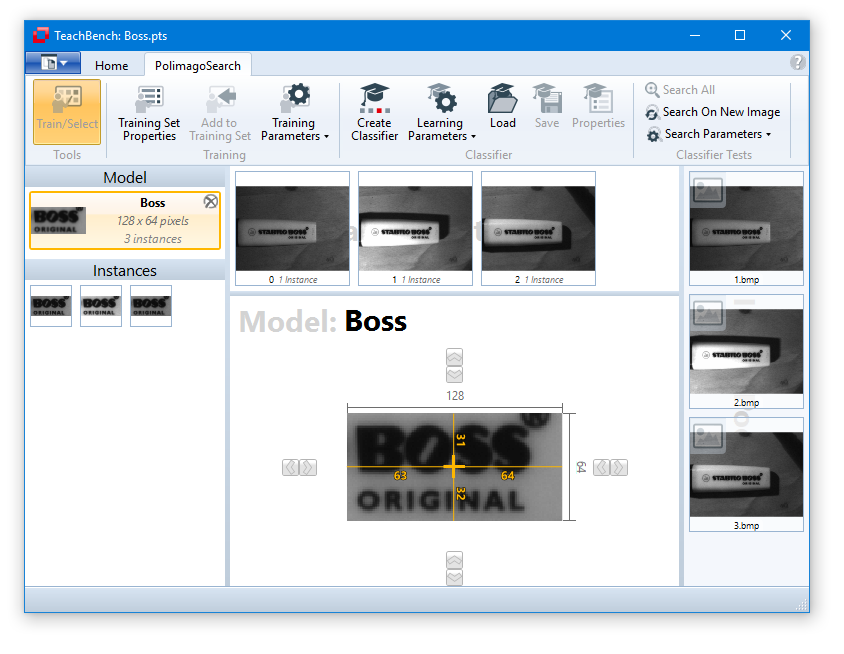

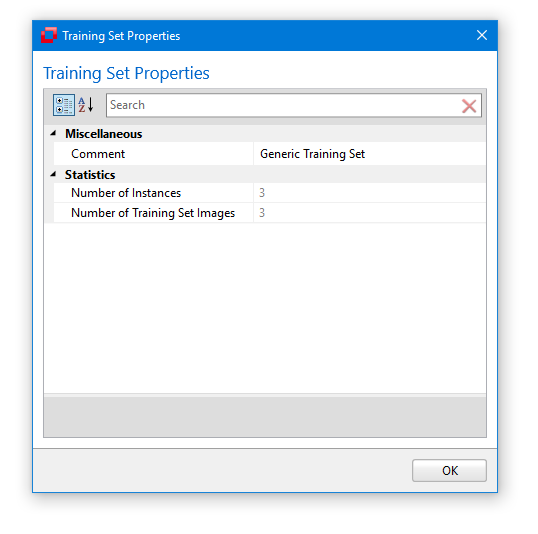

Training Set Properties

Once a training set has been created, you can review the training set properties via the corresponding button from the ribbon menu (to the left). Clicking this button will open a dialog window that shows various information regarding the currently loaded training set.

(to the left). Clicking this button will open a dialog window that shows various information regarding the currently loaded training set.

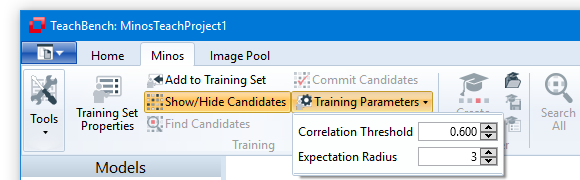

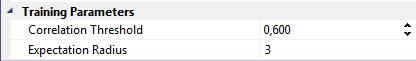

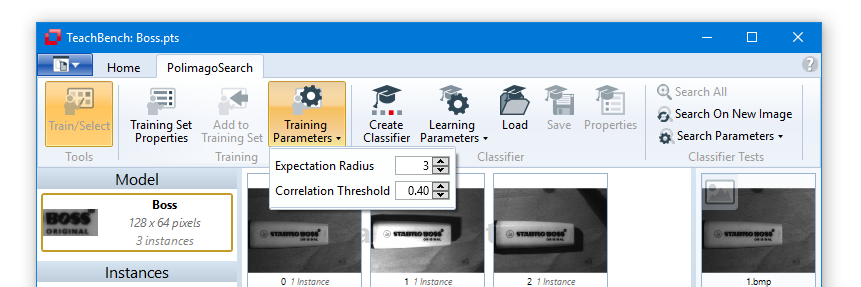

Before you actually start adding instances, you might want to review the available Training Parameters. Here you can adjust the Correlation Threshold and Expectation Radius. The values are grouped under Training Parameters in the Training Tools section of the ribbon tab. The meaning of the parameters will be explained in this section.

You may want to consider saving your training set often: The TeachBench does not currently provide any kind of Undo functionality, so any modification made to the Training Set will be committed to the data immediately and the easiest way to revert to an earlier state is to reload a saved Training Set.

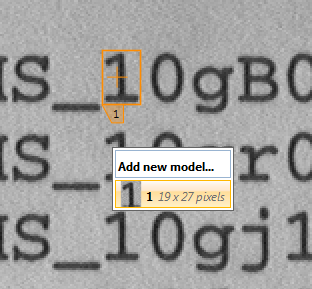

Manually Adding Single Instances

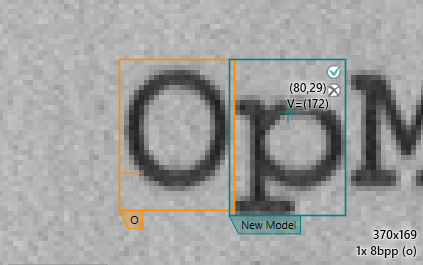

To add additional instances of an existing model, click the desired position (ideally by aiming for the reference point of the model in question) and selecting the model from the pop up window that opens. After the first instance for a model has been created, Minos is already able to approximately recognize the pattern. Thus when adding a new instance, all previous instances will be taken into consideration to best match the newly selected instance. To manually add an additional instance for an existing model it is therefore enough to roughly click the location of the reference point and Minos will try to best fit the region.

The area in which Minos tries to fit a new instance is determined by the Training Parameter Expectation Radius. The smaller the radius, the more accurate you will need to select the position of the reference point, whereas a fairly high value for the Expectation Radius may lead to the correction to drift a away to an unsuitable location (especially if only very few instances have been trained yet).

By the way: You may change the color of the Instance Frame in the Minos options (Options).

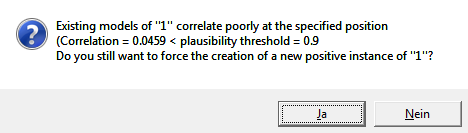

Poor Match Model

If you attempt to train an instance of an existing model that might be considered to be a poor match to the existing instances, you'll get a warning for a Poor Match to Model. In this case the correlation between the model image visible in the model list in the leftmost region of the Minos Module and the location that has been clicked is below the currently set Correlation Threshold and adding this instance to the model may decrease the resulting classifier's accuracy. If you click YES, the instance will be added to the model anyway - this is a way of passing information about the expected pixel variance in your pattern to the classifier and will effectively define a range of tolerance in which the acceptable patterns lie. If, however, you close "No" instead, the currently select reference point will be skipped and no instance will be added.

You should consider adding a poorly matching instance to the model only if the new instance actually represents an acceptable variation of the model to be expected in the image material. Note however, that if many of the variations leading to poorly matching instances are to be expected it is often a better choice to add them to new models with the same name.

In general, it is recommendable to have a large number of distinct instances for one model to grant good classification results. The instances should be distinguishable from each other. But, if successive samples do not contain much additional information, one runs the risk of unintentionally adding false information to the Training Set (for instance image noise), which may weaken the classifier. This can lead to so called Over Fitting of a classifier and can lead to many false positive results during search operations. Also, the various models should be as distinguishable as possible - select the regions accordingly.

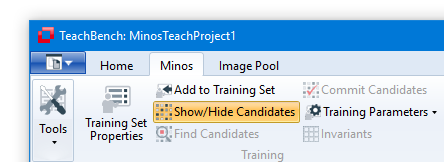

Once training has started and the first instances have been added it is possible to get automatically matched suggestions for additional instances by using the tool Find Candidates at the top left of the ribbon tab. The suggestions will be calculated for the image you are currently working on in the center Editor View Region. Before using this feature, the toggle button Show/Hide Suggestions

at the top left of the ribbon tab. The suggestions will be calculated for the image you are currently working on in the center Editor View Region. Before using this feature, the toggle button Show/Hide Suggestions has to be enabled. If Show/Hide Suggestions is enabled a search for new candidates will be carried out automatically whenever a new image has been added to the Training Set. Nevertheless it makes sense to trigger the candidate search manually from time to time - for example if new models have been added to the Training Set it's a good idea to re-evaluate the already available images.

has to be enabled. If Show/Hide Suggestions is enabled a search for new candidates will be carried out automatically whenever a new image has been added to the Training Set. Nevertheless it makes sense to trigger the candidate search manually from time to time - for example if new models have been added to the Training Set it's a good idea to re-evaluate the already available images.

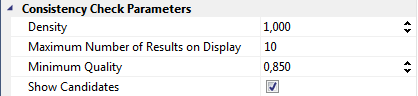

There are three parameters in the TeachBench Options > Minos dialog (see Options) that correspond to the Find Candidates feature. These are grouped under "Candidate Search Parameters":

Density

The density parameter defines the search density to be used when looking for candidates (for the definition of the density please also refer to the Definitions section). Higher density values will result in a (significantly) higher search time - however the search will be done asynchronously, leaving the TeachBench as a whole available for other things. Setting the density to a lower value makes it more likely for this feature to miss a candidate in an image. While a candidate search is happening in the background, the TeachBench's status bar will show this icon:

Minimum Quality

The value of Minimum Quality is essentially a correlation threshold that determines the minimum matching level which a potential candidate must have to pop up in the image.

Maximum Number of Results

This value determines the maximum number of results that will be simultaneously shown in the central Editor View Region. Found suggestions are arranged by descending matching score so that the best ones will be shown first. If you confirm or reject a suggestion, the next one in line will be shown (if available). Limiting the number of results simultaneously on display helps preventing the Editor View from becoming too cluttered. The suggested candidates may be rejected or committed one by one to their corresponding Training Set models by clicking the respective button shown inside or next to the candidate frame. All currently visible suggestions may be added at once with the Commit Candidates button from the ribbon menu.

By the way: The color of the instance frames may be selected in the Minos options. See Options Window.

If training has already covered (approximately) all the models that are going to be needed, there is yet another feature that makes training easier, called "Next Character".

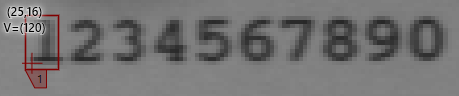

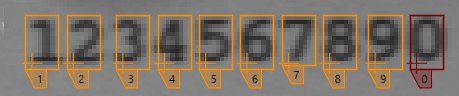

Consider the following situation: You are working with a training set that has all the characters 0-9 already trained from at least one image. Now add another image with one (or more) line(s) of numbers and manually mark the first character in the topmost row:

If all the subsequent characters (2, 3, 4, 5, 6, 7, 8, 9, and 0) have already been trained (and, if necessary, had their OCR vector adjusted) the remaining string can simply be trained by pressing the characters that follow in the correct sequence (in this case simply 2, 3, 4, 5, 6, 7, 8, 9, 0):

This works as long as three conditions are met:

The way the "Next Character" feature works is fairly simple: The moment you press the character to be trained next, the TeachBench will add the currently selected instance's advance vector (in this case the advance vector of the model '1') to the position of the selected instance and act as if you had clicked there and selected the model named with the character you just pressed. This means that the correlation threshold and the expectation radius (see here) will apply just as if the new instances had been trained by clicking their locations in the training set images with the mouse.

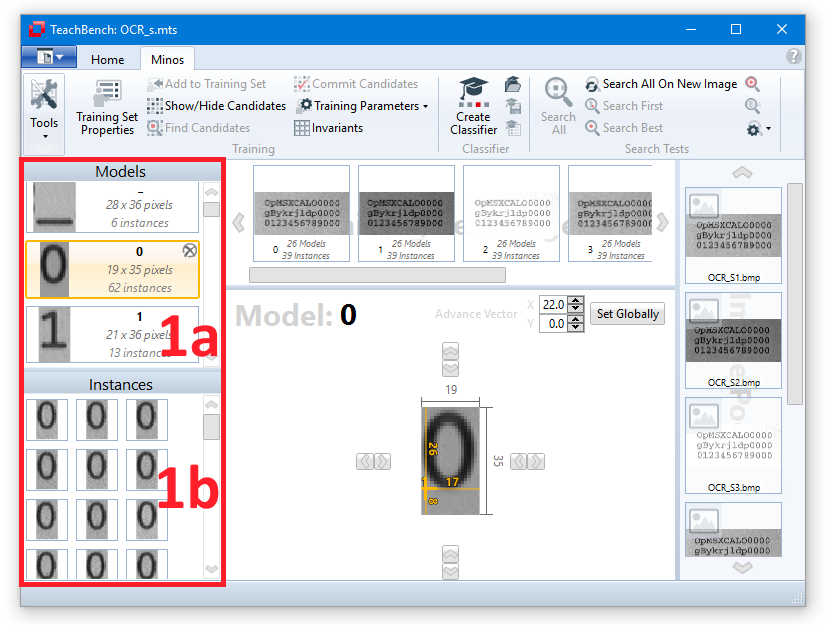

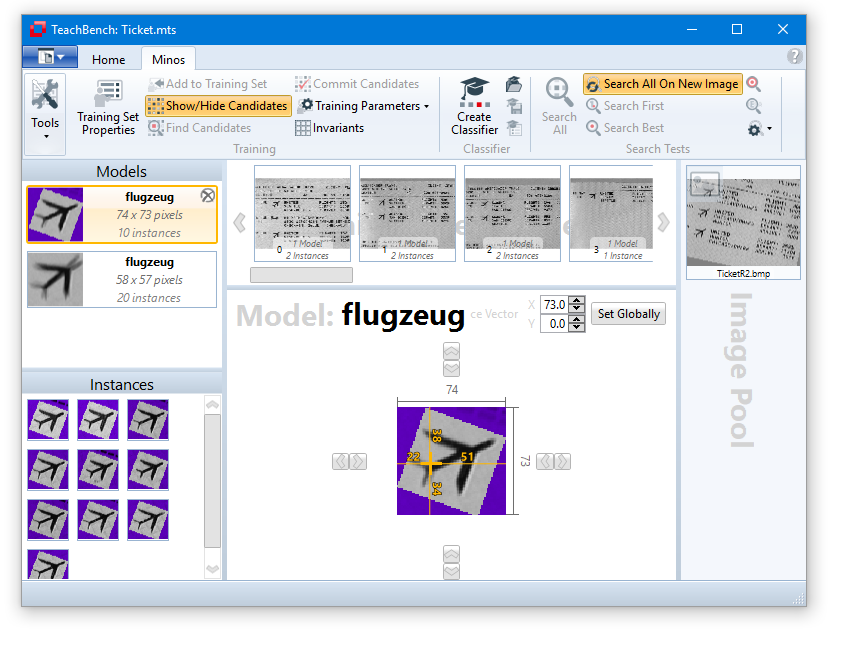

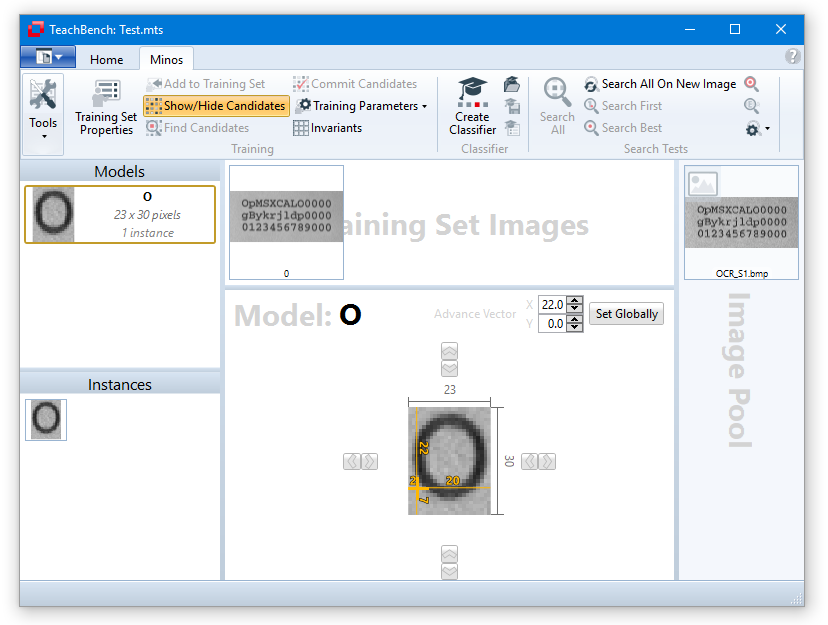

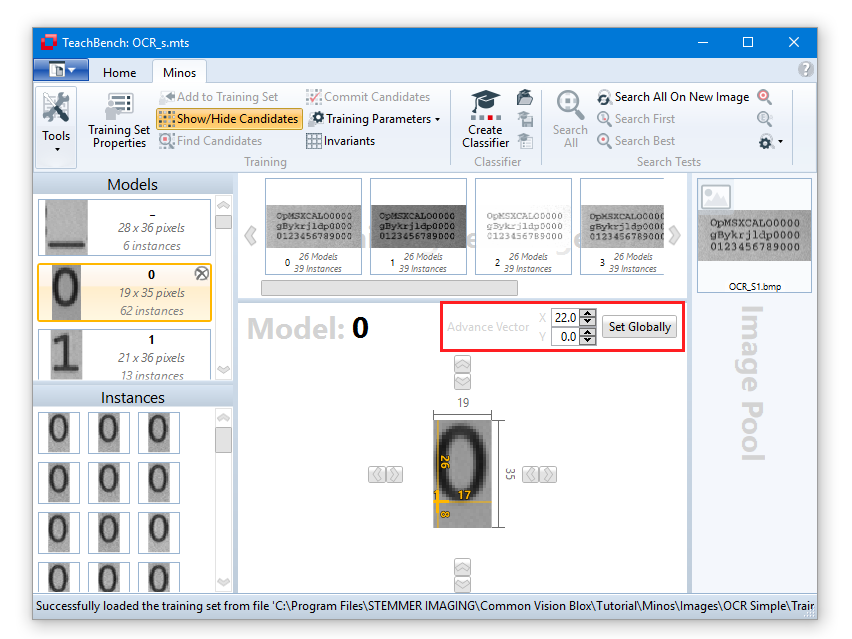

Selecting a model from the Models/Instances region (leftmost region of the TeachBench) will switch to the Model Editor View. Here you may fine-tune the feature window, move the reference point, change the Advance Vector parameter and the model name (for details on advance vectors see Defining an Advance Vector).

If there are parts of the instances you don't want to use for classification, the Mask Editor tool may be used to mask out regions in the model that are irrelevant for training (see Mask Editor View) - particularly useful when training models that have overlapping feature windows.

tool may be used to mask out regions in the model that are irrelevant for training (see Mask Editor View) - particularly useful when training models that have overlapping feature windows.

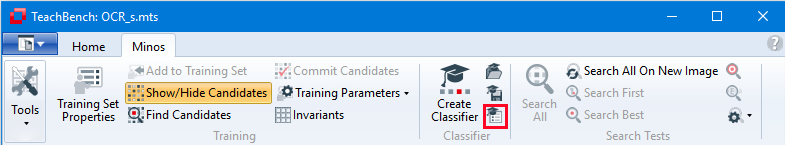

Before you start creating a classifier you should make sure that a sufficient number of instances for each model has been created (the number of created instances is shown for every model at the Models View region at the left). To train the models and instances, simply use the Create Classifier button from the ribbon menu Classifier section and save it with the Save a Minos Classifier button

from the ribbon menu Classifier section and save it with the Save a Minos Classifier button

Classifier Properties

When you've learned or opened a classifier, the Classifier Properties can be display via the equally named button from the Classifier section of the Minos ribbon tab. Here you can set a description and see the (read only) parameters that have been used for learning. Additionally you can find various data here like the date of creation, date of last change, model names and description.

from the Classifier section of the Minos ribbon tab. Here you can set a description and see the (read only) parameters that have been used for learning. Additionally you can find various data here like the date of creation, date of last change, model names and description.

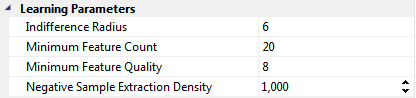

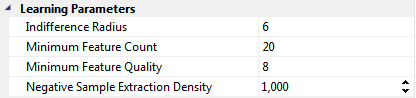

Learning Parameters

A Minos classifier can be optimized in various ways. Besides choosing the appropriate feature window and distinct model representations during training, you should also consider the Learning Parameters Minos provides. These parameters can be found in the Minos tab of the option menu (also see Options).

Indifference Radius

This parameter specifies the spatial distance between positive and negative instances. It defines a square whose center is located at the reference point of the positive instances. From the remaining area (pixels) outside the indifference radii of a Training Set Image, Minos may extract negative instances. In other words: The Indifference Radius defines a spatial buffer (or "neutral" zone) around positive instances where no negative instances will be extracted from. For a definition of the radius see also the Definitions section.

Setting a higher value than the default of 6 for the indifference radius may be useful if it is necessary to recognize exceptionally deformed patterns or patterns with high interference levels. In contrast, lower values result in a more precise position specification of the search results of the classifier. If uncertain, it is advisable to leave the default value unchanged.

Minimum Feature Count

Specifies the minimum number of features used for each model. Lower values result in shorter search times when using the resulting classifier whereas higher values result in increased accuracy.

Minimum Feature Quality

The minimal threshold of the contrast of a feature. Lower values result in lower contrast features to be accepted into the classifier, effectively enabling the classifier to also recognize extremely low contrast objects, whereas setting a higher value will result in features that are less sensitive to interference in the image (e.g. noise), making the classifier as a whole more resilient to noise-like effects in the image.

One should only set a higher-than-default Minimum Feature Quality if the images used for the training process possess a high level of interference (noise) and therefore a relatively high number of false negatives (patterns which are not recognized as such) are expected during testing of the classifier. Lower-than-default values are only recommendable if the aim is to recognize low contrast object ("black on black").

Negative Sample Extraction Density

This has a similar effect as the Search Density parameter (see Search Parameters). During the learning process, a grid is superimposed on the images of the training set and only the grid points will actually be checked for potential negative instances. The density of the grid is defined using the value of Negative Sample Extraction Density (for a definition of "density" see the Definitions section).

Lower values may considerably reduce the time required for the Learning Process but increase the probability that useful negative instances of a pattern may not be detected which may decrease the classification capabilities of the classifier. It is generally recommendable to use the default value of 1.0 here.

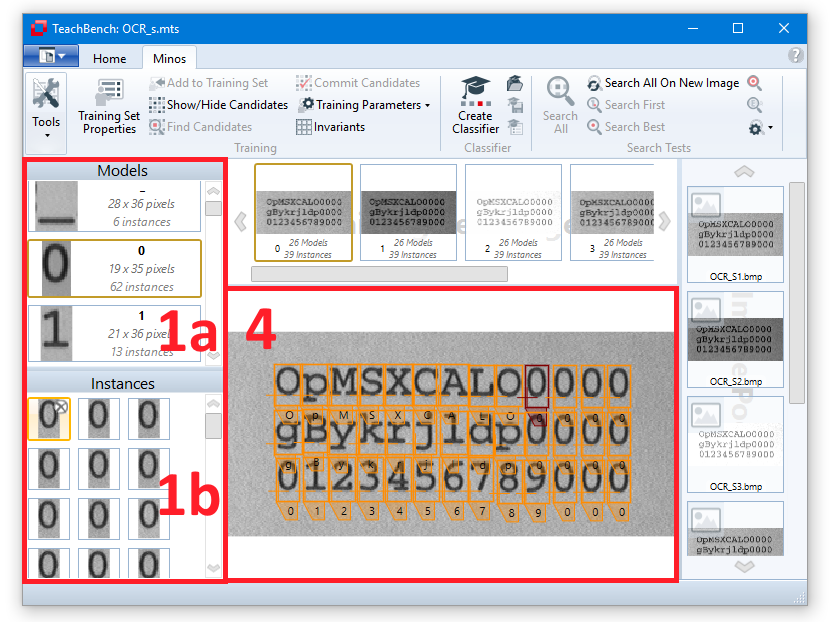

Opened (you can load one with the corresponding button from the ribbon menu ) or learned classifiers can be tested directly inside the Minos module of the TeachBench. To test a classifier, open an image that you did not use for training. Select the image you want to test with from the Image Pool by clicking it.

) or learned classifiers can be tested directly inside the Minos module of the TeachBench. To test a classifier, open an image that you did not use for training. Select the image you want to test with from the Image Pool by clicking it.

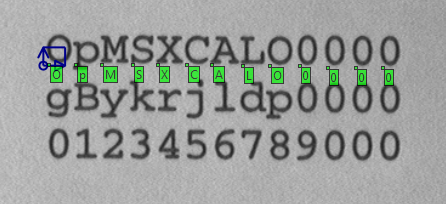

You may now either create an area of interest by dragging the mouse over the image in the center Editor View region or use the whole image by default (if no region is set explicitly). When defining an area of interest, the area can be opened in four different directions (left/top to right/bottom, left/bottom to right/top, right/top to left/bottom, right/bottom to left/top), and the direction that has been used will be indicated with two different arrows on the area of interest indicator. The area of interest is always scanned in a defined order and the direction of the two arrows defines the order in which Minos will process the pixels inside the area of interest during a search:

With Search First the* the result strongly depends on the orientation of the area of interest. Search First always interrupts a search operation if the first pattern has been found. For example in the area below the result of a Search First call will be the terminating 0 of the string.

the* the result strongly depends on the orientation of the area of interest. Search First always interrupts a search operation if the first pattern has been found. For example in the area below the result of a Search First call will be the terminating 0 of the string.

Please note: If you can't create a search region or cannot use the Search Test tool in general, then there is either no classifier available yet or the image you are working on is not suitable for Minos to work with. See section Image Restrictions in Overview.

The utilities for classification tests are located at the right end of the Minos ribbon menu and are given self-explanatory names plus a tool tip that helps understanding them.

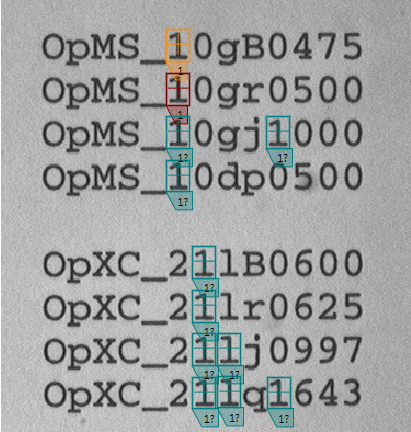

For the following images, Search All has always been used to find all matches for the models "1" and "2" with a quality threshold of 0.90 (see the Search Parameters section for details). Search results are indicated by a label in the center Editor View region.

has always been used to find all matches for the models "1" and "2" with a quality threshold of 0.90 (see the Search Parameters section for details). Search results are indicated by a label in the center Editor View region.

If false positive results are found one either tinker with the threshold and the other search parameters or go back to training and refine the models and instances with more sample images and learn a better classifier.

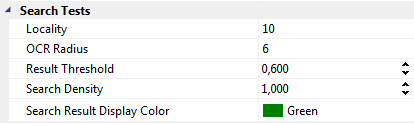

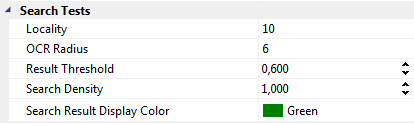

The parameters for search tests, named Search Parameters in the TeachBench, are available via the the Search Parameters drop down menu and in the under the group "Search Test" in the Minos section (Options) of the TeachBench options.

Search Density

When using a classifier on an image (or an area of interest defined on an image), a grid is superimposed on the search area and only those pixels that are part of the grid are actually checked for correspondence to one of the models available in the classifier. The density of these grid points is defined using the value Search Density (for a definition of the term Density here please see the Definitions section). Low density values reduce the time required for the pattern search considerably but increase the probability that an instance of a pattern may be missed.

Result Threshold

The threshold value represents the minimum result quality value for the pattern recognition. The higher the value, the less false matches Minos will detect but it also might miss some otherwise correct matches. The lower the value the higher will be the total number of matches Minos detects, but the chance for false positives increases as well.

OCR Radius

The OCR-Radius parameter defines the size of the OCR (Optical Character Recognition) Expectation Window when testing a classifier with the Read Token command. The expectation window is a small square, the midpoint of which is given by the sum of the Advance Vector (for the class of the last recognized character), added to the position (reference point) of that last recognized character. For details see also the sections Defining an Advance Vector and Definitions. During a read operation, the classifier only examines the pixels in the expectation window in order to recognize a character. Thus a low value for the OCR Radius permits only small deviations in the positioning point with reference to the previous character. Consequently, if there are larger variations in relative character positions a higher value for the size of the expectation window should be set (keeping in mind that too high values for the OCR radius will likely result in overlooked characters during a search).

Locality

Locality is only effective if the Search All command is used. It specifies the minimal spatial distance between matches (recognized instances). Practically speaking, if Minos recognizes two candidates within a distance lower than the given Locality (measured with the L1 norm!), it will reject the candidate with the lower quality score.

Search Result Display Color

Defines the color in which a search result will be displayed in the Main Editor View.

Another type of error which may occur are false negatives. During a search test, generally three types or errors can be identified:

False positive

An object not trained into the classifier is incorrectly identified as belonging to a class. Usually, false positives possess a significantly lower quality measure than correct search results. The identification of false positives indicates that there might not be enough positive and negative examples in the Training Set to enable the classifier to work efficiently and reliably even under difficult search conditions. So, to avoid this of problem, add the corresponding images to the contents of the Training Set (of course without marking the location of the false positive result(s)).

False negative

An object belonging to a class is not recognized as such. In other words: In this case, the search function fails to find a good match, i.e. a corresponding pattern of high quality, even though at least one is present in the image. Also in this case, the performance of the classifier can usually be improved by adding further images to the Training Set. To do this, it is necessary to use a wider range of positive instances and counter-examples. Add more images containing positive instances to the model/Training Set. Make sure that these images satisfy the framework conditions which obtain in your actual application. If you have a classifier that shows a surprising amount of false negatives during search test, it might also be a good idea to re-evaluate the images in the training set and search for instances of the class(es) involved that might have been missed during training. The "Find Candidates" command may be a useful help here (and it might make sense to slightly decrease the correlation threshold in the Minos options).

Confusing results

The result of the search function is inexplicable, incorrect or rare. Check the images in all the training sets to make sure that no positive examples have been overlooked. You can us the Find Suggestions tool from the Minos ribbon menu to aid you with finding all instances. Also see Speed Up Creating Instances - Find Suggestions.

When a model is selected in the Models/Instances View at the left (1, upper part) the center Editor View Region shows the Model. The Training Set Images of the current project are shown in the dedicated region (3). All images that you added to the Training Set from the Image Pool (5) with the button Add to Training Set from the Minos ribbon menu (2) will be shown here. When you save a project as a MTS (Minos Training Set) all the Training Set Images, models and their instances are saved. Trained classifiers (CLF) have to be saved separately via the corresponding button from the ribbon menu.

Model Region (1)

All models that belong to a Training Set are shown in the Model Region. The models are sorted ascending by their name. Under the model name you can find the size of the model region and the number of instances that have been added to the model. If you hover over a certain Model, you can remove it from the Training Set by clicking the x-icon in the upper right corner.

Model Editor View Region (2)

If you select a model from the Model Region the center Editor View will change to the Model Editor View (4). Here you can fine-tune the region for the model with the corresponding controls. You may also drag the reference point for the model, change the model name and modify the model's Advanced Vector (also see Defining an Advance Vector).

When a model is selected in the Models/Instances View (1a) the list of corresponding Instances (1b) will be shown.

Selecting one of the Instances (1b) will select the image from which it has been extracted and display it in the Editor View region (4) with the selected instance highlighted.

Highlighting will also work in the other direction: Selecting one of the Training Set images (in region 3) will bring up the selected image and show all the instances that have been trained from it. Clicking one of the instance frames will highlight it and select it in the Instances list (1b).

The highlighting color for the selected instance and the unselected instances may be defined in the options menu (Start menu > Options).

(Start menu > Options).

The following section shows you:

Minos allows you to create classifiers which recognize a pattern in more than just one rotation and scale state in one of two ways:

Adding bigger rotation/scale steps to new classes is necessary because of the way Minos works: Minos always tries to build a classifier that fits all the instances that have been added to one model. However, this is only possible if there are features common to all the instances of a trained model. Rotating models by up to ±180° will make this highly unlikely (unless the object that is being trained has some rotation-symmetric elements), making it necessary to group the instances of different scale/rotation states into different models based on their similarity (or lack thereof).

It often makes sense to combine both: If e.g. the aim is to have a rotation invariant classifier, it's recommendable to first generate the rotations up to ±180° with a higher step size (for example 10°) and then "soften" the models that have been produced by adding smaller rotation steps as well (e.g. ±3°).

The Invariants tool permits a rapid and automatic generation of the such models from existing models (see also Invariants).

permits a rapid and automatic generation of the such models from existing models (see also Invariants).

Exemplary Work Flow

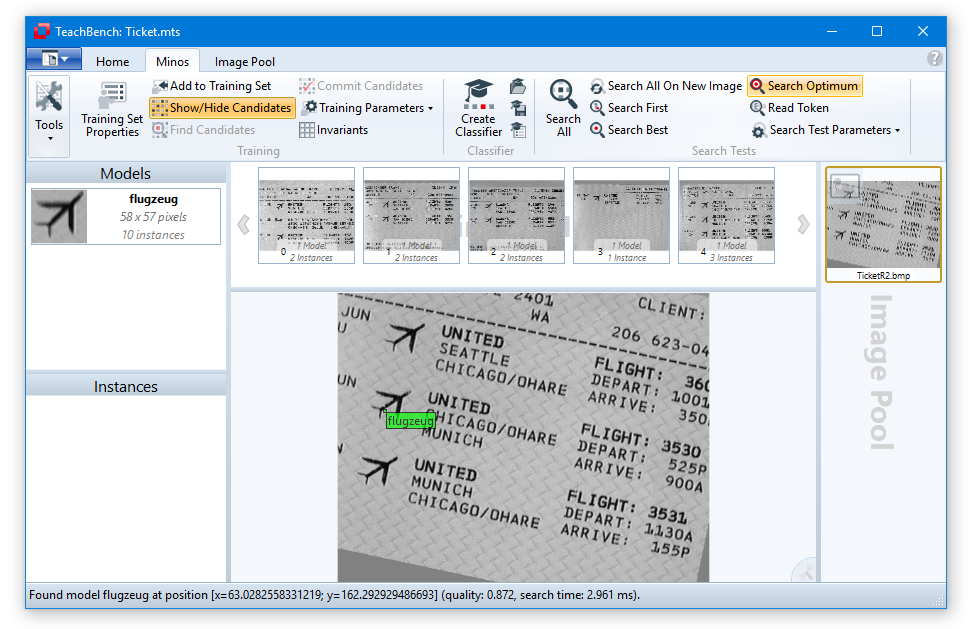

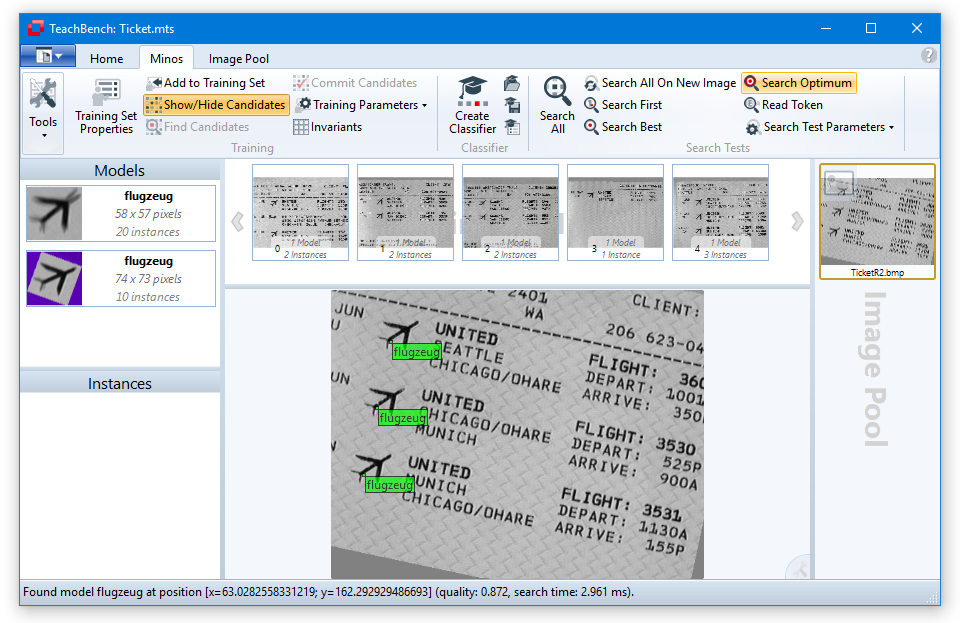

First, open the image TicketR2.bmp from the CVBTutorial/Minos/Images/Ticket sub directory of your Common Vision Blox installation. Then open the files Ticket.mts (Minos Training Set) and Ticket.clf (Classifier) from the same directory. Now press the Search Optimum button from the Search Tests section of the Minos ribbon menu. As you can see, not all instances are recognized correctly.

from the Search Tests section of the Minos ribbon menu. As you can see, not all instances are recognized correctly.

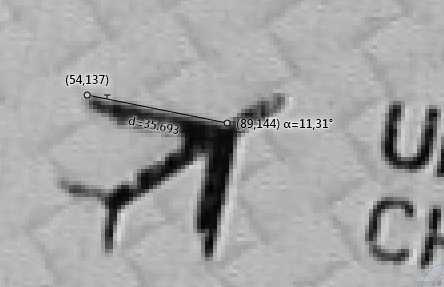

To improve the classifier, one may first determine the angle of the rotated object in the image TicketR2.bmp. To do this you can use the Measurement Line tool (see Image Pool View). In the original model, the line from the tip of the left airplane wing to the point at which the wing joins the fuselage is almost horizontal, about 0°. The angle measured here is approximately 11°.

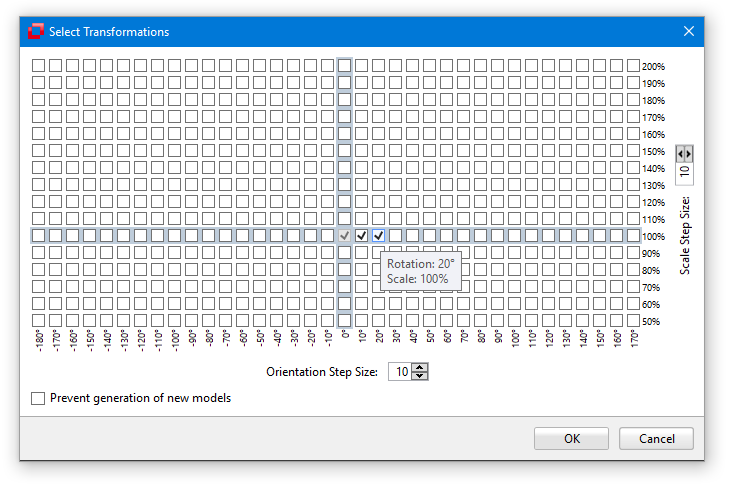

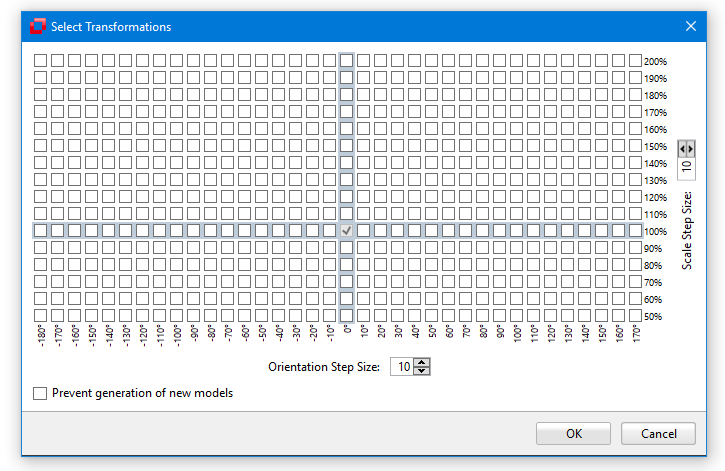

To obtain an optimum search result, is is therefore required that the model is rotated approximately 11°. To do this, click the Invariants button from the Training section of the Minos ribbon menu. Now click the two grid squares which lie immediately to the right of the origin. Since the grid has a resolution of 10, the values 10 and 20 are selected.

from the Training section of the Minos ribbon menu. Now click the two grid squares which lie immediately to the right of the origin. Since the grid has a resolution of 10, the values 10 and 20 are selected.

To generate the selected transformations, click the OK button. Minos will automatically generate a new rotated model with the corresponding instances.

Since the content of the MTS (Minos Training Set) has changed, it is necessary to re-learn (and maybe save) the classifier.

Now test the classifier using the image TicketR2.bmp. You will see that the output quality measure is significantly higher and all three objects are now recognized.

To increase the speed of the search, reduce the Search Density parameter to approximately 500 and test the performance of the classifier again. Reduce the Search Density to 250 and see how the search speed increases. (also see Search Parameters)

The Invariants tool from the Training section of the Minos ribbon menu opens a dialog box with which additional model sets can be automatically created from existing models by means of geometrical transformations (scaling and rotation).

from the Training section of the Minos ribbon menu opens a dialog box with which additional model sets can be automatically created from existing models by means of geometrical transformations (scaling and rotation).

The Invariants tool greatly simplifies the learning of patterns which can occur in different sizes and angles of rotation (see also Rotation and/or Size-Independent Classifiers). One may simply define a single model and then use Invariants to generate additional models of the same pattern with the required scaling or rotation.

As described in Rotation and/or Size-Independent Classifiers there are situations where it makes sense to use the Invariants tool more than once on a Training Set. However please note that when using the Invariants tool repetitively, duplicate transformations will not be detected and if they do occur (e.g. by selecting +10° & +20° in the first application, then -10° and -20° in the second) they will be retained even though they do not provide useful information.

To avoid wasting storage space, it is advisable to proceed as follows when using the Invariants tool: Save the Minos Training Set (MTS) before using the Invariants tool and right before you are ready to generate your classifier (i.e. if you feel that all the necessary training has been done). Then generate the Invariants and learn the classifier from the augmented Training Set. Saving the training set is not really necessary because the generated invariants may easily be re-generated from the last saved MTS file if the transformations are known (it might be a good idea to write them into the classifer's comments, though...).

Warning: The size of the storage (and memory) space which the MTS requires after the transformations is approximately the product of the size of the original MTS multiplied by the number of transformations performed.

The Invariants tool opens a dialog box which contains a grid. Each cell in the grid corresponds to a possible orientation and size of the model in the MTS. The cells which have the size 100% and the position 0° are highlighted to provide an orientation for the user. Initially the grid is gradated in steps of 10. To obtain a finer resolution (e.g. steps of 1), you can change the step size in the box at the bottom and/or to the right of the grid matrix. The smaller steps you choose the smaller the extent to which the transformation may be performed.

In addition to its general purpose pattern recognition capabilities, specialized high-performance functions for Optical Character Recognition (OCR) have been implemented in Minos. The following chapter illustrates how to train characters in Minos, learn the classifier and test it.

The character definition process is similar to that of general purpose patterns. However, a number of additional guidelines have to be taken into account since the purpose of an OCR application is to recognize strings of characters in sequence and is thus somewhat different from "standard" pattern recognition scenario. However, these guidelines can also be applied to different applications, for example completeness control, since one significant variable for OCR, the known distance between two objects, can also be applied for different scenarios.

Before creating an OCR application you should consider the two following points:

Position

The position of a character is the pixel which represents the taught anchor point/reference point, in this case the intersection of the baseline with the extension of the left character edge. Most characters fill the entire area they occupy, but some, however, do not. Thus, for example, narrow characters such as the "i", comma character, period character and others, occupy an area which is larger than the character itself. This is particularly clear in fonts with fixed inter-character spacings.

Feature window

The feature window for all characters should be roughly identical in height, i.e. "Top", "Left" and "Bottom" should have identical values for the feature windows of the different characters. The "Right" value of a feature window describes the distance from a character's positioning point through to and including the pixel before the positioning point of the next character. In the case of fixed pitch character sets, the "Right" values of the various feature windows are also roughly identical.

Advance Vector

A character's so-called Advance Vector indicates the position at which the next character is expected, i.e. the pixel on the character's baseline which lies one pixel before the left edge of the feature window of the following character.

Expectation Window

The expectation window is a small square, the midpoint of which represents the target pixel of the advance vector. When performing a read operation (e.g Read Token), Minos only examines the pixel of this expectation window in order to recognize a character (in the TeachBench this parameter may be set in the Search Parameters, see OCR-Vector).

Don't care points

You can use the Mask Editor to mark undesired, interfering points within a feature window as areas which are not considered by Minos when learning the classifier (see also the section Don't care masks).

).

).